Steem Developer Update (Graphene 2.0)

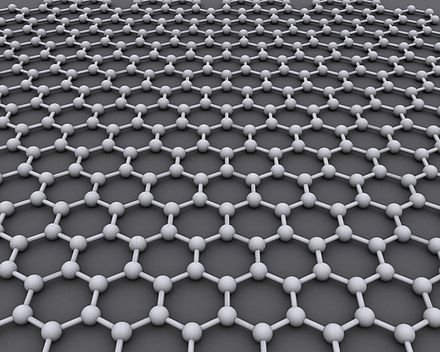

Image created by AlexanderAlUS under CC BY-SA 3.0

This update is for those who are interested in what our team is working on behind the scenes and to let the community know what to expect in the next couple of weeks. We are really excited about what some of these updates mean for Steem and blockchain technology in general.

Introducing Graphene 2.0

Graphene is the underlying database technology that powers many different blockchains (Steem, BitShares, Golos, etc.). Graphene 1.0 was groundbreaking in its ability to process hundreds of thousands of transactions per second. It is extremely developer friendly and enabled the development of Steem in just a couple of months. Graphene 2.0 is a significant overhaul of this backend technology, aimed at helping platforms like steemit.com scale in a secure and economical manner.

Adopting Memory Mapped File for Storage

Under Graphene 2.0, all blockchain consensus state will be maintained in a memory mapped file that can be shared among multiple processes. This means application state is effectively “on disk” and the operating system will handle paging data to and from disk as needed. As the blockchain's memory requirements grow, this will provide many huge benefits:

- Faster load and exit times

- Parallel access to the database

- More robust against crashes

- Less frequent database corruption

- Instant “snapshotting” of entire state

- Serve more RPC requests from the same memory
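A minimal sketch of the idea, assuming a tiny fixed-size state file that holds a single balance. The file name, `STATE_SIZE`, and the `map_state` helper are hypothetical and are not part of Graphene; two mappings in one Python process stand in for two separate processes.

```python
import mmap
import os
import struct
import tempfile

STATE_SIZE = 4096               # hypothetical size of the state file, in bytes
BALANCE = struct.Struct("<Q")   # one 8-byte unsigned balance stored at offset 0


def map_state(path: str) -> mmap.mmap:
    """Map the state file read-write; the OS pages data to and from disk."""
    with open(path, "r+b") as f:
        return mmap.mmap(f.fileno(), STATE_SIZE, access=mmap.ACCESS_WRITE)


path = os.path.join(tempfile.mkdtemp(), "state.bin")
with open(path, "wb") as f:
    f.truncate(STATE_SIZE)      # create a zero-filled file of the right size

writer = map_state(path)        # stands in for the consensus process
reader = map_state(path)        # stands in for a second process (e.g. an API)

# A write through one mapping is visible through the other without copying.
BALANCE.pack_into(writer, 0, 1000)
seen_by_reader = BALANCE.unpack_from(reader, 0)[0]

writer.flush()                  # ask the OS to write dirty pages back to disk
writer.close()
reader.close()

# "Restarting" is just mapping the file again; nothing is parsed or re-indexed.
reloaded = map_state(path)
balance_after_restart = BALANCE.unpack_from(reloaded, 0)[0]
reloaded.close()
```

Because both mappings are backed by the same file, extra readers share the same pages of memory instead of each holding a private copy of the state.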

Problems with Graphene 1.0

Graphene is designed to keep all blockchain consensus state in memory using what is arguably one of the highest performance in-memory data structures (Boost Multi-index Containers). For traditional cryptocurrencies this approach scales very well because the application state (account balances) is small relative to the transactional throughput (balance transfers and trades).

Steem has a much larger application state than any other cryptocurrency. This state includes all of the article content, feed lists, and votes. Additionally, this state is queried by thousands of passive readers who are interested in browsing blockchain explorers like steemit.com.

Steem is currently the second largest blockchain measured by transactions-per-second. Only Bitcoin is processing more transactions than Steem. The Steem consensus state is growing faster than any other blockchain because almost every operation adds more state than it consumes (especially for full nodes serving steemit.com).

Currently the Steem nodes that power steemit.com consume over 14 GB of RAM and this number is growing at a rapid rate. Every time we want to add a new feature it usually means increasing the amount of RAM required.

Slow Exit and Load Times

When a full node starts up, it must process and index many gigabytes of data. This process currently takes tens of seconds when there are no problems. If any problems are detected while loading the saved state, the entire blockchain must be processed to regenerate the state from the history of transactions. This blockchain replay process can take over 5 minutes on even the fastest machines.

When a full node shuts down, it must save all of this data to disk. This can also take tens of seconds. If anything goes wrong while saving, the next load will require a full 5+ minute replay of the blockchain.
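The load path described above can be sketched roughly as follows. This is an illustration under stated assumptions, not how steemd stores its state: the operation format, the JSON snapshot, and the SHA-256 checksum are all hypothetical.

```python
import hashlib
import json


def apply_op(state: dict, op: tuple) -> None:
    """Apply one operation to the state (hypothetical operation format)."""
    kind, account, amount = op
    if kind == "deposit":
        state[account] = state.get(account, 0) + amount
    elif kind == "withdraw":
        state[account] = state.get(account, 0) - amount
    else:
        raise ValueError(f"unknown operation: {kind}")


def replay(history: list) -> dict:
    """Regenerate the state from scratch by applying every operation in order."""
    state = {}
    for op in history:
        apply_op(state, op)
    return state


def save_snapshot(state: dict) -> dict:
    """Serialize the state together with a checksum of the serialized bytes."""
    body = json.dumps(state, sort_keys=True)
    return {"body": body, "checksum": hashlib.sha256(body.encode()).hexdigest()}


def load_state(snapshot, history: list):
    """Use the snapshot if it is intact; otherwise fall back to a full replay."""
    if snapshot is not None:
        body = snapshot["body"]
        if hashlib.sha256(body.encode()).hexdigest() == snapshot["checksum"]:
            return json.loads(body), "snapshot"
    return replay(history), "replay"


history = [("deposit", "alice", 10), ("deposit", "bob", 5), ("withdraw", "alice", 3)]
snapshot = save_snapshot(replay(history))

fast_state, fast_path = load_state(snapshot, history)

# Simulate a save that was interrupted partway through.
damaged = {"body": snapshot["body"][:-2], "checksum": snapshot["checksum"]}
slow_state, slow_path = load_state(damaged, history)
```

Both paths end in the same state because replay is deterministic; the only difference is the cost, which grows with the length of the history.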

Single-Threaded Bottleneck Limits Connections

Graphene was designed to be single threaded for performance reasons. The very nature of blockchain technology requires a deterministic generation of consensus state which means a definite sequential order of operations for everything that impacts shared state. The overhead of multithreaded synchronization is greater than any benefits we might gain.

In a normal blockchain environment this is perfectly OK, but Steem isn’t your normal blockchain. Our Steem nodes are processing requests from thousands of clients every second. Each of these requests must be proxied to the thread that is allowed to read and write to the database. To make a long story short, each Steem node is only able to process about 150 simultaneous connections before users start experiencing a degradation in website performance.
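A rough sketch of that proxying pattern, assuming a single owner thread and a request queue. The `DatabaseThread` class and the `credit` operation are hypothetical and only illustrate why every client request ends up waiting in one line.

```python
import queue
import threading
from concurrent.futures import Future


class DatabaseThread:
    """Owns the state; every read or write runs on one dedicated thread."""

    def __init__(self):
        self._state = {}
        self._requests = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        while True:
            item = self._requests.get()
            if item is None:            # sentinel: shut down
                break
            func, future = item
            try:
                future.set_result(func(self._state))
            except Exception as exc:
                future.set_exception(exc)

    def submit(self, func) -> Future:
        """Proxy a request to the database thread and return a future."""
        future = Future()
        self._requests.put((func, future))
        return future

    def stop(self):
        self._requests.put(None)
        self._thread.join()


def credit(state):
    # Safe without locks only because a single thread ever touches `state`.
    state["alice"] = state.get("alice", 0) + 1
    return state["alice"]


db = DatabaseThread()
clients = [threading.Thread(target=lambda: db.submit(credit).result())
           for _ in range(50)]
for c in clients:
    c.start()
for c in clients:
    c.join()

final = db.submit(lambda state: state["alice"]).result()
db.stop()
```

The state stays consistent with no locking, but all 50 clients are served one at a time, which is the bottleneck described above.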

In order to maintain good performance for all users, steemit.com runs many instances of the Steem node and load balances requests among those instances. Each of these instances requires another 14 GB of RAM (and growing).

Software Crashing is Expensive to Recover

Any software bug that causes an unexpected crash will result in a corrupt application state. When a node crashes, it can take minutes to recover while maxing out a CPU core.

Any process that is servicing requests from users is at greater risk of software bugs and crashes because these processes change more frequently than the core consensus logic.

API Versioning

Any time we upgrade our API, we have to run another full node. Supporting multiple versions of our API in parallel requires significant resources. Under Graphene 2.0, multiple APIs can share the same database and can be started and stopped at will.

Better Access Control

It is now possible to serve all of our blockchain database queries from a process that has mapped the database in READ-ONLY mode. This means the operating system will enforce that no API call can inadvertently corrupt the state of the blockchain consensus database.
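A minimal sketch of a read-only mapping, assuming a small placeholder file in place of the real consensus database. Note that Python's `mmap` rejects the write itself with a `TypeError`; in a native process, a write to a read-only mapping would instead be stopped by the operating system's page protection.

```python
import mmap
import os
import tempfile

# Placeholder file standing in for the consensus database.
path = os.path.join(tempfile.mkdtemp(), "state.bin")
with open(path, "wb") as f:
    f.write(b"consensus-state")

# Map the whole file read-only, as an API process would.
with open(path, "rb") as f:
    view = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)

data = bytes(view[:9])          # reads work normally

try:
    view[0:1] = b"X"            # any attempt to modify the mapping fails
    write_rejected = False
except TypeError:
    write_rejected = True

view.close()

with open(path, "rb") as f:
    on_disk = f.read()          # the underlying file is untouched
```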

Parallel Network Protocols

Under the new model we can separate the P2P networking code from the core database code and logic. This separation will allow us to add multiple networking protocols in parallel while maintaining an operating system enforced firewall between publicly facing network code and the core blockchain validation logic.

This will allow us to start, stop, and restart the P2P networking infrastructure without having to restart the entire blockchain database.

Summary

Graphene 2.0 will involve significant updates to the very core of Steem and will take us a couple of weeks to implement and test. Because this update is so far-reaching, all other new blockchain features will be put on hold until this migration is complete. There will be an extensive period of testing where old and new versions of Steem will be running side by side to ensure we do not accidentally introduce an unexpected, consensus-changing hard fork.

After the migration to Graphene 2.0 is complete, we will return our attention to Curation Guilds.

It seems that this update is specifically for steemit. Not bad, but a little sad for Bitshares. I really want to ask CNX, will they update BTS to Graphene 2.0 too?

LOL!!

Oh boy, I wasn't expecting this at all, just...amazing!

Loving your GIF by the way! ;) the image is from one of my very favorite movies of all time... Namaste :)

Few questions:

@ned mentioned on steemspeak that the steemit team is using/is going to use Scrum. Are you going to use the new GitHub feature for scrum boards, which GitHub now calls projects? I already noticed a test board was created: https://github.com/steemit/steemit.com/projects/1

What is the ETA for the migration to Graphene 2.0, and how are you going to split this huge task into smaller stories?

I heard that the second voting period (30 days) is not longer because of memory. I guess that with a memory mapped file the size of that data should not be such a big problem... so is it possible that a third voting period (like 1 year) will be introduced?

I feel it's very important, for the real quality of steemit posts to flourish, that there be a long-term incentive for the continuing value of posts.

It's important to design an incentive for people to uproot "old" content and still have the author get compensated for that. This will inspire much more in-depth content and bigger-scope development projects, and discourage quick posts made just to stay active and get more quick votes.

The 30-day issue will definitely solve many problems I see, like in @pharesim's project pevo, and other content...

funny... yesterday I read whitepaper of his project ;) It's a small world :)

Will all the tools and libraries developed so far (@furion's, @xeroc's libs and others) be backward compatible, or will they need to be rewritten to support 2.0?

Excellent question! I haven't seen anything about backwards compatibility in the post except the part about API versioning. Will everyone tapping into the current blockchain need to upgrade too?

To me it seems they basically changed the way they operate the blockchain as a service and not the blockchain as a consensus database. Unless I am missing something and they forget to add a legacy API, there shouldn't be much of a problem... except more work for devs.

This is a big deal! My sole request:

Hi @steemitblog, may I translate this article into Spanish? The idea and guidelines would follow this:

TRANSLATE ANY OF STELLABELLE'S POSTS & KEEP ALL REWARDS: Translation Opportunities For Foriegn Language

TRANSLATION OPPORTUNITY ON STEEMIT – Building Our Community and Reaching Out to Others While Earning STEEM

Thanks!

Go for it!

Thank you for allowing us to continue the progress of steemit with this translation effort.

I think that there is a way for this to be a huge benefit to the community and am working on a development post for it right now, which I'll put up later.

Thanks again!

Thank you @dantheman!

Spanish translation:

Actualización del Desarrollador de Steem (Graphene 2.0)

Here is a post about how stellabelle and I are moving forward to break through the language barrier and invest in the international steemit community long-term

May I ask what your thoughts are on the matter?

Although I don't have the answer for you, my guess is that they would be happy if you translated it to Spanish, as that would mean more people could understand it. This account is run by the founders of Steemit Inc.

Thanks @stellabelle. I would prefer if @dantheman, @ned or @steemitblog could provide an explicit authorization, because I risk being flagged by other Spanish-speaking steemians if I don't have one.

I'll be patient and wait for an answer. Also, each new post from @ned or @dantheman is an opportunity to try and ask again :o)

YEAH, smart move. Do you translate books, too?

I'm more focused on near-real-time translations at the moment, @stellabelle. Anyway, it depends on the word count (a 30k-word e-book is not the same as a 300k+ word book).

Excellent! Is there anything we can do as a community to help test? Also curious of your thoughts about posts like this earning rewards. I'd like it to be voted up for visibility, but paying steemitblog from the reward pool seems odd given how much steem the organization behind steemit currently has.

IMO they totally deserve that ;)

It would be a great feature to be able to redirect steem rewards from posts with one input box during publication. Then... it would be possible to donate everything to @null.

I thought the last fork release had a mechanism to have non-paying posts? That would be better than decreasing the reward pool for everyone else and distributing to null, IMO.

I agree, those developing this should be handsomely rewarded, but there's just so much criticism out there already that forgoing more payouts would go a long way from a PR perspective. They gain so much new STEEM via share dilution protection due to their massive VEST holdings that they have plenty to last them a very long time. If they don't distribute it well, they will be stuck holding a super majority of something with far less value than it could have had. Just my opinion.

That mechanism has been in Steem for quite a while, at least a few hardforks. But there's been no UI update to steemit.com to make use of it. Interestingly from what I've seen it's not a binary option but a variable to cap post rewards at an arbitrary number.

Currently all posts made with steemit.com are capped at $1,000,000 in post rewards. So limiting! :P

Ah yes, I remember seeing that number in steemd. I was thinking of the "Decline Voting Rights" feature.

Maybe vote with reduced power? A post with 400+ votes will still be visible regardless of payout I believe

I don't think vote count alone matters. The strength of the vote in terms of VESTS is what's important, as far as I know.

Some very powerful technology in there from which we all can gain. All for one and one for all! Namaste :)

@dantheman - I have some questions about the impact of this announcement on BitShares.

A) What is your guesstimate of the effort to upgrade BitShares? (Once you complete the upgrade for Steem, I'm sure your estimate would be more accurate.)

B) Would this require a significant increase in RAM used by BitShares witness nodes for the existing transaction volume?

C) In your opinion, should the BitShares community begin looking at upgrading to Graphene 2.0, or should it wait until transaction volume dictates the need for it? BitShares has been amazingly stable for months now, so an upgrade of this magnitude has a strong probability of affecting that.

D) Aside from transaction throughput, are there any other compelling reasons an upgrade of BitShares to Graphene 2.0 should be considered, and what degree of urgency would you assign to them?

As I have no doubt you're extremely busy, perhaps you could have Stan report the answers to these questions in this Friday's BBC mumble session.

Thanks for your extraordinary work, you really rock backend architecture!

Steemon!