[GUIDE] Optimise your RPC server for better performance

What is an RPC server?

An RPC server is a type of Steem server usually run by witnesses like myself (@someguy123). RPC servers are used by third-party applications such as MinnowBooster, voting bots, interfaces such as Busy and ChainBB, and AnonSteem.

They provide an API (Application Programming Interface) to the Steem network, allowing developers to build applications that read data such as posts or transfers from the network, and that broadcast transactions such as votes and comments.
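As a rough illustration of what "reading data" looks like from an application's side, here is a minimal Python sketch that sends the same `get_dynamic_global_properties` JSON-RPC 2.0 request used in the benchmark later in this post. It uses only the standard library; the node URL is whatever endpoint you point it at, and error handling is deliberately minimal.

```python
import json
import urllib.request


def build_rpc_payload(method, params=None, request_id=1):
    """Serialize a JSON-RPC 2.0 request body to UTF-8 bytes."""
    return json.dumps({
        "jsonrpc": "2.0",
        "method": method,
        "params": params if params is not None else [],
        "id": request_id,
    }).encode("utf-8")


def call_rpc(url, method, params=None, timeout=10):
    """POST one JSON-RPC request to `url` and return its "result" field.

    Raises RuntimeError if the node answers with a JSON-RPC error object.
    """
    request = urllib.request.Request(
        url,
        data=build_rpc_payload(method, params),  # supplying data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    if "error" in body:
        raise RuntimeError(body["error"])
    return body["result"]
```

Usage would be something like `call_rpc("https://your-rpc-node.example", "get_dynamic_global_properties")`, where the URL is a placeholder for your own node.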

These servers are extremely expensive: they currently require 512GB of RAM to operate, and that requirement grows every day. Most of them are operated by top 20 witnesses because of their high running costs.

It can be difficult to find a 512GB server, but Privex Inc. sells them for just $600/mo (DISCLAIMER: I am the CEO of Privex), and accepts cryptocurrency such as Bitcoin, Litecoin, STEEM, and SBD.

What causes RPC servers to run slowly?

There is a mix of issues at hand:

- steemd is single-threaded while resyncing, and does not make good use of multiple cores even after syncing, so single-core performance is extremely important.

- RPC nodes require a huge amount of RAM to operate at good speeds. Running from NVMe or SSD instead of RAM will cause the node to perform very poorly. RAM speed may also influence performance.

- SSDs, if not NVMe drives, are necessary for the blockchain. RAID 0 is strongly recommended for increased performance.

- Public nodes run into various networking problems from the high load they suffer.

Hardware

The first and foremost thing is to obtain good hardware. You want a CPU with good single-core performance rather than one with dozens of cores. This cuts replay time and improves performance.

Storing the shared memory file in RAM (e.g. /dev/shm) massively reduces replay time and improves stability. If that is not an option, you will want several NVMe drives in RAID 0 dedicated to the shared memory file. Because of the heavy reads and writes, it is advisable to use a high-performance filesystem such as XFS on the NVMe drives, disable access times (the noatime mount option), and have the filesystem write its journal to a different disk (e.g. SATA SSDs).

Public RPC nodes can chew through massive amounts of bandwidth. The public Privex load balancer (steemd.privex.io) has gone through 20 TB in just under 3 months. Network speeds of 300 Mbps or more are recommended (Privex sells 1 Gbps (1000 Mbps) servers), with a minimum of 5 TB of bandwidth per month.
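To put the 20 TB figure in perspective, the small calculation below converts it into a monthly volume and an average line rate. It is a sketch under two assumptions: decimal terabytes (10^12 bytes), and "just under 3 months" treated as 90 days.

```python
SECONDS_PER_DAY = 86_400


def monthly_tb(total_tb, days, days_per_month=30):
    """Scale a transfer total observed over `days` to a per-month figure (TB)."""
    return total_tb * days_per_month / days


def average_mbps(total_tb, days):
    """Average throughput in megabits per second (decimal units: 1 TB = 10**12 bytes)."""
    bits = total_tb * 10**12 * 8
    return bits / (days * SECONDS_PER_DAY) / 10**6


# Assumption: the post's "just under 3 months" is approximated as 90 days.
print(f"{monthly_tb(20, 90):.2f} TB/month")    # 6.67 TB/month
print(f"{average_mbps(20, 90):.1f} Mbps avg")  # 20.6 Mbps avg
```

So the load balancer averaged about 6.7 TB per month, above the 5 TB minimum. A sustained average of roughly 21 Mbps is far below the suggested 300 Mbps, so that figure presumably leaves headroom for traffic peaks rather than describing typical load.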

Network Optimization

It's recommended to put NGINX in front of your RPC node, disable access logs, and set up rate limiting as follows:

# ----------------------
# nginx.conf (limit_req_zone must go inside the http { } block)
limit_req_zone $binary_remote_addr zone=ws:10m rate=1r/s;
# ----------------------
# sites-enabled/default.conf (inside the server { } block)
limit_req zone=ws burst=5;
access_log off;
keepalive_timeout 65;
keepalive_requests 100000;
sendfile on;
tcp_nopush on;
tcp_nodelay on;

This will restrict the rate at which individual users can send requests, preventing abuse.
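To get a feel for what `rate=1r/s burst=5` means in practice, here is a simplified Python model of the leaky-bucket method that nginx's `limit_req` documentation describes. It is a sketch for intuition, not nginx's code: it only decides accept/reject per client IP, and it ignores that, without the `nodelay` parameter, nginx delays accepted excess requests instead of serving them immediately. Rejected requests get a 503 by default (changeable with `limit_req_status`).

```python
class LeakyBucket:
    """Simplified per-client model of nginx limit_req accounting (not nginx's code).

    `excess` counts requests above the configured rate; it drains at `rate`
    requests per second, and a request is rejected when the new excess would
    exceed `burst`.
    """

    def __init__(self, rate, burst):
        self.rate = rate      # requests per second, e.g. 1 for "1r/s"
        self.burst = burst    # e.g. 5 for "burst=5"
        self.excess = 0.0
        self.last = None      # time of the last accepted request

    def allow(self, now):
        if self.last is None:
            excess = 0.0  # first request from this client starts with an empty bucket
        else:
            drained = self.rate * (now - self.last)
            excess = max(self.excess - drained + 1, 0.0)
        if excess > self.burst:
            return False  # nginx would answer this request with 503 by default
        self.excess, self.last = excess, now
        return True


bucket = LeakyBucket(rate=1, burst=5)
print([bucket.allow(0.0) for _ in range(7)])
# [True, True, True, True, True, True, False]
```

In this model a client can fire 1 + burst requests at once; after that it gets roughly one request per second, which is the abuse protection the config aims for.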

Further network optimization

One problem is that some applications open an excessive number of connections, which hurts performance.

To protect against this, you can use iptables (use iptables-persistent/netfilter-persistent to keep the rules across reboots) to restrict each IP to 10 simultaneous connections.

iptables -A INPUT -p tcp --syn --dport 443 -m connlimit --connlimit-above 10 --connlimit-mask 32 -j REJECT --reject-with tcp-reset

iptables -A INPUT -p tcp --syn --dport 80 -m connlimit --connlimit-above 10 --connlimit-mask 32 -j REJECT --reject-with tcp-reset

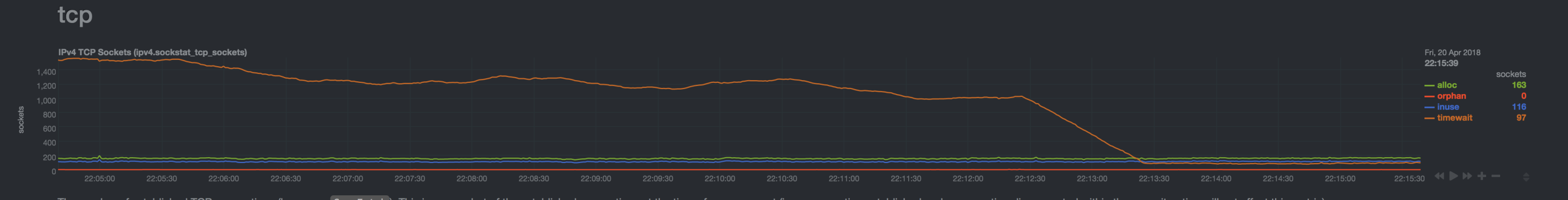

Notice the massive drop in connections after adding these iptables rules. This freed up a large share of the connection pool and improved response times.
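If you want numbers like these without a monitoring stack, one option is to read the kernel's socket table directly. The Python sketch below parses /proc/net/tcp (IPv4 only; IPv6 sockets live in /proc/net/tcp6 and are ignored here), counts established connections per remote IP on a local port, and tallies TIME_WAIT sockets. It assumes a little-endian (e.g. x86-64) host, because the kernel prints IPv4 addresses as native-endian hex integers. `ss` reports the same information; this is only a dependency-free illustration.

```python
import socket
import struct
from collections import Counter

ESTABLISHED = "01"  # state codes as printed in the "st" column
TIME_WAIT = "06"


def decode_address(field):
    """Turn '0100007F:01BB' into ('127.0.0.1', 443), assuming a little-endian host."""
    ip_hex, port_hex = field.split(":")
    ip = socket.inet_ntoa(struct.pack("<I", int(ip_hex, 16)))
    return ip, int(port_hex, 16)


def read_sockets(text):
    """Yield (local_port, remote_ip, state) for each row of /proc/net/tcp text."""
    for line in text.splitlines()[1:]:  # the first line is the column header
        fields = line.split()
        if len(fields) < 4:
            continue
        _, local_port = decode_address(fields[1])
        remote_ip, _ = decode_address(fields[2])
        yield local_port, remote_ip, fields[3]


def connections_per_ip(text, port=443):
    """Count ESTABLISHED connections to a local port, grouped by remote IP."""
    return Counter(ip for local_port, ip, state in read_sockets(text)
                   if local_port == port and state == ESTABLISHED)


def count_state(text, state=TIME_WAIT):
    """Count sockets in a given state (TIME_WAIT by default) across all ports."""
    return sum(1 for _, _, st in read_sockets(text) if st == state)
```

On the server itself you would pass `open("/proc/net/tcp").read()` and call `.most_common(10)` on the result of `connections_per_ip` to see which clients hold the most connections.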

Another issue many RPC nodes face is stale connections. This may be related to poor networking code within steemd or in third-party libraries for interfacing with Steem.

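This can be addressed by tweaking TIME_WAIT reuse, recycling and timeouts. (Be aware that tcp_tw_recycle is known to break clients connecting from behind NAT, and it was removed entirely in Linux 4.12, so on newer kernels only the other two settings apply.)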

echo 30 > /proc/sys/net/ipv4/tcp_fin_timeout

echo 1 > /proc/sys/net/ipv4/tcp_tw_recycle

echo 1 > /proc/sys/net/ipv4/tcp_tw_reuse

To persist these settings across reboots, place the following in /etc/sysctl.conf (taken from LinuxBrigade):

# Shorten how long orphaned sockets stay in FIN-WAIT-2 (TIME_WAIT itself is fixed at 60 s on Linux)
net.ipv4.tcp_fin_timeout = 30
# Recycle and Reuse TIME_WAIT sockets faster
net.ipv4.tcp_tw_recycle = 1
net.ipv4.tcp_tw_reuse = 1
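Settings in /etc/sysctl.conf only take effect after a reboot or `sysctl -p`, so it can be worth confirming that the live kernel values match. The sketch below is a hypothetical helper, not a standard tool (`sysctl -a` shows the same data): it parses simple `key = value` lines and compares them with the files under /proc/sys. A key that no longer exists on the running kernel, such as tcp_tw_recycle on Linux 4.12+, shows up with a live value of None.

```python
from pathlib import Path


def sysctl_path(key, root="/proc/sys"):
    """Map a dotted key like 'net.ipv4.tcp_fin_timeout' to its file under /proc/sys."""
    return Path(root, *key.split("."))


def parse_sysctl_conf(text):
    """Parse 'key = value' lines, skipping blank lines and # or ; comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            # Normalize whitespace so multi-value keys compare cleanly.
            settings[key.strip()] = " ".join(value.split())
    return settings


def mismatches(conf_text, root="/proc/sys"):
    """Return {key: (wanted, live)} for every setting whose live value differs.

    `live` is None when the key does not exist on the running kernel.
    """
    result = {}
    for key, wanted in parse_sysctl_conf(conf_text).items():
        path = sysctl_path(key, root)
        live = " ".join(path.read_text().split()) if path.exists() else None
        if live != wanted:
            result[key] = (wanted, live)
    return result
```

Feeding it the contents of /etc/sysctl.conf and getting an empty dict back means the running kernel already uses those values.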

As can be seen above, tweaking these networking flags helped reduce TIME_WAIT connections massively, further cleaning up the connection pool and improving response times.

BEFORE network adjustments

curl -w "@curl-format.txt" -s --data '{"jsonrpc": "2.0", "method": "get_dynamic_global_properties", "params": [], "id": 1 }' https://direct.steemd.privex.io

time_namelookup: 0.067882
time_connect: 0.098762
time_appconnect: 0.173686
time_pretransfer: 0.173719
time_redirect: 0.000000
time_starttransfer: 0.469058
----------
time_total: 0.469133

AFTER network adjustments

curl -w "@curl-format.txt" -s --data '{"jsonrpc": "2.0", "method": "get_dynamic_global_properties", "params": [], "id": 1 }' https://direct.steemd.privex.io

time_namelookup: 0.004555
time_connect: 0.033890
time_appconnect: 0.105844
time_pretransfer: 0.105878
time_redirect: 0.000000
time_starttransfer: 0.137760
----------
time_total: 0.137781
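The two runs above can be compared with a few lines of Python. The contents of curl-format.txt are not shown in the post; a file with lines such as `time_total: %{time_total}\n` (standard `curl -w` variables) produces output in this shape, so that layout is assumed here. The sketch parses the output and computes the overall speedup, plus the gap between `time_pretransfer` and `time_starttransfer`, which roughly approximates how long the client waited for the server's first byte.

```python
def parse_curl_timings(text):
    """Parse 'time_xxx: seconds' lines from curl -w output into a dict of floats."""
    timings = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().startswith("time_"):
            timings[key.strip()] = float(value)
    return timings


def summarize(before, after):
    """Compare two curl -w outputs: total speedup and approximate server wait."""
    b, a = parse_curl_timings(before), parse_curl_timings(after)
    return {
        "speedup": b["time_total"] / a["time_total"],
        # Approximate server wait: from "ready to send" until the first response byte.
        "wait_before": b["time_starttransfer"] - b["time_pretransfer"],
        "wait_after": a["time_starttransfer"] - a["time_pretransfer"],
    }


BEFORE = """time_pretransfer: 0.173719
time_starttransfer: 0.469058
time_total: 0.469133"""
AFTER = """time_pretransfer: 0.105878
time_starttransfer: 0.137760
time_total: 0.137781"""

print(summarize(BEFORE, AFTER))
# speedup ~3.4, wait_before ~0.295 s, wait_after ~0.032 s
```

On these two samples most of the gain came from the server-wait portion (about 295 ms down to about 32 ms) rather than from the DNS lookup, which only accounts for about 63 ms of the difference.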

Conclusion

As can be seen, the network fixes produced roughly a three- to four-fold improvement in response time (0.469 s down to 0.138 s on the sample request above, about 3.4x). The Privex RPC server was ranging from 400ms to 600ms before the fixes were applied. After enabling connection restrictions and cleaning up TIME_WAITs, the response time was stable between 120ms and 150ms.

I hope these tips will help you improve your server's performance.

GIF Avatar by @stellabelle

Do you like what I'm doing for STEEM/Steemit?

Vote for me @someguy123 to be a witness - every vote counts.

Don't forget to follow me for more like this.

Have you ever thought about being a witness yourself? Join the witness channel. We're happy to guide you! Join in shaping the STEEM economy.

Are you looking for a new server provider? My company @privex offers highly-reliable and affordable dedicated and virtual servers for STEEM, LTC, and BTC! Check out our website at https://www.privex.io

Upvoting for the effort of making things better, but I can't agree with the tips. Name lookup as part of a benchmark is crazy long and irrelevant. The nginx config is a fairly generic optimization. We are using nginx as a reverse proxy, so `sendfile on` is irrelevant. Network-level optimizations are what the devices in front of your RPC node are for.

That's my endpoint under load, but queried from a VIP zone. No TCP tweaking on a host. 128GB RAM with RAID0 NVMe

BTW, yeah, I know that my node's performance for the general public is currently not as good as it used to be, but I'm still trying to serve some high-frequency requests to service providers (despite multiple notices of deprecation / making that endpoint obsolete).

I will switch endpoint somewhere in May to what I have currently under tests. Same hardware, new software, you will see the difference :-)

The name lookup part is strange yes, but it was staying between 400ms to 600ms regardless of that until I made the optimisations.

I found one of the most common reasons for RPCs being slow, was too many connections. I saw some IPs making 100s of connections regardless of the nginx rate limiting. I also saw an ungodly amount of TIME_WAIT (waiting to close) connections that were not being cleaned up.

I copied the `sendfile on` part from our Privex config. I was a little confused as to why it was there too, but I left it in as it didn't seem to be hurting anything.

If you take a look at the graphs, you can see the insane number of open connections that my RPC node, and the minnowsupport one, were suffering from. This is partly due to asshats using `get_block` over HTTP rather than websockets, thus opening 100s of connections (which Linux keeps in TIME_WAIT for 60 seconds by default... which is why the TIME_WAIT optimisation is needed). This does slow down public RPC nodes, because the network scheduler has to deal with 2000 connections even though fewer than 300 of them are actually active.

Yeah... I was considering disabling `get_block` entirely and using a separate, smaller and much more robust instance for that (pre-jussi times), but there were also troubles with vops. I'm planning improvements for June; there's no point in wasting time on temporary solutions.

Hi, will GraphQL at least save those poor RPC nodes from too many requests? Maybe websocket + GraphQL? Even Facebook serves clients using GraphQL. It's way better than a REST API.

Well, yes, using GraphQL would be a perfect way for interacting with various microservices, moreover it can live alongside standard REST routes.

Oh, by the way June turned out to be July or even August ;-( Time flies.

Well yeah :) time flies. I learned a lot from coding. Dude, you should totally have GraphQL set up :P so I can query :D I'm not technical enough to set one up. Do I need to be a witness? I thought of relaying RPC nodes to a GraphQL server, but that's just redundant, no? So better to be a witness?

No need to be a witness to run your own API endpoints, however, due to the fact that witnesses are compensated for block production, they are expected to provide infrastructure / services for the community.

If you have a good idea for a Steem-related service that needs to run its own high-performance API server, I can help you set that up.

Well, right now my quick fix is to keep all the RPC nodes in a JSON file and rotate to the next one in case of failure. That should be good for now. If I come up with another project or my app scales, I'll remember your offer ;)
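A minimal version of that approach might look like the Python sketch below: load a list of node URLs from a JSON file, try them in order, and move on when one fails. The file format (a plain JSON array of URLs) is an assumption, and `send` is a placeholder for whatever function performs the actual JSON-RPC request against a single node.

```python
import json


def load_nodes(path):
    """Read node URLs from a JSON file.

    Hypothetical format: a plain JSON array, e.g. ["https://node-a.example", ...].
    """
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def call_with_failover(nodes, send):
    """Return (node, result) from the first node whose `send(node)` succeeds.

    Network errors (URLError, timeouts) are OSError subclasses and malformed
    JSON raises ValueError, so either one moves on to the next node.
    """
    errors = {}
    for node in nodes:
        try:
            return node, send(node)
        except (OSError, ValueError) as exc:
            errors[node] = exc
    raise RuntimeError(f"all {len(nodes)} RPC nodes failed: {errors}")
```

Trying nodes in a fixed order keeps things predictable; shuffling the list first would spread load across public nodes instead of always hitting the first healthy one.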

@someguy123 As a witness you have a strong knowledge base about the stuff working behind the scenes, so perhaps you have some preferences / advice aimed at API users?

I mean, people using the API do not worry about performance, but perhaps your hints (such as the one quoted above) could make a difference? I'm thinking of a simple list of Do's and Don'ts.

Agreed. For people using an api, what would be helpful?
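One concrete "do" that follows from the connection problems described earlier in the thread: if you fetch many blocks over HTTP, reuse one connection instead of opening a new one per request, so the node isn't left with hundreds of sockets in TIME_WAIT. The sketch below uses Python's `http.client`, which keeps the TCP/TLS connection open between requests when the server allows keep-alive. This is HTTP keep-alive, not the websockets mentioned above, and it assumes the node accepts `get_block` with a block number as its only parameter.

```python
import http.client
import json
from urllib.parse import urlsplit


class KeepAliveRPC:
    """Send many JSON-RPC calls over a single persistent HTTPS connection.

    Method names and params are whatever the node accepts; calling
    get_block with [block_num] is an assumption, not a documented contract.
    """

    def __init__(self, url, timeout=10):
        parts = urlsplit(url)
        self.path = parts.path or "/"
        # No network I/O happens here; the connection opens on the first request.
        self.conn = http.client.HTTPSConnection(parts.hostname, parts.port or 443, timeout=timeout)
        self.next_id = 1

    def payload(self, method, params):
        body = {"jsonrpc": "2.0", "method": method, "params": params, "id": self.next_id}
        self.next_id += 1
        return json.dumps(body)

    def call(self, method, params=()):
        self.conn.request("POST", self.path, body=self.payload(method, list(params)),
                          headers={"Content-Type": "application/json"})
        response = self.conn.getresponse()
        # Read the whole body before the next request, or the connection can't be reused.
        data = json.loads(response.read())
        if "error" in data:
            raise RuntimeError(data["error"])
        return data["result"]

    def close(self):
        self.conn.close()
```

Usage would be along the lines of calling `rpc.call("get_block", [n])` in a loop on one `KeepAliveRPC` instance pointed at your node, then `rpc.close()` at the end.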

Upvoted myself because those comments from voting bots are super annoying. Nobody is scrolling below that trash.

So what's the tl;dr?

hardware firewall in front to deal with network level hassle, nginx with ssl termination, jussi for caching, then specialized nodes; appbase+rocksdb, enterprise nvmes and 640kB should be enough for everybody ;-)
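In that stack, jussi is the caching layer in front of the nodes. To illustrate the idea only (this is not jussi's implementation), here is a minimal in-process TTL cache keyed on method and parameters. The `fetch` function, the TTL value and the injectable clock are assumptions made so the sketch is easy to test.

```python
import json
import time


class TTLCache:
    """Cache JSON-RPC results for `ttl` seconds, keyed on (method, params).

    A minimal illustration of response caching, not jussi's actual code.
    """

    def __init__(self, fetch, ttl=3.0, clock=time.monotonic):
        self.fetch = fetch    # hypothetical: function(method, params) -> result
        self.ttl = ttl
        self.clock = clock
        self.entries = {}     # key -> (expires_at, result)

    def call(self, method, params=()):
        key = (method, json.dumps(list(params), sort_keys=True))
        now = self.clock()
        hit = self.entries.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # still fresh: the node is never asked
        result = self.fetch(method, list(params))
        self.entries[key] = (now + self.ttl, result)
        return result
```

A real deployment would likely use different TTLs per method, e.g. much longer for irreversible blocks, which never change, than for fast-moving data such as the head block.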

Soon in your blockchain (June, after my vacation)

Thanks. Unfortunately unable to upvote at this moment.


Thanks for this, I just tweaked the time_wait params on rpc.steemviz.com and saw a nice boost in response times :)

Really helpful tips for the housekeepers. I will surely support you as a witness for this act of selflessness. Thanks for the heads-up.

What you have done is important. RPC nodes greatly simplify Steem based apps!

I've voted for you as a witness, sir. Your activities in the Steemit world have a big impact on many people. Thanks for your work and God bless.

I have often wondered why more applications have not been running their own dedicated servers for their related services. With that kind of price tag, and RAM prices being what they are, this paints a much clearer picture of why.

Is there a reason why steemd is single-threaded, or do they simply have plans to make it take advantage of multicore CPUs in the future? To a layman like myself it seems rather odd that, with how many cores CPUs come with these days, software would still be single-core focused.

Thanks for including the disclosure of your relation with Privex.

Making multi-threaded applications is extremely difficult, even more so with things like replaying a blockchain since every block relies on the previous one to be correct.

Check this post for more info: https://www.quora.com/Why-is-multi-threading-so-damn-hard?share=1

I hope that the developers can improve this in some way. It's possible many of the issues are already solved in EOS, but may never make it to light in Steem.

Hello there @someguy123 thanks for sharing this. May I ask you some questions about being a witness?

Thanks for your time.

STEEM ON.

The specifications are getting more serious all the time, I am guessing fast quad channel memory would also be quite helpful.

I am curious, what is your opinion on the post from @steemitblog that it is not necessary to use a 512GB server for a full node. Specifically this part:

You can do it with less than 512GB, but your replay times will suffer and be highly dependent on disk I/O speeds. I tried with RAID 0 NVMe at 3500 MB/s and it took 3.5 weeks to do a replay.

As @themarkymark wrote, nobody believes @steemitblog's results.

I have never successfully gotten an NVME (without /dev/shm) server to replay without crashing. It is also slow as molasses.

At @privex we're experimenting with high quality NVME drives and locating CPUs with good single core performance, to try to make it more scalable. We think it may be possible to get half decent performance on a non-RAM node with 4 to 5 NVME drives in RAID 0, using XFS as the file system, storing the blockchain on a separate SSD, boot drive on a separate SSD, and various tweaks to XFS e.g. disable access time, move the journal onto the boot SSD so that it does not impact the NVME performance.

It is a lot more difficult than using RAM, but we're quickly approaching 512GB, and the next tier can triple in price...

From their publication I had the impression that the scaling issues were not as severe as they have been portrayed in other blogs. At some point I considered setting up a full node but I realized that I need to learn a lot more and the cost is now beyond my budget. I appreciate that you took the time to respond.

I think he touched on it with this:

....stale connections can eat RAM too. Having more RAM than necessary is always ideal.