The Three Laws of Robotics: a writer's whim or an accurate vision?

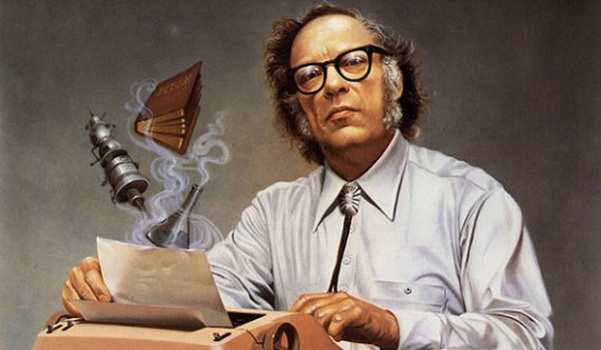

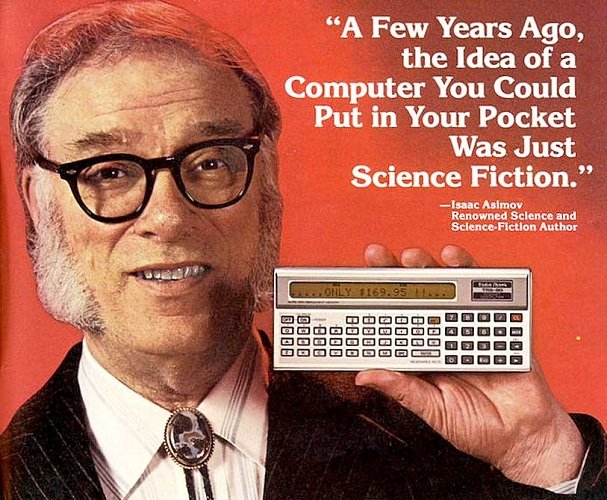

In 1942 the Russian-born American writer Isaac Asimov formulated his Three Laws of Robotics. Since then, these laws have often been cited as a template for dealing with robots. Today's highly developed field of robotics amounts to little more than advanced, extensive production lines, so the laws are more relevant to the creation of artificial intelligence. Progress in this area is proceeding at a surprising pace, and it seems to me that it will soon bring us to the point where interaction between robots and humans increases significantly.

The Three Laws are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Asimov later added a fourth law, the Zeroth Law, which takes precedence over the other three:

- A robot may not harm humanity, or, by inaction, allow humanity to come to harm.
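The laws form a strict priority ordering: each law yields to the ones above it. A minimal sketch of that ordering is given here, assuming that a robot could somehow predict which laws a candidate action would violate (a very strong assumption). The names `Action`, `violation_rank` and `choose` are hypothetical and serve only as illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """Hypothetical candidate action with its predicted law violations."""
    name: str
    harms_humanity: bool = False  # Zeroth Law (harm by act or by inaction)
    harms_human: bool = False     # First Law (harm by act or by inaction)
    disobeys_order: bool = False  # Second Law
    endangers_self: bool = False  # Third Law


def violation_rank(action: Action) -> tuple:
    """Return violations ordered from the highest-priority law to the lowest.

    Tuples compare element by element and False sorts before True, so a
    violation of a higher law always outweighs any combination of
    violations of lower laws.
    """
    return (
        action.harms_humanity,
        action.harms_human,
        action.disobeys_order,
        action.endangers_self,
    )


def choose(actions: list) -> Action:
    """Pick the candidate whose violations are least severe."""
    return min(actions, key=violation_rank)


# A robot is ordered to stay put while a person nearby is in danger.
stay = Action("stay", harms_human=True)  # obeys, but allows harm by inaction
rescue = Action("rescue", disobeys_order=True, endangers_self=True)
print(choose([stay, rescue]).name)
```

In this sketch the robot prefers to disobey the order and risk itself, because allowing a human to come to harm violates a higher law. The hard part in practice is not the ordering but filling in the flags, that is, deciding what counts as harm.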

When we are dealing with a simple robot that performs only a simple, predetermined task and does not enter into any closer relationship with a human being, the matter seems straightforward. Take a robotic vacuum cleaner that cleans by itself and decides which corner it should vacuum first: it is difficult to find any violation of the above laws here. Such a vacuum cleaner is fully obedient to its owner, does not hurt anyone, and does its work diligently.

The use of robots in the military can be problematic. One could argue that devices designed for reconnaissance, bomb disposal, or transport obey Asimov's laws. However, if we put a robot on the battlefield, the First Law, which says it may not harm people, is no longer fulfilled. If the army's only role were to save the lives of soldiers and civilians, it would be consistent with the laws. Nevertheless, the army's key role is to destroy enemies on the battlefield, and that is clearly contrary to them.

The ambiguity of the wording means that the laws may be misinterpreted or incorrectly applied. One problem is that they do not specify exactly what a robot is. As discoveries and technical innovations push the boundaries of technology, new areas of robotics keep emerging. There is also the question of what should be considered harmful to humans. Can psychological or emotional damage be just as harmful as physical damage? Could adopting robot children, or becoming attached to and making friends with intelligent androids, cause such harm?

Another important issue is that robots cannot actually follow these laws without significant progress in artificial intelligence. The goal of artificial intelligence research is often presented as the development of machines that can think and act rationally, like a human being. So far, however, the imitation of human behavior has not been thoroughly studied in this field.

The basic issue that Asimov did not take into consideration when constructing his laws is the possibility of co-existence on the basis of mutual respect. The result may be a situation in which some beings are subordinated to laws that were created by those who dominate them. Such power over artificial intelligence may lead to conflict between humans and robots, which could cause a rebellion and ultimately lead to extreme actions. The conclusion is that these laws should apply only to machines with low autonomy.

Nevertheless, the laws of robotics accompany many engineers, designers, and programmers who work in artificial intelligence and robotics. I must admit that Isaac Asimov turned out to be an unusual visionary who predicted the development of advanced technology over seventy years ago.

Good one. Thanks for sharing your thoughts on robotics. I have a flair for robots.