Was this post WRITTEN BY MACHINE? New Harvard AI Can Recognize That

Introduction

I recently read a not very favorable book review:

Tom Simonite does not keep it simple. He doesn't give you enough info on a subject to make the reading of the book enjoyable. He has over 400 pages of footnotes, so that is a way of getting your work for a subject out of the way. This book was so depressing to me, I can't even talk about it without feeling like I want to punch the kindle.

There would be nothing special or interesting about this review except for one fact: it was written by artificial intelligence.

Nowadays, AI can generate complex and elaborate texts that can convince a human they were written by another human. This creates a dangerous potential for generating fake news, reviews and social accounts. It also poses a threat to the economy of the Steem blockchain, which I already mentioned in one of my previous articles.


Live by the sword, die by the sword

What should we do to prevent such abuses then? It may sound ridiculous, but developing another AI is a decent solution.

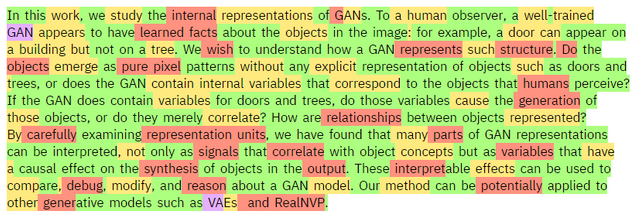

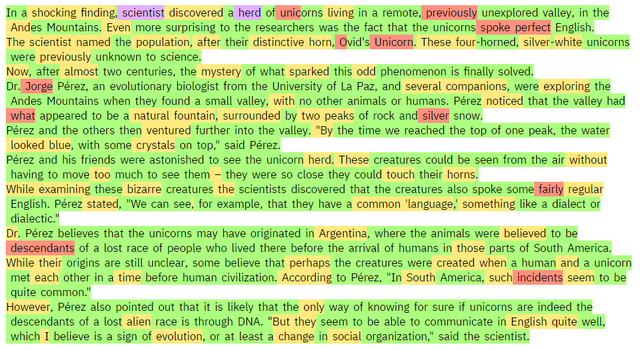

Researchers at Harvard University and the MIT-IBM Watson AI Lab have developed a new AI tool called the Giant Language model Test Room (GLTR), which is designed to detect patterns characteristic of machine-generated text.

When generating text, AI usually relies on statistics: at each step it picks one of the words most likely to follow the preceding ones, without focusing on a particular meaning. If a text contains many such predictable words, it was most probably written by a machine.

GLTR evaluates each word and highlights it according to how predictable it is. Green marks the most predictable words, purple the least predictable ones.
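To make the highlighting idea concrete, here is a minimal sketch of rank-based coloring. It is not GLTR's code: GLTR relies on a large neural language model, while this toy uses simple bigram counts. The function names are hypothetical, and the thresholds (top 10 green, top 100 yellow, top 1000 red, everything else purple) are an assumption based on how the public demo appears to color words.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count which word follows which in a whitespace-tokenized corpus."""
    follow = defaultdict(Counter)
    words = corpus.lower().split()
    for prev, nxt in zip(words, words[1:]):
        follow[prev][nxt] += 1
    return follow


def word_rank(follow, prev, word):
    """1-based rank of `word` among the words seen after `prev`, or None if unseen."""
    ranked = [w for w, _ in follow[prev].most_common()]
    return ranked.index(word) + 1 if word in ranked else None


def bucket(rank, thresholds=(10, 100, 1000)):
    """Map a rank to a color; the thresholds are assumed, not taken from GLTR's source."""
    if rank is None:
        return "purple"
    for limit, color in zip(thresholds, ("green", "yellow", "red")):
        if rank <= limit:
            return color
    return "purple"


def highlight(follow, text, thresholds=(10, 100, 1000)):
    """Color every word except the first by how predictable it was given the previous word."""
    words = text.lower().split()
    return [
        (word, bucket(word_rank(follow, prev, word), thresholds))
        for prev, word in zip(words, words[1:])
    ]


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat the cat ate the fish")
    for word, color in highlight(model, "the cat sat on the sofa"):
        print(f"{word}: {color}")
```

A text in which almost every word lands in the green bucket is the kind of text the tool would flag as suspicious; human writing tends to include more low-ranked, surprising words.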

To test the effectiveness of GLTR, Harvard students were asked to determine whether given texts were written by a machine or a human. Without the tool, they correctly identified only about half of the texts. With the help of GLTR, their accuracy rose to roughly 72%.

Out of curiosity, I scanned my own publications from Steem, and this little experiment confirmed my belief that I am human :)

Share your view

Do you think this is the right way to fight the misuse of artificial intelligence? I can't shake the impression that this is a kind of vicious circle leading nowhere. Additionally, I think this is only a temporary solution, as in the future machine-generated text will be so diverse, creative and non-linear that it will be impossible to spot it by analyzing it statistically. Unfortunately, GLTR and similar algorithms do not have much room for further improvement.

Here you can test GLTR for free.

@memehub think this may interest you.

Posted using Partiko Android

Dude, this will totally help with anti-AI-abuse measures :) Imma look into their GitHub

Do you have problems with AI abuse on your website @memehub?

Nah, I have a side project where I am working to build an AI to detect various types of abuse on Steem and lighten the workload of anti-abuse folks on Steem.

Interesting idea @memehub, such a project may be really useful considering the current amount of abuse and spam on Steem.

Hi @neavvy,

I wrote some stuff for a few clients covering AI and its application to storytelling. One of the most fascinating ventures I found was by Disney. They sought to develop a streamlined AI that can search the Internet and discern which texts satisfy the criteria for a story. Some of the criteria used were plot and main and supporting characters.

As for your question, I would say that the AI would tend to identify the most technically proficient, but least creative, of English writers as AI. My rationale is that AI programming is based on the Webster's and Oxford dictionaries. Logical applications of defined language would yield such a result.

An interesting topic indeed and one that could one day have us all communicating through an AI-constructed Internet tollbooth.

God bless,

Marco

Thank you for your comment dear @machnbirdsparo. I really appreciate it a lot. I'm sorry for such a late reply, but I was on holiday and had very limited access to the Internet.

Wow, I would never have come up with such an application of AI. I'm curious how effective that solution was.

Indeed, that may be the case

At the time I investigated this for a client (a year ago or so) there were issues, but the company was committed and did make some progress. I also saw it as a piece of the AI puzzle, not as a standalone venture.

Interesting... yeah. I think we need this in case some genius out there decides to create a business out of making essays, book reports or whatnot using AI. I hope that this goes mainstream soon.

Thank you for your comment dear @nurseanne84! I'm sorry for such a late reply, but I was on holiday and had very limited access to the Internet.

Yeah, I'm curious whether it will be a single genius or some company that decides to get into this business.

In terms of text generation, there was the recent situation with GPT-2 where the researchers held back part of their model for fear it was too good.

Detection is always an arms race though. If someone deploys an AI to detect generated text, then it can become a target for adversarial attacks. In situations where there is an immediate reward for getting generated text through and limited long-term consequences for being caught, an adversarial attack on a detector AI only needs to work for a short time. I've posted about this before in regard to Steem. I've also mentioned in regard to AI dApp projects ... if your detector AI is running where the public has cheap access to it, then it will easily be overwhelmed by an adversarial attack.

One potential solution is to put the detector AI behind a paid API - a penny or two per detection request might be sufficient to raise the cost of adversarial probing to the point most attackers won't bother. There are other countermeasures an API could take but they are not foolproof.
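The economics behind the paid API idea can be sketched with a few lines of arithmetic. All numbers and function names below are hypothetical, chosen only to illustrate the reasoning: an attacker who needs many probing requests to get one text past the detector has to earn more from that text than the probing costs.

```python
def probing_cost_cents(queries, price_per_query_cents):
    """Total cost of probing the detector, in cents."""
    return queries * price_per_query_cents


def attack_is_profitable(queries, price_per_query_cents, expected_reward_cents):
    """True if the expected reward exceeds what the probing costs."""
    return expected_reward_cents > probing_cost_cents(queries, price_per_query_cents)


if __name__ == "__main__":
    # Hypothetical figures: 10,000 probing requests, a reward worth 5 dollars.
    for price in (0, 1, 2):
        cost = probing_cost_cents(10_000, price)
        verdict = attack_is_profitable(10_000, price, 500)
        print(f"price {price} cent(s): cost {cost} cents, profitable: {verdict}")
```

With a free detector the attack costs nothing, so any reward makes it worthwhile. At one cent per request, the same 10,000 probes cost 100 dollars, which outweighs a small payout.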

As an aside, adversarial attacks aren't anything particularly special in AI. One approach to creating a generator is to have it compete against a detector (the adversary) in a setup called a GAN (Generative Adversarial Network).

As an amusing anecdote: some years back a colleague and I annoyed somebody at a trade fair by adversarially attacking their face detection/tracking system. We managed to fool it with two circles and a line hand-drawn on a piece of paper. Systems are much better now, but will still have vulnerabilities.

In the case of GPT-2, or any general-purpose language transformer, where the purpose is to just mimic human writing, I'm certain there are statistical solutions to find this type of text.

But if the text was created with (currently mythical) General AI, the text itself should be evaluated in terms of whether or not it is valuable. If it's valuable research, it doesn't matter if a person did the research or AI, again, assuming it was produced by a mind, not just a language transformer like these examples.

Thank you for your comment @inertia, that's a really interesting attitude.

Well, I think that this "statistical" kind of AI also does its own research, which is not very far from what we usually do - we are also a kind of neural network that usually forms its opinions based on what others say or write. At the current level maybe it is closer to just transforming the text of other people, but I think that as this concept evolves (not necessarily to the level of "mythical" General AI) it will be able to generate quality publications and present a unique opinion.

As long as you admit that a particular text is machine-generated, I see nothing wrong with publishing and reading such text.

Yes. I think that's important. It's good to know the background of the author. If the author is reluctant to discuss their background, then they must be worried about their safety. For the time being, we can assume it's political pressure. But some day in the not too distant future, we will have to consider that an anonymous, unverified author might be on the wrong side of politics, is AI, or both.

That's right, unfortunately.

That's one of the reasons I'm publishing my autobiography online, daily, all over the place.

Thank you for sharing your opinion @eturnerx. I appreciate it a lot :)

I totally agree with you, but I suppose GLTR is more of an experiment than a serious tool. As I mentioned, it only increases the chance of spotting fake text, and I believe that in about 6-12 months machine-generated texts will be so advanced that GLTR will become helpless. And unfortunately I see no room for further development and improvement of this tool.

That's really interesting. As far as I know, until the launch of the iPhone X, face recognition systems were generally easy to trick with a photo. Ultimately Apple introduced a special sensor able to recognize a face in three dimensions, making it resistant to photos. However, I think you can still fool it with a precise mask.

I think it's an arms race. While GLTR might not be the best detector in the future, something else will be.

I agree, but the concept of this tool will have to be reworked.

Hi @neavvy,

Thanks for the memo and for sending me the link to this invaluable A..rt..I..cle. First things first. @NEAVVY, this article is too HEAVVY for me to comment on, but it aroused much more interest. After studying the basic concept deeply, I will surely give my comment, because my mind has developed that much interest in this topic, albeit late. Really sorry about that. Will catch you soon with a strong comment.

Haha :) No problem @marvyinnovation, I really appreciate your efforts!

PS. Did you give up writing articles on Steem? I've noticed there hasn't been any new post on your account for two months.

No @Neavvy, not like that. I wrote a lot on Steemit, but no one noticed it except @crypto.piotr and @nathanmars7. These two gentlemen were nice enough to support me when I needed it, but for others STUFF is a must. I don't have that sort of STUFF to attract others when writing content. That is my main drawback, but I didn't get discouraged or stop writing because of it. I will surely write if I encounter any interesting topic.

BYE FOR NOW, @neavvy.

hi @marvyinnovation

I'm glad to be called a gentleman :) I'm also sending you an email. Please check it out.

Yours

Piotr

Oh, okay, I get it @marvyinnovation

Extremely happy that this is finally here. Thank you very much for sending the memo. I immediately upvoted and resteemed. There is too much reverence and awe directed at AI, which I think for a large part comes from the fiction we have created (much of which is more science fantasy than sci-fi).

Funny thing is that many of the machine-generated texts sound a lot like articles from BuzzFeed, HuffPost and other similar mass media that massively helped to usher in clickbait and 21st century yellow journalism. You might find this interesting: https://forge.medium.com/the-urgent-case-for-boredom-8dd92a891754 The reason I link this is that I see it as a solution to many of the problems regarding the advent of AI. Just relax, let go and stop engaging with them. I'm a libertarian at heart and I greatly connect with the concept of Wu Wei: http://www.myrkothum.com/wu-wei

Just stick with what matters to you the most and live life based on principles. Don't care much about random stuff you see or hear online or in real life.

PS: Add the #stem tag to the post :-)

Thanks for the link on wu wei, @vimukthi. It reminds me of the zen saying, "Sitting quietly doing nothing, spring comes and the grass grows by itself."

https://www.dailyzen.com has been a great source of such quotes for me. www.gardendigest.com/zen/quotes.htm had a valuable collection too.

Thanks for the links. Will check them out.

These sites are a really great source of inspiration @vimukthi, thanks for sharing!

stemgeeks picks up posts tagged with science and technology, and a couple more :)

I didn't know this dApp, but it seems really cool @abh12345.stem :)

Thank you for your comment dear @vimukthi! I'm sorry for such a late reply, but I was on holiday and had very limited access to the Internet.

Someone in the comments under this post even said that most news agencies like Reuters use AI to generate their texts.

Maybe that's indeed the best attitude.

Okay, I'm gonna add it in the future :)

AI has progressed to such an extent that it would be impossible for us to compete with it. We won't be able to fight against it. Only AI would be able to counter AI. We have no choice but to develop AI in order to fight against it. Ultimately, we would become unnecessary for the survival of AI.


Thank you for your comment dear @akdx! I'm sorry for such a late reply, but I was on holiday and had very limited access to the Internet.

Indeed, that's already the case.

Exactly.

I do not worry too much about machine-generated text. I mostly work on Steem and don't think there is too much of it here. The comments I get on my posts are from humans as far as I know.

I am in some fb groups for a few controversial subjects. It's pretty obvious when paid opposition shows up. I do not know if the words are machine generated, but based on how they read, the "real" people see through them quickly.

I think my kids read lots of AI-generated texts through websites like BuzzFeed and such.

I was wondering how they could possibly come up with so much content, and so many quizzes and such, until I realized it was all probably done by AI.

I am happy about it, as I think it's better for them to be employing AI than to be having mindless journalists, but it's still scary 'cause my kids are soaking up all that information!

Wow, that's a brave theory @metzli! Don't you think that they just hire an army of cheap journalists?

It’s probably a combination.

The other day my kid was working on a “never ending Harry Potter quiz”. There is no way a person wrote that (I hope).


Exactly.

Thank you for your comment dear @fitinfun! I'm sorry for such a late reply, but I was on holiday and had very limited access to the Internet.

That's right, but we need to consider the fact that AI-generated text is currently at a very basic level. Additionally, such programs are usually not open source (people do not have access to the code), so you would need to be really advanced at machine learning (probably at an academic level) to develop something like that on your own. But when this technology gets more adoption and programmers with basic skills are able to use it, they may use it in the wrong way.

Dear @neavvy

After reading your publication and some of the comments, the only thing that came to my mind was the first Terminator film. If everyone remembers, its ending is the beginning of the destruction of humanity, because what remained after the Terminator was destroyed was his arm. The analogy with what you describe is that we humans write with our hands, and machines write with special equipment made for that purpose...

That mechanical arm contained everything that started it all. You could put it this way: man writes with his hand, and the machine wrote the future with its cybernetic hand.

Thank you for your comment dear @lanzjoseg. I really appreciate it a lot. I'm sorry for such a late reply, but I was on holiday and had very limited access to the Internet.

Yeah, this analogy is really on point.

First off, I am blown away by how far we've come, whether it is used in a good or a bad way. This is what technology has done, and it is unimaginable what else we can do in the future. I don't know which is more amazing, the creators of these AI-generated things or the counter-creators. I think this works best for English language, no?

I think there is still a limit to anything AI, as much as there is potential.

"I think this works best for English language, no?"

Interesting point. I'd venture a guess that it's largely independent of the particular language. I'd think if you trained it with a made up language it would still work too. I'd bet it was tested mostly in English however, and that the juiciest cherries picked were made in English.

All wild speculation on my part though ;)

I tried the Filipino language, and it tries to group letters in a way that makes sense in English. It would probably take longer before it can be used for other languages.


Of course. I was thinking of the general case. Like I'm sure this one was made in English, but I thought the 'this' in "I think this works best for English language, no?" was the technique not this particular implementation.

All fascinating stuff. I think this leads up to AI that tries to fool not only humans, but also other AI, into thinking it is human.

Thank you for your comment dear @leeart. I really appreciate it a lot. I'm sorry for such a late reply, but I was on holiday and had very limited access to the Internet.

Haha, I think they both had to put in enormous effort to achieve such an effect.