The Evolution of AI Tongue Diagnosis: From Algorithm Kindergarten to Top-Tier Hospitals

Imagine AI as a bunch of toddlers just learning to walk—tongue diagnosis would be their first “medical trick” picked up in kindergarten. Back in the early days, these baby AIs couldn’t even tell a tongue from a nose. Show them a blurry tongue pic, and they’d stare with their data eyes, asking, “What’s this? It’s red—strawberry?” Don’t laugh—when a patient opened their mouth, revealing a tongue coated like a cream cake, the AI would crash: “This isn’t in my library!”

Kindergarten: What’s a Tongue—Can You Eat It?

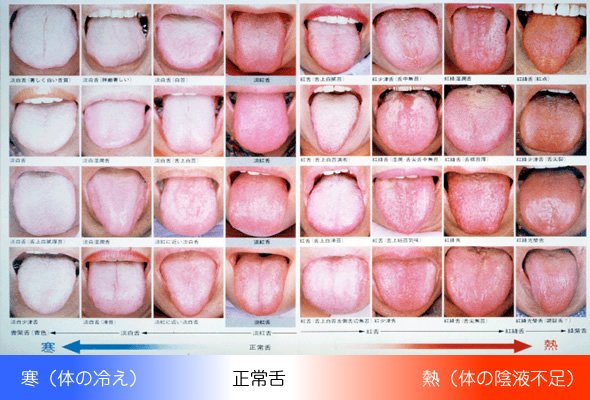

At the start, AI tongue diagnosis was basically a “picture book game.” Experts fed it a few hundred tongue images labeled “yin deficiency” or “damp heat,” and it thought it was a traditional Chinese medicine (TCM) master. In reality, its diagnoses were like rolling dice. Patients got a “tongue coating report” and scratched their heads: “Do I need herbs or ice cream?” Still, these algorithm toddlers were stubborn. Scientists threw in more data, thousands of images, and sprinkled in some convolutional neural network “magic.” Soon it could recognize tongue color and coating thickness. By kindergarten graduation, it bragged, “I know you ate spicy noodles yesterday!” That was still miles from a real TCM doc, but at least it had stopped mistaking tongues for strawberries.

Elementary School: Tongue Diagnosis Grows Up

In “elementary school,” AI tongue diagnosis started shaping up. Thanks to deep learning, it went beyond surface-level looks, spotting cracks and tooth marks on tongues. Big data came to the rescue too: thousands of cases and tongue images were stuffed into its “backpack” so it could work out the patterns. Now it wasn’t just saying “your coating’s thick.” It mimicked old TCM docs: “Hmm, pale red tongue, thin white coating, pulse… oh wait, I can’t check pulses yet.” It slipped up sometimes, but it could toss out decent tips like “cut the greasy stuff, drink more water.” Patients went from “what’s this nonsense” to “hey, makes sense.”
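For the curious, here is roughly what that convolutional “magic” looks like when it goes hunting for a crack. This is only a toy sketch: the tiny grayscale `patch`, the hand-picked `crack_filter`, and the helper `conv2d` are all made up for illustration, and a real network learns thousands of such filters from data instead of having one typed in by hand.

```python
# Toy illustration only: one hand-written filter, not a trained CNN.

def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 4x5 grayscale patch: bright tongue surface (9)
# with a dark vertical crack (1) running down the middle column.
patch = [
    [9, 9, 1, 9, 9],
    [9, 9, 1, 9, 9],
    [9, 9, 1, 9, 9],
    [9, 9, 1, 9, 9],
]

# Hand-picked filter: responds strongly when a dark column
# sits between two brighter columns.
crack_filter = [
    [1, -2, 1],
    [1, -2, 1],
    [1, -2, 1],
]

response = conv2d(patch, crack_filter)
for row in response:
    print(row)
```

The response map peaks in the column where the filter is centered on the dark stripe and goes negative on either side, which is the “there’s a crack here” signal a later layer could pick up on.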

Middle School: AI Wants to Be the Star

By “middle school,” AI tongue diagnosis got cocky. With multimodal analysis, it didn’t just look at tongues; it combined symptoms, temperature, and patient stories for a “full diagnosis.” No longer content as a sidekick, it wanted to write prescriptions: “Yellow, greasy coating, so damp heat. Here’s some Huanglian Jiedu (detox) decoction!” TCM experts chuckled. Its accuracy was climbing enough to impress even veterans, but it was still a reckless teen. A tongue stained red from spicy food could still fool it: it once called a hotpot tongue “liver fire” and nearly sentenced a patient to bitter herbs.
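To see why the patient’s story matters, here is a toy sketch of how a multimodal system might blend its evidence so that a hotpot tongue doesn’t get the final word. Everything in it is an assumption made up for illustration: the `Visit` fields, the weights, the 37.5 °C cutoff, and the `heat_score` function are not taken from any real diagnostic system.

```python
# Toy late-fusion sketch. Every weight, threshold, and field name
# here is an illustrative assumption, not a clinical rule.
from dataclasses import dataclass


@dataclass
class Visit:
    tongue_heat_score: float   # 0..1, hypothetical output of an image model
    symptom_heat_score: float  # 0..1, hypothetical score from reported symptoms
    temperature_c: float       # body temperature in Celsius
    ate_spicy_recently: bool   # taken from the patient's own story


def heat_score(v: Visit) -> float:
    """Blend the evidence into one 0..1 score, trusting the tongue less after spicy food."""
    tongue_weight = 0.2 if v.ate_spicy_recently else 0.6
    fever_score = 1.0 if v.temperature_c >= 37.5 else 0.0
    rest = 1.0 - tongue_weight  # remaining weight, split between symptoms and fever
    return (
        tongue_weight * v.tongue_heat_score
        + rest * 0.75 * v.symptom_heat_score
        + rest * 0.25 * fever_score
    )


# A hotpot fan: very red tongue, but few symptoms and no fever.
hotpot_fan = Visit(
    tongue_heat_score=0.9,
    symptom_heat_score=0.1,
    temperature_c=36.6,
    ate_spicy_recently=True,
)

tongue_only = hotpot_fan.tongue_heat_score
fused = heat_score(hotpot_fan)
print(f"tongue only: {tongue_only:.2f}, fused: {fused:.2f}")
```

Judged by the tongue alone, the hotpot fan looks alarming. Once the spicy dinner, the mild symptoms, and the normal temperature are weighed in, the fused score drops well below the tongue-only one, and the bitter herbs stay on the shelf.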

Top-Tier Hospitals: Coming of Age

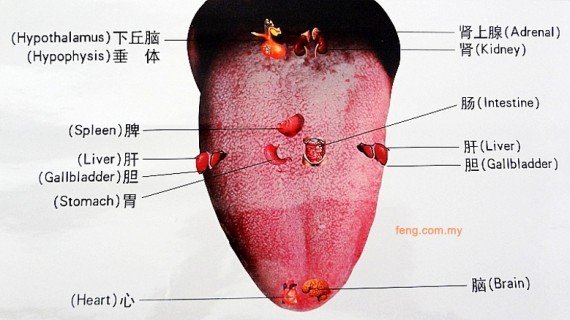

Finally, AI tongue diagnosis “grew up” and stepped onto the stage of top-tier hospitals. Now it pulls hundreds of features from tongue images and pairs them with genetic and lifestyle data for sharper diagnoses. Coating thickness, tongue color, shape: it sees it all, and it even tries to flag disease risk years ahead. Better yet, it’s a team player, syncing with CT scans, blood tests, and other Western diagnostics to form a “super medical squad.” TCM docs now treat it as a trusty aide. Who’d say no to an intern that never sleeps and pulls up 100,000 cases on demand?

Sure, it’s not flawless. A spice-lover’s tongue might get flagged as “overheated,” and rare cases can stump it. But isn’t that its charm? From kindergarten stumbles to hospital strides, AI tongue diagnosis has picked itself up after every fall and come out stronger.

Future: Will Tongue AI Fly?

Picture this: future AI tongue diagnosis as a tiny flying robot. It zips over, says “open wide,” scans your tongue in three seconds, and hands you a health report: “Stop staying up—your liver’s crying.” It might even chat with your smart fridge: “No more spicy snacks, he’s got damp heat!” From algorithm kindergarten to top-tier hospitals, AI tongue diagnosis keeps evolving. It’ll never replace the warmth of a TCM doc’s “look, listen, ask, feel,” but it’s breathing new life into this ancient art in the digital age. Isn’t that a quirky kind of legacy?