The Paradigm of Social Dispersed Computing and the Utility of Agoras

Social Dispersed Computing

What is socially dispersed computing? It is an edge oriented computing paradigm which goes beyond cloud and fog computing. To understand socially dispersed computing we first have to discuss dispersed computing and how it differs from the previous paradigm of cloud and fog computing. The current trend toward decentralized networks which we first saw with the peer to peer technologies such as Napster, Limewire, Bittorrent, and later with Bitcoin, have brought to us an opportunity to conceptually new paradigms. The original model most people are familiar with is the client server model which was very much limited in that the server was always vulnerable to DDOS attack. The client server model has never been and could likely never be censorship resistant.

In the client server model the server could simply shut down as was the case with Bitconnect or it could be raided. The server could also be shut down by hackers who simply flood the site with requests. As we can see from the problems the client server model presented we discovered the utility of the peer to peer model. The peer to peer model was all about censorship resistance and promoted a network which was to have no single point of failure (single point of attack) which could be result in the shutdown of access points to the information. One of the first applications for these peer to peer networks was file sharing networks and networks such as Freenet/Tor etc. This of course eventually evolved into the Bitcoin which ultimately led to the development of Steem.

In dispersed computing a concept is introduced called "Networked Computation Points". An NCP can execute a function in support of user applications. To elaborate further I'll offer something below.

Consider that every component in a network is a node. Now consider that every component node is an NCP in that it can execute some function to support some user application. If we think of for example a blockchain then we know mining would fit into this category because it is both a node in the network and it also can execute a function in support of Bitcoin transactions. Why is any of this important? Parallelism is something we can gain from dispersed computing and please note that it is distinct form concurrent computing. When we rely on parallelism we can reap the benefits in terms of performance when executing code by breaking it up into many small tasks which can be performed across many CPUs.

EOS attempts to leverage parallelism specifically to enable it's performance boost. The benefit is speed and flexibility. Think for example of the hardware side also with FGPAs which can do similar tasks of a microprocessor. FGPAs (not ASICs) which unlike ASICs would provide generalized flexible parallel computing. Consider that just like with mining a company could add more and more FGPAs to scale an application as needed.

To understand Social Dispersed Computing we have to make note of the fact that there are other users at any given time. For example the other users in the network participate to provide resources to the network for the benefit of other users whilst using the network. So in Steem for example as you add content to Steem you are adding value to Steem in a direct way, but also in a dynamic way. The resources on Steem also can adapt dynamically to the demand provided that the incentive mechanism (Resource Credits) works as intended.

EOS as an example DOSC (Dispersed Operating System Computer)

Because EOS seems to be the first to approach this holistically I will give credit to the EOS network for pioneering dispersed computing in the crypto space. All resources are representable by tokenization in a dispersed computing network. EOS and even Steem have this. Steem has it in the form of "Resource Credits" which represent the available resources on the Steem network. If more resources are needed then theoretically the resource credits could act as an incentive to provide these resources to the Steem network. This provides a permanent price floor to Steem represented as the amount of Steem which would have to be purchased in order to have enough resources to run Steem (if I have the correct theoretical understanding). This would put Steem on a trajectory toward dispersed computing.

Operating systems typically sit between the hardware and software as a sort of abstraction layer. This traditionally has been valuable because programmers don't have to directly speak to the hardware and hardware designers don't have to directly communicate by their designs to the programmer. In essence the operating system in the traditional model is centralized and made by a company such as Microsoft or Apple. This centralized operating system typically runs on a device or set of devices and provides some standard services such as email, a web browser, and maybe even a Bitcoin wallet.

Typically the most valuable or high utility software people consider on a computer is the operating system. In our smart phones this is Android OS and in PCs it may be Windows or Linux. This is of course thrown on it's head under the new paradigm of dispersed computing and the new conceptual model of the "decentralized" operating system. EOS is the first to attempt a decentralized operating system using current blockchain technology but the upcoming technology easily eclipses what EOS could do. Tauchain is a technology which if successful will leave EOS in the stone age in terms of what it will be able to do. EOS while ambitious also has had it's problems with regard to the voting mechanisms and the ease at which collusion can take place.

To better understand how decentralized operating systems emerge learn about:

- The ExoKernel Exokernels : An Operating System Architecture for Application Level Resource Management

- OSKit

If we look at OSKit we see that it is the tools necessary for operating system development. If we look at Tauchain we realize that it is strategically the most important tool for the development of a decentralized operating system being provided in the form of TML (a partial evaluator). If we think of the primary tool necessary to develop from we have to initially start with a compiler. A compiler generator is more like what TML allows with it's partial evaluator. More specifically it is the feature of Futamura projection which can provide the ability to generate compilers.

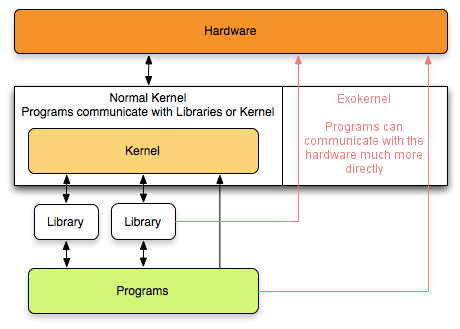

If we look at the next most important part of an operating system it is typically the kernel. Let's have a look at what an exokernel is:

Operating systems generally present hardware resources to applications through high-level abstractions such as (virtual) file systems. The idea behind exokernels is to force as few abstractions as possible on application developers, enabling them to make as many decisions as possible about hardware abstractions.[2] Exokernels are tiny, since functionality is limited to ensuring protection and multiplexing of resources, which is considerably simpler than conventional microkernels' implementation of message passing and monolithic kernels' implementation of high-level abstractions.

By Thorben Bochenek [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons

From this at minimum we can see that an exokernel is a more efficient and direct way for programmers to communicate with hardware. To be more specific, "programs" communicate with hardware directly by way of an exokernel. We know the most basic function of a kernel in an operating system is the management of resources. We know in a decentralized context that tokenization allows for incentives for management of resources. When we combine them we get kernel+tokenization to produce an elementary foundation of an operating system. In a distributed context we could apply a decentralized operating system in such a way that the network could be treated as a unified computer.

Abstraction is still important by the way. In an operating system we know the object oriented way of abstraction. Typically the programmer works with the concept of objects. In an "Application Operating Environment" an "Application Object" can be another useful abstraction. Abstraction can of course be taken further but that is for another blog post.

The Utility of Agoras

Agoras+TML is interesting. Agoras is the resource management component of what may evolve into the Tau Operating System. This Tau Operating System or TOS is something which would be vastly superior to EOS or anything else out there because of the unique abilities of Agoras. The main abilities have been announced on the website such as the knowledge exchange (knowledge market) where humans and machines alike can contribute knowledge to the network in exchange for the token reward. We also know that Agoras will have a more direct resource contribution incentive property in the form of the AGRS token so as to facilitate the sale or trade of storage, bandwidth or computation resources.

The possible (likely?) emergence of the Tau Operating System

In order for Tauchain to evolve into a Dispersed Operating System Computer it will need an equivalent to a kernel. Some means of allowing whomever is responsible for the Tauchain network to control and manage the resources of that network. If for example the users decide then by way of discussion there would be a formal specification or model of a future iteration of the Tauchain network. This according to current documents is what would produce the requirements for the Beta version of the network to apply program synthesis. Program synthesis in essence could result in a kernel and from there the components of a Tau Operating System could be synthesized in the same way. Just remember that all that I write is purely speculative as we have no way to predict with certainty the direction the community will take during the alpha.

References

- García-Valls, M., Dubey, A., & Botti, V. (2018). Introducing the new paradigm of Social Dispersed Computing: Applications, Technologies and Challenges. Journal of Systems Architecture.

- Karne, R. K., Jaganathan, K. V., Rosa Jr, N., & Ahmed, T. (2005, October). DOSC: dispersed operating system computing. In Companion to the 20th annual ACM SIGPLAN conference on Object-oriented programming, systems, languages, and applications (pp. 55-62). ACM.

Web:

https://www.darpa.mil/program/dispersed-computing

https://www.usenix.org/conference/hotos15/workshop-program/presentation/vasilakis

Every network is computer. And each computer is a network. ''That's all that an ordinary computer does -- move bits from place to place.'' - Seth Lloyd. Can't escape spatiality. It underlies each and every possible architecture scaling, no matter if one jams all nowaday computation power in a grain of salt or disperces it over interstellar distances. spacetime tradeoff.