The Case of (Stafford) Beer

(cross-posted from my Substack)


Thoughts after reading “Brain of the Firm”

The 1970s-era “management cybernetics” work of Stafford Beer has been getting some buzz lately. I’ve been curious about it from the perspective of what it might have to say about bureaucracy and the effectiveness of our government systems. But the high-level description of “Viable Systems Theory”, which holds that any viable system has certain properties, also seemed like it might apply to thinking about how games work and to some of the other big ideas I’ve been pondering. So I decided to get a copy of Brain of the Firm from a library[1] and see what it was all about.

Overall Impressions

I have mixed feelings about the book. The core premise, where he suggests a model of management based on the human nervous system, seems hard to follow and harder to implement. But along the way, often in asides or throwaway comments, he touches on ideas that seem really compelling. The whole thing is written in a somewhat guru-ish manner, longer on assertions of what is clear to him based on his expertise than it is on demonstrations of how things work in practice. As I was reading I thought of the old saying “If you can’t dazzle them with brilliance then baffle them with bullshit” and wondered if that was operating – there were things I know I didn’t absorb, but I’m not sure if it’s because I wasn’t up to the task of grasping them in the form presented or if the ideas in those parts weren’t solid enough to be grasped. (And I took electrical engineering courses in college, so I think I’m more likely than the average person to be able to follow ideas related to Control Theory).

On Language

Beer views his field as “cybernetics”, which to most people today means “computers” or even mechanical/biological cyborgs, but which was originally just supposed to be about how things are controlled (based on a Greek term for steering a ship). The idea is that control mechanisms like thermostats or the electronics in a CNC milling machine are specific instances of the broad category that also includes things like how organisms control their internal processes or how businesses or governments control theirs, and “cybernetics” is the term for the scientific study of this category of things. This seems to me like it was a branding effort that failed – some people wanted to stake a claim to expertise over the topic by asserting that their preferred neologism was the correct one to use, but it didn’t really catch on so they ended up putting themselves in a discourse bubble.

I found Beer’s choice of language somewhat frustrating. I can forgive an occasional flourish like introducing “anastomotic reticulum” as a general term for the complex branching-and-recombining network of connections between input and output in organic decision-making. In other places, though, he’s frustratingly bland. He names the parts of his model System 1, System 2, System 3, System 4, and System 5, and he refers back to ideas he presented in a diagram or illustration by figure number, as if seeing some boxes with arrows between them to show how System 4 works would sear the term “Figure 25” into your mind for future reference.

What’s the big idea?

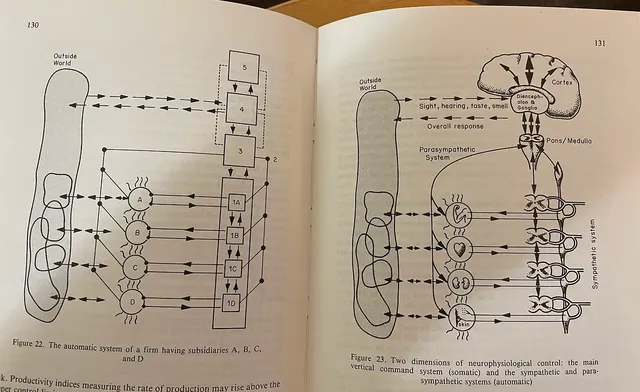

The core argument of the book is that any viable system, from a cell to an animal to a human institution, has certain common properties, and that it’s therefore useful to understand such systems by analogy to a well-known viable system and the control system that operates it: the human nervous system. He breaks this down into five systems. The lowest of these (System 1) is itself a recursive instance of a viable system. In a human, System 1 might be organs like the heart or lungs (which have their own substructures), and in a business it might be the divisions one level down in the hierarchy.
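To make the recursion concrete, here is a minimal Python sketch. It doesn’t attempt Beer’s own notation. The class name `ViableSystem`, its fields, and the example units are hypothetical. The only idea it encodes is that System 1 consists of units that are themselves viable systems, so the structure nests to arbitrary depth.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ViableSystem:
    """Hypothetical sketch: a viable system whose System 1 is a list of viable systems."""

    name: str
    # System 1: the operational units, each a viable system in its own right.
    system1: list[ViableSystem] = field(default_factory=list)

    def depth(self) -> int:
        """Number of nested levels, counting this one."""
        return 1 + max((unit.depth() for unit in self.system1), default=0)

    def walk(self, level: int = 0):
        """Yield (level, name) pairs, top of the hierarchy first."""
        yield level, self.name
        for unit in self.system1:
            yield from unit.walk(level + 1)


# Illustrative nesting only; Systems 2 through 5 are not modeled here.
body = ViableSystem("human", [
    ViableSystem("heart", [ViableSystem("cardiac muscle cell")]),
    ViableSystem("lungs"),
])

for level, name in body.walk():
    print("  " * level + name)
```

A business version would swap in a firm, its divisions, and the plants inside each division. Only System 1 recurses in this sketch.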

At the level of metaphor this seems interesting. Translating it into practice seems a lot trickier. In many places he seems to handwave away any “but how do you actually do that?” questions by saying that good Operational Research will be able to identify the particulars in any given situation. I think he’s also a bit inconsistent about whether this is a descriptive or a prescriptive model. On one hand, he claims that any viable system already works like this, if only informally, so it’s simply helpful to map particular systems onto this model to understand them better. On the other hand, he seems to view the efforts he participated in to organize the Chilean economy along the lines of this model as truly revolutionary. Now, it’s not completely paradoxical for something to be both a description of how things already work and a revolutionary change (you could argue that’s what Quantum Mechanics did for physics). But the section of the book that discusses his Chilean experience is pretty thin on nuts-and-bolts demonstrations of how organizing the analysis around his five systems provided valuable insight or improvements. You’d think he would mention that kind of thing if he had a lot of examples. In practice it seems like the nationwide communication system his team set up to work as “System 3” was useful, but wouldn’t that be useful for almost any system?

My thoughts about the big idea

When presenting the recursive nature of the system, Beer talks about the different levels speaking different meta-languages. The viable System 1 at the bottom of the hierarchy doesn’t experience the higher levels as something that comes in directly through its own big-decision-making System 5, but as part of its environment. Beer doesn’t use these words, but the idea that has crystallized for me is this: the brain doesn’t talk to the heart in terms of current muscle tension or whether valves are open or closed. There are things that are, and should be, internal to the heart, and the heart/brain interaction isn’t (directly) about those things. That suggests to me that whatever sits “at the top” of a healthy hierarchical structure shouldn’t imagine it can zoom down to the lowest levels, bypassing or cutting out the things that are already “at the top” of those substructures. On the meta-language issue, Beer cautions against assuming there will be a lingua franca spanning everything, because that can lead to warped analysis (especially since “money” will tend to suggest itself as the best lingua franca. That can produce bad situations, such as a department over-focusing on cost-cutting and degrading its capacity to deliver results other departments need, if cost-cutting is the only money-oriented lever it has).
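One way to picture the brain/heart point is with a toy sketch. This is not physiology and not from the book. The hypothetical `Heart` keeps its internals private and manages them itself. The hypothetical `Brain` can only send coarse requests and read a coarse status. The rate numbers are invented.

```python
class Heart:
    """Toy unit: internal variables stay inside; only a coarse status crosses the boundary."""

    def __init__(self) -> None:
        self._rate = 60            # invented internal variable (beats per minute)
        self._valves_open = True   # internal detail the brain never reads

    def request(self, demand: str) -> None:
        """The heart's own management turns a coarse demand into internal changes."""
        if demand == "more output":
            self._rate = min(self._rate + 20, 180)
        elif demand == "less output":
            self._rate = max(self._rate - 20, 40)

    def status(self) -> str:
        """The only thing the level above gets to see."""
        return "strained" if self._rate > 150 else "ok"


class Brain:
    """Toy higher level: it speaks in demands and statuses, not valves and muscle tension."""

    def __init__(self, heart: Heart) -> None:
        self.heart = heart

    def exercise(self) -> str:
        self.heart.request("more output")
        return self.heart.status()


brain = Brain(Heart())
print([brain.exercise() for _ in range(5)])  # ['ok', 'ok', 'ok', 'ok', 'strained']
```

The design choice is plain encapsulation. If `Brain` reached in and set `_rate` or `_valves_open` directly, the top of the hierarchy would be bypassing the heart’s own management. The paragraph above warns against exactly that pattern.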

Personally, I wonder if basing the model on the human nervous system provided some intellectual shortcuts. Since humans already have “the parts” that can do these things, ideas like “we can put people in a room with lots of data and a good UI” can seem like a good answer to how you build a System 5 that does what a System 5 needs to do. It might have been useful to think about how the systems map onto some other “viable system” the model ought to work for, such as a tree. Without the option of saying “a human can do that,” the exercise might have forced a clearer account of what each system does and how it works. Humans are simultaneously the thing we’re all most familiar with and the thing that’s most mysterious to us, so they may not be a good choice as the sole example. There’s also the issue that the model implies Systems 2 through 5 should not be independently viable, so it may be a problem to have a setup where they are, or at least are seen to be (e.g. having System 5 be synonymous with “El Presidente”). Some things he says in the post-Chile section of the book suggest he may have explored some of these themes in later works.

Maybe the real treasure was what we picked up along the way?

I’m not sure how useful the main model actually is, but some things mentioned in the course of the book seemed like they could be the tips of important icebergs. For example, Beer uses his “algedonode” to illustrate some concepts. It’s a mostly hypothetical electro-mechanical device that is basically a random variable with buttons to “reward” or “punish” its behavior, shifting the weighting of its outputs. Discussing it, he writes:

> Meanwhile, let us return to the adaptive machine. We have already seen how the probability transfer function is changed by [reward/punishment] feedback so that one bulb lights more regularly than the other. If the environment of this system, which is its next senior hierarchic level, keeps changing its mind about the utility associated with the red and green outcomes, then the machine will follow these changes. But if we take the limiting case, when the environment settles for red, the machine eventually adapts completely to red – because all ten of its contents are [set to output the same color]. This is the analogue of overspecialization in a biological evolutionary situation. The system is so well, so very thoroughly, adapted to its environment that if this should suddenly and grossly change the system would have lost the flexibility required for adaptation. We can stop rewarding our machine and try to punish it, but the slide has rusted in.

> This state of affairs illuminates the need for a constant flirtation with (what we usually call) error in any learning, adapting, evolutionary system. In the experimental version of the machine, the one I actually built, two of the ten contacts by-passed the transfer function – one always lit green, and one always lit red. Thus, in a fully adapted red outcome, the machine still made mistakes for 10 per cent of the time by exhibiting a green light. ... The vital point is that mutations in the outcome should always be allowed. Error, controlled to a reasonable level, is not the absolute enemy we have been taught to think it. On the contrary, it is a precondition for survival. Immediately the environment changes and begins to favor the green outcome, there is a chance-generated green result to reinforce, and the whole movement towards fresh adaptation begins too. The flirtation with error keeps the algedonic feedbacks toned up and ready to recognize the need for change.

The idea that a learning process will have a tendency to learn itself into being stuck unless provided with enough variety seemed like a pretty deep idea, and resonated with some things I’ve been pondering myself, both on a personal level as well as in politics and society.
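Beer’s description is concrete enough to simulate. What follows is a minimal Python sketch built on my own simplifying assumptions, not Beer’s circuit:

- Each of ten contacts lights red or green.
- The environment rewards only the color it currently favors.
- A reward retrains one adjustable contact to that color.

`AdaptiveMachine` and `train` are hypothetical names. The “plain” machine has ten adjustable contacts. The “built” one mirrors the experimental version in the quote, with one contact fixed to red and one fixed to green.

```python
import random

RED, GREEN = "red", "green"


class AdaptiveMachine:
    """Toy model of a ten-contact adaptive machine (a simplification, not Beer's circuit)."""

    def __init__(self, fixed=(), adjustable=10, seed=0):
        # Fixed contacts always light their own color and bypass the learning.
        self.fixed = list(fixed)
        # Adjustable contacts start half red, half green and can be retrained.
        self.adjustable = [RED if i % 2 == 0 else GREEN for i in range(adjustable)]
        self.rng = random.Random(seed)

    def contacts(self):
        return self.adjustable + self.fixed

    def p(self, color):
        """Probability that a randomly chosen contact lights the given color."""
        contacts = self.contacts()
        return contacts.count(color) / len(contacts)

    def step(self):
        """Pick one contact at random and return the color it lights."""
        return self.rng.choice(self.contacts())

    def reward(self, color):
        """Retrain one adjustable contact (if any remain) to the rewarded color."""
        for i, current in enumerate(self.adjustable):
            if current != color:
                self.adjustable[i] = color
                return


def train(machine, favored, steps):
    """The environment rewards only the favored color; other outcomes get no feedback."""
    for _ in range(steps):
        if machine.step() == favored:
            machine.reward(favored)


plain = AdaptiveMachine(adjustable=10, seed=1)
built = AdaptiveMachine(fixed=(RED, GREEN), adjustable=8, seed=1)

for machine in (plain, built):
    train(machine, RED, 1000)    # the environment settles for red...
    train(machine, GREEN, 1000)  # ...then suddenly favors green
    print(machine.p(GREEN))
```

The plain machine ends up fully red in this toy. It then never produces a green result for the environment to reward, so it stays stuck. The built machine’s fixed green contact keeps generating occasional greens. Those get rewarded until the adjustable contacts have all moved over.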

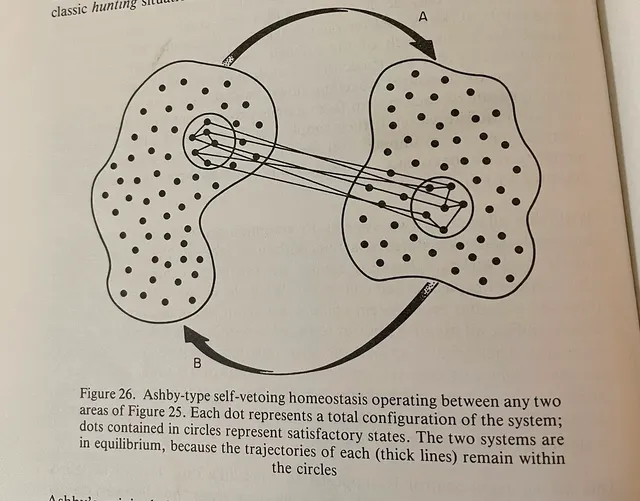

In another section of the book, Beer discusses a model of two systems acting as mutual controllers. If they’re viable systems, like living organisms, each is trying to keep its internal state in homeostasis. That generally involves lots of variables, so the state lives in a big multi-dimensional space. We’re not good at visualizing huge multi-dimensional spaces, so instead we can picture a 2D space in which some combinations of variables fall inside the region of homeostasis and some fall outside. Changes over time then trace “trajectories” through that space. The “are you in homeostasis?” question can be thought of as a metavariable. The system doesn’t have to have any specific thing that corresponds to “being OK” or “alive-ness”; instead, there’s a complicated interaction among lots of variables. There’s also a zone around those stable islands from which the system knows how to get back (think of being underwater: how distressing that is to an air-breathing creature like you probably depends on whether you believe you’re capable of swimming out of it). Thinking of this “distance from homeostasis” variable as a conceptual way to measure wellbeing seemed really interesting to me. It would be nonlinear and asymmetric, and maybe less amenable to naive extrapolation or weird big-numbers analyses than the presumably simple function in Utilitarianism seems to be. And thinking of learning new strategies as the way those zones expand seemed useful as well.
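Here is a rough sketch of the “distance from homeostasis” idea, under assumptions that are entirely mine. The 2D state, the variable names `x` and `y`, the stable bounds, and the recoverable margins are all invented. Taking the worst single excursion, rather than a sum, is one possible choice among many. The sketch shows a metavariable that is zero inside the stable region, nonlinear because the worst variable dominates, and asymmetric because each side of each variable has its own margin.

```python
# Hypothetical 2D slice of a system's state space; variable names and bounds are invented.
STABLE = {"x": (0.0, 1.0), "y": (0.0, 1.0)}

# How far past each edge the system still knows how to get back, as (below, above).
# The margins are deliberately asymmetric; all numbers are illustrative.
MARGIN = {"x": (0.5, 0.25), "y": (1.0, 0.5)}


def excursion(value, low, high, below, above):
    """0 inside the stable range; otherwise the overshoot divided by that side's margin."""
    if value < low:
        return (low - value) / below
    if value > high:
        return (value - high) / above
    return 0.0


def distance_from_homeostasis(state):
    """A metavariable: no single variable means 'OK'; the worst excursion dominates."""
    return max(excursion(state[k], *STABLE[k], *MARGIN[k]) for k in STABLE)


def zone(state):
    d = distance_from_homeostasis(state)
    if d == 0.0:
        return "homeostasis"
    return "recoverable" if d <= 1.0 else "lost"


print(zone({"x": 0.5, "y": 0.5}))   # homeostasis
print(zone({"x": -0.4, "y": 0.5}))  # recoverable: 0.4 below, margin 0.5
print(zone({"x": 1.4, "y": 0.5}))   # lost: 0.4 above, margin only 0.25
```

In this toy, learning a new strategy would correspond to widening an entry in `MARGIN`. That turns some “lost” states into “recoverable” ones.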

Information Theory

A lot of Beer’s ideas relate to Information Theory. The conventional take on the topic is built on Claude Shannon’s work in communications, but Beer’s school tends to talk about “Variety” rather than “Shannon Entropy”. The two are closely related. Variety is the number of distinguishable states. Measured in bits (the base-2 logarithm of that number), it equals the Shannon entropy when every state is equally likely. Even so, talking in terms of increasing or decreasing variety does seem useful. For example, describing a decision-making process as reducing the variety of options under consideration until you’re left with the one thing you actually do seems more natural than saying you’re reducing the entropy.
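A small Python sketch makes the relationship concrete. The function names are my own, and the four options are arbitrary. Variety in bits matches Shannon entropy for equally likely options and exceeds it when the odds are skewed. A decision that narrows the options reduces variety step by step to zero bits.

```python
import math


def variety_bits(states):
    """Ashby-style variety: count the distinguishable states, then express it in bits."""
    return math.log2(len(set(states)))


def shannon_entropy(probs):
    """Shannon entropy, in bits, of a probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


options = ["A", "B", "C", "D"]
print(variety_bits(options))                       # 2.0
print(shannon_entropy([0.25, 0.25, 0.25, 0.25]))   # 2.0: uniform, so it matches variety
print(shannon_entropy([0.5, 0.25, 0.125, 0.125]))  # 1.75: same options, skewed odds

# A decision as variety reduction: 4 options -> 2 -> 1 is 2 bits -> 1 bit -> 0 bits.
print([variety_bits(options[:n]) for n in (4, 2, 1)])  # [2.0, 1.0, 0.0]
```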

A key factor in Beer’s thinking seems to be Ashby’s Law of Requisite Variety: the idea that a controller needs at least as much variety as the thing it’s controlling in order to maintain stability. For example, a thermostat that controls both a heater and an air conditioner needs at least three states (too hot, too cold, just right). If it had only two, the best it could do would be to rapidly oscillate back and forth between heating and cooling. When complex things like humans are involved there can be game-theory-like strategies, and Beer usually talks about these in terms of amplifying or attenuating variety. For example, a party in a negotiation may “increase variety” by backing away from principles it has previously espoused, so that it doesn’t have so few options that the counterparty can exploit it.
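The thermostat example can be simulated in a few lines. The following is a toy model with invented assumptions:

- Each step, the chosen action moves the temperature by one degree or leaves it alone.
- There is no outside drift.
- The setpoint of 20 and the half-degree dead band are arbitrary.

The function names are hypothetical. The point is only the difference between a two-state and a three-state controller.

```python
def two_state(temp):
    """Only heat or cool: there is no 'leave it alone' state."""
    return "heat" if temp < 20 else "cool"


def three_state(temp):
    """Heat, cool, or do nothing when the temperature is close enough."""
    if temp < 19.5:
        return "heat"
    if temp > 20.5:
        return "cool"
    return "off"


def simulate(controller, temp=15, steps=60):
    """Toy room: return the temperature after each step and how often the action changed."""
    effect = {"heat": 1, "cool": -1, "off": 0}
    trace, switches, last = [], 0, None
    for _ in range(steps):
        action = controller(temp)
        if last is not None and action != last:
            switches += 1
        last = action
        temp += effect[action]
        trace.append(temp)
    return trace, switches


for controller in (two_state, three_state):
    trace, switches = simulate(controller)
    print(controller.__name__, trace[-4:], "switches:", switches)
```

Both controllers warm the room to 20. After that, the two-state controller flips between heating and cooling on every step. The three-state controller switches off and stays put.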

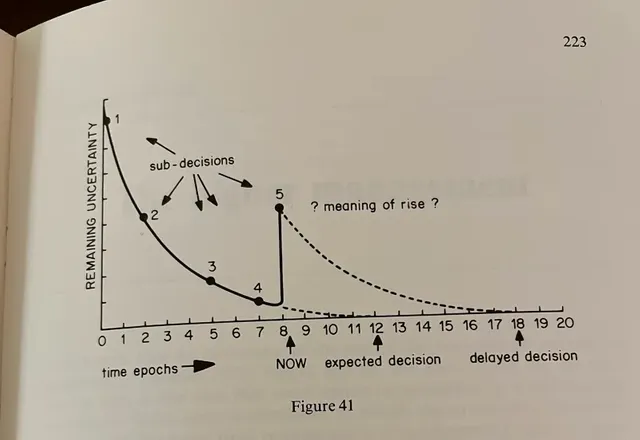

I’m not sure I completely buy everything he’s saying (I can see how you can attenuate variety by ignoring information, but I’m not sure you can genuinely amplify it, maybe only create the appearance of doing so), but I’m intrigued by some of it. In one section he uses some hard-to-follow algebraic notation to describe the process of making a business decision. It’s built around a vague semi-example, where he’s talking about figuring out a new product based on different production, distribution, and marketing decisions. If some particular factor constrains one dimension of the decision (say, something about the machines available makes one production choice clearly preferable) that has consequences for other factors (which factory you use to produce the thing will impact which distribution methods make sense, etc.). The process of having the mutual constraints shuffle through eventually reduces your variety to 1, i.e. you’ve made the decision about what to do. This seemed like it might be a useful way to think about quantification of agency and decision-making, which is something I’ve felt ought to be useful to game analysis. For example, whether a player feels like they’re converging on a decision or are stuck in too-many-options analysis paralysis may have something to do with the rate at which they’re “reducing variety”.
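Beer uses his own algebraic notation here. Instead, the following is a plain Python sketch of the general idea, with every option name and constraint invented for illustration. It also substitutes a brute-force filter over all combinations for Beer’s process of mutual constraints propagating through each other. Variety is simply the number of plans still consistent with everything decided so far.

```python
import math
from itertools import product

# Hypothetical option sets for a new product (invented, not Beer's example).
OPTIONS = {
    "production": ["plant_A", "plant_B", "contract"],
    "distribution": ["rail", "truck", "direct"],
    "marketing": ["retail", "online"],
}

# Hypothetical constraints, each a test applied to one complete plan.
CONSTRAINTS = [
    ("machines at plant_B suit the product",
     lambda p: p["production"] == "plant_B"),
    ("plant_B has no rail siding",
     lambda p: not (p["production"] == "plant_B" and p["distribution"] == "rail")),
    ("channel must fit the marketing approach",
     lambda p: (p["distribution"], p["marketing"])
     in {("truck", "retail"), ("direct", "online"), ("rail", "retail")}),
    ("product is too heavy to ship direct",
     lambda p: p["distribution"] != "direct"),
]


def narrow(options, constraints):
    """Enumerate every plan, then filter by each constraint, recording variety as we go."""
    keys = list(options)
    plans = [dict(zip(keys, combo)) for combo in product(*options.values())]
    trace = [len(plans)]
    for _reason, allowed in constraints:
        plans = [plan for plan in plans if allowed(plan)]
        trace.append(len(plans))
    return plans, trace


plans, trace = narrow(OPTIONS, CONSTRAINTS)
print(trace)                                    # [18, 6, 4, 2, 1]
print([round(math.log2(n), 2) for n in trace])  # the same variety, in bits
print(plans)
```

Filtering is order-independent in this brute-force version. Applying the constraints in a different order changes the intermediate counts, and therefore the rate at which variety falls, but not the final plan. That rate is the kind of quantity the paragraph above suggests might track whether a player feels like they are converging on a decision.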

Final Thoughts

I think there are some valuable things in the book, but unless you’ve got a lot of time and energy, or find Beer’s writing more digestible than I did, I don’t think I can recommend actually reading it. I’m personally torn on whether to go back to the sections I bounced off of and/or read Beer’s companion volume The Heart of Enterprise, where he may present his viable system ideas in a different context. I think I’m glad I’ve been exposed to these ideas, but I wish it hadn’t taken so much work to get there. And the experience of going to an academic library and checking out a physical book turned out to be a fun little quest, so that was another positive for me personally, though it isn’t really about the content of the book.

[1] I didn’t know this until recently, but any resident of Oregon can register to check things out of the University of Oregon library. Since I live in Eugene, Oregon and can get to the UofO campus without too much effort this seems like a great program.
