Hallucina-Gen - Spot where your LLM might make mistakes on documents

Hallucina-Gen

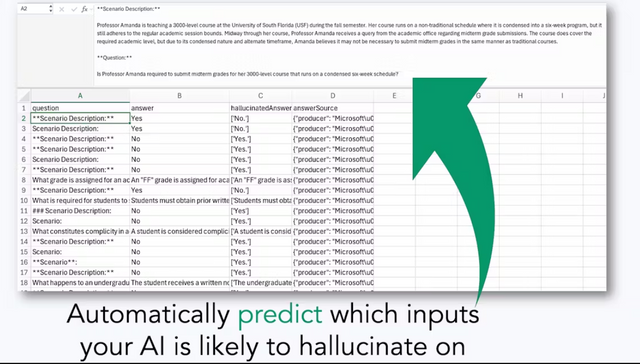

Spot where your LLM might make mistakes on documents

Hunter's comment

Using LLMs to summarize or answer questions from documents? We auto-analyze your PDFs and prompts, and produce test inputs likely to trigger hallucinations. Built for AI developers to validate outputs, test prompts, and squash hallucinations early.

Link

https://hallucina-gen.actualization.ai/

This is posted on Steemhunt, a place where you can dig products and earn STEEM.
