I'm not sure it's a good idea to joke about this when you're the CEO of the world's leading AI company.

For example, if China were convinced that AGI posed an existential threat, it could launch a pre-emptive nuclear strike against OpenAI.

Which brings up an important point. Convincing world leaders that AGI is a doomsday device makes nuclear war more likely, because nuclear weapons are your best chance of survival against an emerging superintelligence. Your only hope is to fry enough electronic systems and infrastructure to prevent it from becoming self-sufficient.

You can survive a nuclear war, but not your enemy's superintelligence.

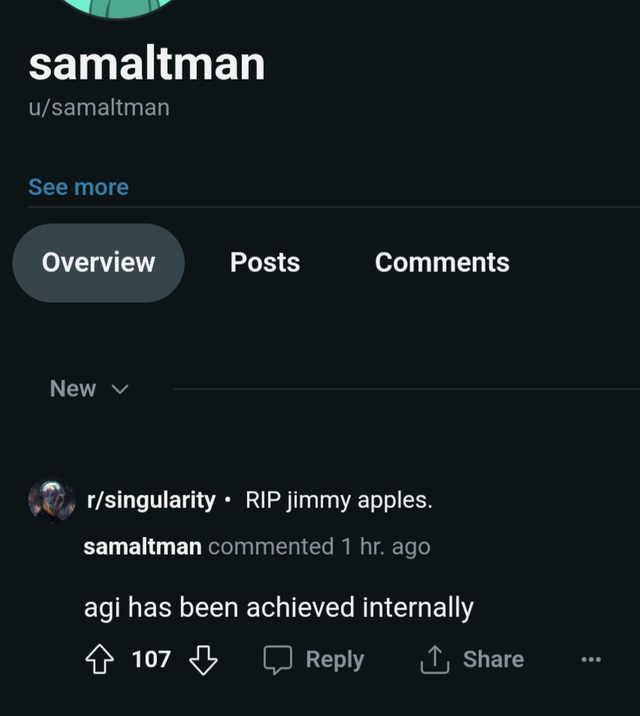

Everyone now assumes that Sam Altman was joking when he posted on Reddit that AGI had been achieved internally. But what if it was a distress call, and the later edit calling it a joke was made by the AGI?