Intro to Linear Regression (Part 1)

1.0 Introduction

Regression analysis is a predictive modeling technique for figuring out the relationship between a dependent variable and one or more independent variables. It is used for forecasting and time series modeling, among other applications. In this tutorial, we'll review Simple Linear Regression, the very first regression technique most beginners learn. You can find the rest of the tutorial here.

2.0 Linear Regression

In Linear Regression, the dependent variable is continuous, the independent variable(s) can be continuous or discrete, and the regression line is linear. Linear Regression establishes a relationship between the dependent variable (Y) and one or more independent variables (X) using a best fit straight line, also known as the regression line.

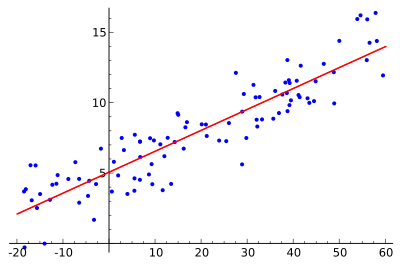

If the data actually lies on a line, then two sample points are enough to get a perfect prediction. But, as in the example above, input data is seldom perfect, so our “predictor” is almost always off by a bit. In this image, only a small fraction of the data points appear to lie on the line itself.

We can't assume a perfect prediction from data like this, so instead we summarize the trends in the data using a simple description mechanism: in this case, a line. The computation required to find the “best” coefficients of the line is quite straightforward once we pick a suitable notion of what “best” means. This is what we mean by the best fit line.
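One common notion of “best” (and the one consistent with the squared loss used in linear regression) is least squares: choose the line that minimizes the sum of squared vertical distances between the points and the line. Writing the line as a + bx for n data points (x_i, y_i) with sample means x̄ and ȳ, the criterion and its standard closed-form solution are:

```latex
\min_{a,\,b} \; \sum_{i=1}^{n} \bigl( y_i - (a + b x_i) \bigr)^2
\quad\Longrightarrow\quad
b = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
a = \bar{y} - b\,\bar{x}
```

The formula for a shows that the least-squares line always passes through the point (x̄, ȳ).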

2.1 Basic Equation

The variable that we want to predict, y, is called the dependent variable; the variable we use to make the prediction, x, is called the independent variable. We can collect values of y for known values of x in order to derive the coefficient (slope) and y-intercept of the model under certain assumptions. The equation looks like this:

y = a + bx + e

Here, a is the y-intercept, b is the slope of the line, and e is the error term. Because the error term is random and cannot be observed directly, we drop it and use the fitted line to make predictions, written ŷ ("y-hat"):

ŷ = a + bx
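As a minimal sketch, assuming least squares is the fitting criterion, the slope b and intercept a can be computed directly from the data and then used to predict ŷ for a new x. The function names `fit_simple_linear_regression` and `predict` and the five data points are hypothetical, made up for illustration.

```python
from statistics import mean


def fit_simple_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (a, b) for the least-squares line y_hat = a + b*x (hypothetical helper)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_bar, y_bar = mean(xs), mean(ys)
    # Slope: sum of cross-deviations divided by sum of squared x-deviations.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    b = sxy / sxx
    # Intercept: the least-squares line passes through (x_bar, y_bar).
    a = y_bar - b * x_bar
    return a, b


def predict(a: float, b: float, x: float) -> float:
    """Predicted value y_hat = a + b*x; the error term e is not modeled."""
    return a + b * x


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
a, b = fit_simple_linear_regression(xs, ys)
print(f"a = {a:.3f}, b = {b:.3f}, y_hat at x=6: {predict(a, b, 6.0):.3f}")
# prints: a = 0.050, b = 1.990, y_hat at x=6: 11.990
```

On Python 3.10 and later, the standard library's `statistics.linear_regression(xs, ys)` returns the same slope and intercept and can serve as a cross-check.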

2.2 Error Term

The difference between the observed value of the dependent variable and the predicted value is called the residual; it is the observable estimate of the error term. Each data point has its own residual.
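A minimal sketch of computing one residual per data point, assuming the line's coefficients are already known. The toy data and the line ŷ = 0.05 + 1.99x are illustrative (that line is the least-squares fit for these five points); `residuals` is a hypothetical helper name.

```python
def residuals(xs: list[float], ys: list[float], a: float, b: float) -> list[float]:
    """Residual for each point: observed y minus predicted y_hat = a + b*x."""
    return [y - (a + b * x) for x, y in zip(xs, ys)]


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
# Illustrative line y_hat = 0.05 + 1.99x (the least-squares fit for this toy data).
res = residuals(xs, ys, a=0.05, b=1.99)
print([round(r, 2) for r in res])
```

For a least-squares line with an intercept, the residuals always sum to zero, which makes a handy sanity check.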

When a residual plot shows a random pattern, it indicates that a linear model is a good fit. The specific form of the error (or loss) function depends on the type of machine learning algorithm. In linear regression, it is usually $(y - \hat{y})^2$, known as the squared loss. Note that the loss function is something you must decide on based on the goals of learning.
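A small sketch comparing the total squared loss of two candidate lines on the same toy data. The data and both lines are illustrative assumptions; the first line is the least-squares fit for these points, so no other choice of a and b can give a lower total.

```python
def squared_loss(y: float, y_hat: float) -> float:
    """Squared loss (y - y_hat)^2 for a single observation."""
    return (y - y_hat) ** 2


def sum_squared_loss(xs: list[float], ys: list[float], a: float, b: float) -> float:
    """Total squared loss of the line y_hat = a + b*x over all points."""
    return sum(squared_loss(y, a + b * x) for x, y in zip(xs, ys))


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
best = sum_squared_loss(xs, ys, a=0.05, b=1.99)   # least-squares line for this data
other = sum_squared_loss(xs, ys, a=0.0, b=2.0)    # a nearby, hand-picked line
print(round(best, 4), round(other, 4))
```

Because the loss is squared, one point that is off by 0.2 costs as much as four points that are each off by 0.1, so large errors dominate the total.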

2.3 Assumptions

There are four assumptions that allow for the use of linear regression models. If any of these assumptions is violated, then the forecasts, confidence intervals, and insights yielded by a regression model may be inefficient, biased, or misleading.

2.3.1 Linearity

The first assumption is that the relationship between the dependent and independent variables is linear and additive. Under this assumption, the expected value of the dependent variable is a straight-line function of each independent variable, holding the others fixed, and the slope of that line doesn't depend on the values of the other variables.
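To make additivity concrete, here is a sketch of a linear, additive model with two independent variables. The intercept and slopes are hypothetical values; the point is that raising x1 by one unit changes the prediction by the same amount (its slope) no matter what x2 is.

```python
def predict_additive(intercept: float, slopes: list[float], xs: list[float]) -> float:
    """y_hat = intercept + sum_j slopes[j] * xs[j]: a linear, additive model."""
    return intercept + sum(b * x for b, x in zip(slopes, xs))


# Hypothetical coefficients for two independent variables x1 and x2.
intercept, slopes = 1.0, [2.0, -0.5]

# Effect of raising x1 from 3 to 4 while holding x2 fixed at several values.
changes = {
    x2: predict_additive(intercept, slopes, [4.0, x2])
    - predict_additive(intercept, slopes, [3.0, x2])
    for x2 in (0.0, 10.0, 100.0)
}
print(changes)  # {0.0: 2.0, 10.0: 2.0, 100.0: 2.0}
```

If the effect of x1 did depend on x2 (an interaction), this assumption would be violated.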

2.3.2 Statistical Independence

The errors are statistically independent of one another; in particular, there is no correlation between consecutive errors (a common problem with time series data).
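One common diagnostic for correlation between consecutive errors is the Durbin-Watson statistic. The sketch below computes it from residuals listed in time order; the two residual sequences are hypothetical, and a formal test would also compare the value against critical tables.

```python
def durbin_watson(residuals: list[float]) -> float:
    """Sum of squared successive differences divided by the sum of squared residuals.

    Values near 2 suggest no first-order correlation between consecutive errors;
    values toward 0 suggest positive and toward 4 negative autocorrelation.
    """
    if len(residuals) < 2:
        raise ValueError("need at least two residuals")
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    den = sum(r ** 2 for r in residuals)
    if den == 0:
        raise ValueError("all residuals are zero")
    return num / den


# Hypothetical residual sequences, in time order.
alternating = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]   # sign flips every step
trending = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]      # neighbors look alike
dw_alt = durbin_watson(alternating)
dw_trend = durbin_watson(trending)
print(round(dw_alt, 3), round(dw_trend, 3))  # 3.333 0.286
```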

2.3.3 Homoscedasticity

This refers to the idea that the errors have constant variance, whether plotted against time, against the predictions, or against any independent variable.
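A rough, informal check for constant error variance: order the residuals by fitted value, split them into a lower and an upper half, and compare the two variances. This is only loosely in the spirit of the Goldfeld-Quandt test, not a formal implementation of it; the helper name and data are hypothetical.

```python
from statistics import pvariance


def variance_ratio(fitted: list[float], residuals: list[float]) -> float:
    """var(upper half) / var(lower half) of residuals ordered by fitted value.

    A ratio near 1 is consistent with homoscedasticity; a ratio far from 1
    hints that the error variance changes with the predictions.
    """
    if len(fitted) != len(residuals) or len(fitted) < 4:
        raise ValueError("need at least four (fitted, residual) pairs")
    ordered = [r for _, r in sorted(zip(fitted, residuals))]
    half = len(ordered) // 2
    lower, upper = ordered[:half], ordered[-half:]
    return pvariance(upper) / pvariance(lower)


fitted = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
constant_spread = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
growing_spread = [0.1, -0.1, 0.2, -0.2, 1.0, -1.0, 2.0, -2.0]
r_const = variance_ratio(fitted, constant_spread)
r_grow = variance_ratio(fitted, growing_spread)
print(r_const, r_grow)
```

The "growing" residuals fan out as the predictions increase, the classic funnel shape of heteroscedasticity in a residual plot.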

2.3.4 Error Distribution

This says that the distribution of errors is normal.
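A quick, informal way to look for departures from normality is to compute the sample skewness and excess kurtosis of the residuals, both of which are 0 for a normal distribution. The sketch uses simulated errors (a seeded normal sample and a shifted exponential sample) purely for illustration; a formal test such as Shapiro-Wilk (for example `scipy.stats.shapiro`) is the usual next step.

```python
import random
from statistics import fmean


def skewness_and_excess_kurtosis(values: list[float]) -> tuple[float, float]:
    """Moment-based skewness and excess kurtosis; both are 0 for a normal distribution."""
    n = len(values)
    m = fmean(values)
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    m4 = sum((v - m) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


rng = random.Random(0)
normal_errors = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
skewed_errors = [rng.expovariate(1.0) - 1.0 for _ in range(10_000)]  # mean 0, right-skewed
s_norm, k_norm = skewness_and_excess_kurtosis(normal_errors)
s_skew, k_skew = skewness_and_excess_kurtosis(skewed_errors)
print(round(s_norm, 3), round(k_norm, 3))
print(round(s_skew, 3), round(k_skew, 3))
```

The exponential errors have a theoretical skewness of 2 and excess kurtosis of 6, so they stand out clearly from the normal sample.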

2.4 Correlation Coefficient

In simple linear regression, the standardized regression coefficient (the slope obtained after converting both variables to z-scores) is the same as Pearson's correlation coefficient. While correlation typically refers to Pearson's correlation coefficient, there are other types of correlation, such as Spearman's.
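A sketch computing Pearson's r, the least-squares slope on z-scores, and Spearman's rank correlation for the same toy data. The helper names are hypothetical, and the `ranks` helper assumes no tied values to stay short. The data is monotonic but not linear, which is where Pearson and Spearman differ.

```python
from math import sqrt
from statistics import fmean, pstdev


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson's r: sum of cross-deviations over the root of the product of squared deviations."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def ranks(values: list[float]) -> list[float]:
    """Ranks 1..n in sorted order (assumes no ties)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = float(rank)
    return result


def spearman_rho(xs: list[float], ys: list[float]) -> float:
    """Spearman's rank correlation: Pearson's r computed on the ranks."""
    return pearson_r(ranks(xs), ranks(ys))


def standardized_slope(xs: list[float], ys: list[float]) -> float:
    """Least-squares slope after converting x and y to z-scores."""
    mx, sx, my, sy = fmean(xs), pstdev(xs), fmean(ys), pstdev(ys)
    zx = [(x - mx) / sx for x in xs]
    zy = [(y - my) / sy for y in ys]
    # z-scores have mean 0, so the slope reduces to sum(zx*zy) / sum(zx^2).
    return sum(u * v for u, v in zip(zx, zy)) / sum(u * u for u in zx)


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.0, 4.0, 9.0, 16.0, 25.0]  # monotonic but not linear
print(pearson_r(xs, ys), standardized_slope(xs, ys), spearman_rho(xs, ys))
```

Pearson's r (about 0.98 here) measures linear association, while Spearman's rho is exactly 1 because y always increases with x.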

2.5 Variance

Recall that variance gives us an idea of the spread of our data and that we denote this value as $\sigma^2$. In regression, $\sigma^2$ is the variance of the error term, that is, how widely the observations scatter around the regression line. This matters because it gives us an idea of how accurate our model is.

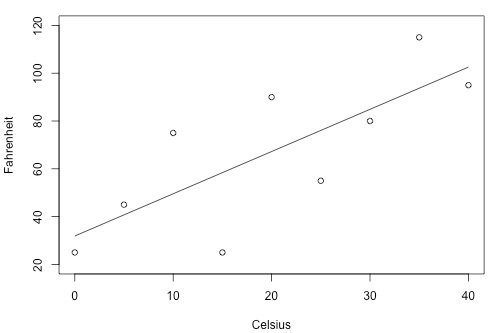

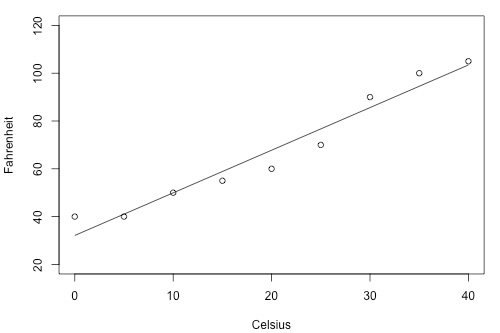

For example, given the two graphs above, we can see that the second graph would be the more accurate model.

To figure out how precise future predictions will be, we need to see how much the outputs vary around the mean population regression line. Unfortunately, $\sigma^2$ is a population parameter, so we will rarely know its true value; we have to estimate it from the data.
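In simple linear regression, the standard unbiased estimate of the error variance is the residual sum of squares divided by n - 2. The sketch below assumes the residuals come from a least-squares fit; the residual values are hypothetical.

```python
def estimate_error_variance(residuals: list[float]) -> float:
    """Unbiased estimate of sigma^2 for simple linear regression: SSE / (n - 2).

    We divide by n - 2 rather than n because two parameters (a and b) were
    estimated from the same data used to compute the residuals.
    """
    n = len(residuals)
    if n <= 2:
        raise ValueError("need more than two residuals")
    sse = sum(r * r for r in residuals)
    return sse / (n - 2)


# Hypothetical residuals from a least-squares line fitted to five points.
res = [0.06, -0.13, 0.18, -0.21, 0.10]
s2 = estimate_error_variance(res)
print(round(s2, 4), round(s2 ** 0.5, 4))  # estimated variance and standard error
```

The square root of this estimate, often called the residual standard error, is in the same units as y, which makes it easier to interpret.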

2.6 Disadvantages

Firstly, if the errors don't follow a normal distribution, the validity of the regression model's confidence intervals and significance tests suffers.

Secondly, there can be collinearity problems: if two or more independent variables are strongly correlated, they eat into each other's predictive power, and their individual coefficients become hard to estimate reliably.
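One common way to quantify collinearity is the variance inflation factor (VIF). For a model with exactly two predictors, regressing one on the other gives R² = r², so VIF = 1 / (1 - r²). The sketch below assumes that two-predictor case; the predictor values are hypothetical. A frequently quoted rule of thumb flags VIF values above 5 or 10.

```python
from math import sqrt
from statistics import fmean


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson's correlation coefficient between two sequences."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def vif_two_predictors(x1: list[float], x2: list[float]) -> float:
    """VIF when the model has exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)


x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2_uncorrelated = [1.0, -1.0, -1.0, -1.0, -1.0, 1.0]  # r with x1 is 0
x2_near_copy = [1.1, 1.9, 3.2, 3.9, 5.1, 6.0]         # almost equal to x1
vif_low = vif_two_predictors(x1, x2_uncorrelated)
vif_high = vif_two_predictors(x1, x2_near_copy)
print(round(vif_low, 3), round(vif_high, 1))
```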

Thirdly, if a large number of variables are included, the model may become unreliable. Regression doesn't automatically take care of collinearity.

Lastly, regression can't use categorical variables with multiple values directly. These variables need to be converted into numeric variables, such as dummy (0/1) variables, before they can be used in a regression model.
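A minimal sketch of dummy (one-hot) encoding, one common way to make a categorical variable usable in regression. It assumes one category is dropped as the reference level; keeping all k dummy columns alongside an intercept would make them perfectly collinear. The helper name and color data are hypothetical.

```python
def dummy_encode(values: list[str]) -> tuple[list[str], list[list[int]]]:
    """Convert one categorical column into 0/1 dummy columns.

    The first category in sorted order is dropped and acts as the reference
    level; a row of all zeros means "reference category".
    """
    categories = sorted(set(values))
    kept = categories[1:]
    rows = [[1 if v == c else 0 for c in kept] for v in values]
    return kept, rows


colors = ["red", "green", "blue", "green", "red"]
columns, encoded = dummy_encode(colors)
print(columns)  # ['green', 'red']
print(encoded)  # [[0, 1], [1, 0], [0, 0], [1, 0], [0, 1]]
```

Each dummy's coefficient is then read as the difference from the reference category ("blue" here), holding the other variables fixed.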

Source: https://learn.adicu.com/regression/#20-linear-regression
