We Need to Stop Putting Blind Faith into Big Data

Amid the promises for a better world, big data is starting to show a darker side. Although killer robots aren't involved, machine learning algorithms are making decisions that adversely affect people's careers, civil rights, and criminal justice--often with terribly unfair results. And as the problems continue to mount, corporate executives and government bureaucrats hide behind a wall of secrecy, mathematical complexity, and irrational overconfidence.

Mathematician and data scientist Cathy O'Neil explains the issues in a recent TED talk, available at https://www.ted.com/talks/cathy_o_neil_the_era_of_blind_faith_in_big_data_must_end. After briefly summarizing the video presentation, this article takes a look under the hood of one popular ML algorithm and shows that fixing the problem, perhaps with greater transparency and third-party audits, is not as easy as it first sounds.

Brief Recap of the Video

O'Neil reviews a number of case studies that show how things can go very wrong with big data. These include:

- Teachers in New York City and Washington, D.C. are being evaluated by machine learning algorithms that rate their performance (and inform firing decisions). Private companies developed and sold the algorithms to public administrators, who refused to divulge how the algorithms worked. A third-party audit showed that the algorithms were junk, amounting to little more than a random number generator.

- Judges in Florida are using a machine learning algorithm to estimate the probability that an offender will reoffend. A judge may lengthen an offender's sentence if the algorithm returns a high score. However, the algorithm was trained on racially biased data: more police were patrolling poor, Black neighborhoods, and white offenders were not charged as often as Black offenders.

- A corporate HR department uses an algorithm to evaluate employees. An employee is rated as successful if two data points are satisfied: remained at the company for four years, and received a promotion during that time. However, the algorithm subsequently ignores a disturbing trend in the company’s culture that inhibits the promotion of female employees. In effect, an algorithm based on this company’s data now embeds a bias against women.

In conclusion, Cathy O’Neil calls for transparency in the data science field. People adversely affected by algorithms should have some recourse to challenge them. Also, these algorithms should be subject to third-party audits that would do the following:

- Check data integrity (investigate whether the data was collected with a racial bias).

- Audit the definition of success (ask if the success criterion is perpetuating previous bad/illegal practices).

- Evaluate accuracy (ask how often errors occur, and for whom the model fails).

- Determine the long-term effects of feedback loops (like Facebook’s failure to consider what would happen in the long run if they only showed us what our friends post).
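The accuracy check, "for whom does the model fail," can be made concrete with a few lines of code. The sketch below is illustrative only: the records are made up, and the names `records` and `false_positive_rates` are my own, not anything from the talk. For each group, it computes how often people who did not reoffend were flagged as high risk anyway.

```python
from collections import defaultdict

# Made-up audit records: (group, flagged_high_risk, actually_reoffended)
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("B", True, True),
]

def false_positive_rates(records):
    """Per group: share of people who did NOT reoffend but were flagged anyway."""
    flagged = defaultdict(int)
    did_not_reoffend = defaultdict(int)
    for group, flagged_high_risk, reoffended in records:
        if not reoffended:
            did_not_reoffend[group] += 1
            if flagged_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / did_not_reoffend[g] for g in did_not_reoffend}

rates = false_positive_rates(records)
print(rates)  # {'A': 0.5, 'B': 0.25}
```

In this toy data, group A is wrongly flagged twice as often as group B. A gap like that is the kind of thing an auditor would want explained.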

But auditing machine learning algorithms raises new questions and potential difficulties. To see this, let’s review how a machine learning algorithm is typically constructed. For those new to the subject, don’t worry. I’ll keep the discussion at a fairly high level. Try not to get bogged down in the details, because I'm not really giving you enough information to write the source code to implement one of these yourself.

The 35,000-Foot View of Machine Learning

You're familiar with computer programming, right? Maybe you've seen blocks of "code" on web sites. Well, machine learning is a very different kind of beast.

Classical programming is an approach to problem solving that treats a computer like a mindless entity only capable of carrying out very explicit instructions. The human programmer works out a solution ahead of time that must anticipate every type of possible input from a user, every type of logical decision that must be made based on one condition or another, and every type of error that might occur along the way. The programmer then writes step-by-step instructions (i.e., source code) transforming those inputs (e.g., a request to withdraw money from a bank ATM) into some final result (e.g., lowering the money tray with five $20 bills). The key observation here is that, in order to determine how the computer made a decision in any given instance, all that you need to do is look at the source code.
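To make the contrast concrete, here is a minimal sketch of the ATM example written the classical way. The function name `withdraw` and its rules are made up for illustration. The point is that every decision the program can make is spelled out in the source code, so anyone can read it and see why a request was accepted or refused.

```python
def withdraw(balance, amount):
    """Dispense `amount` in $20 bills, or refuse with an explicit reason."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    if amount % 20 != 0:
        raise ValueError("amount must be a multiple of $20")
    if amount > balance:
        raise ValueError("insufficient funds")
    bills = [20] * (amount // 20)   # the bills lowered in the money tray
    return balance - amount, bills

new_balance, bills = withdraw(balance=500, amount=100)
print(new_balance, bills)  # 400 [20, 20, 20, 20, 20]
```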

However, in the world of machine learning, the computer is not a mindless entity. It is given a framework of a "mini-brain," and it will use that mini-brain to observe some small part of the world around it, learn patterns in these observations, and then make predictions and/or generalizations when it encounters new, never-before-seen observations. To implement this in a computer, the programmer writes a much smaller number of instructions, and they really have nothing to do with the main task (e.g., predicting real estate values, classifying an image, or interpreting spoken language). Instead, these instructions do little more than wire up this mini-brain and show it how to learn.

Let's look at a neural network (multilayer perceptron) as an example. We will use it to predict the sales price of residential real estate based on square footage, number of bedrooms, and number of bathrooms.

Building a Neural Network

The term "neural network" might sound a bit technical and futuristic and, maybe, intimidating. But it really isn’t. For one thing, it comes from a terribly simplistic view of neuroscience as it existed a few decades ago. The "neurons" in this network are nothing more than two numbers and a function. The two numbers are a weight and a value. The function is called the activation function. Conceptually, one might imagine an input value (perhaps an electrical voltage) traveling down a dendrite, along which it is multiplied by a weight. It arrives at the cell body and is stored as the value. Next, we determine the output from this cell by its level of activation. We supply the cell body value to the activation function, and the result (another electrical voltage) goes out on the output axon.

Be assured that all of this brain vocabulary is conceptual: none of it is actually implemented in code. In reality, matrices are used to store the weights and the cell values. The rest is an exercise in linear algebra and calculus (differentiation). So, you won't really find any neurons in the code. It’s all just math.
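Here is a minimal sketch of what "it's all just math" looks like, assuming NumPy is available. The input values and weights are made up. A whole layer of "neurons" is one matrix multiplication followed by an activation function. The two activation functions shown, the logistic function and ReLU, are common choices.

```python
import numpy as np

def logistic(z):
    """Squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Passes positive numbers through and replaces negatives with zero."""
    return np.maximum(0.0, z)

# Made-up inputs: square footage (in thousands), bedrooms, bathrooms
x = np.array([1.5, 3.0, 2.0])

# Made-up weights for a layer of 2 "neurons," each with one weight per input
W = np.array([[0.2, -0.1, 0.4],
              [-0.3, 0.5, 0.1]])

values = W @ x            # weighted sums: 0.8 and 1.25
print(relu(values))       # the outputs if this layer uses ReLU
print(logistic(values))   # the outputs if this layer uses the logistic function
```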

Let’s look at how a programmer might create a neural network for our little real estate prediction problem:

- Create a layer of neurons by populating a matrix of weights (with random numbers) and a matrix of values (all zeroes). We’ll need to multiply lots of weights and values together, and this is done most efficiently using matrix multiplication.

- Add more layers to the network. The output matrices from one layer become the input matrices for the next. Add enough layers, and people at Google will call your network “deep.” (Sorry to disappoint, but that’s all that “deep learning” really means: neural networks with lots and lots of layers.)

- Add the activation function. This function will be applied to every “neuron” before its final value is passed to the next layer. Researchers have tried a number of such math functions in the past. The logistic function is an old favorite, but a lot of the cool kids today use something called ReLU.

- Connect each input (square footage, bedroom count, bathroom count) to each of the neurons in the first layer.

- Write the code that feeds input data forward through the “network,” using matrix multiplication to multiply the weights and values. Apply the activation function, and pass the final results to the next layer.

- Write an output layer that converts the outputs from the final layer into some meaningful result. In this case, it might be a suggested sales price. In other applications, this final result might be the probability that a particular object is recognized in an image.

- Write a cost function to train the neural network. This cost function gives us a way to compare predictions made by the network against some real-world data that we’ve already collected. The higher the value returned, the further the prediction was from the real-world sales price.

- Train the network by running the data from past sales through it. The network makes a prediction of a sales price from each set of inputs (square footage, bedroom count, bathroom count), and then it uses the cost function to see how far off the prediction was from the real world sales price.

- To learn from each mistake, the network distributes the training error backward layer-by-layer, dividing it up among all of the "neurons." The idea is that, if only we had started with more accurate weights, the predicted sales price (made by the network) would have matched the real-world sales price (as seen in the training data). So we work backward through the layers and make tiny adjustments to each weight, nudging it up or down in whichever direction would have reduced the error reported by the cost function. Weights that contributed more to the error get adjusted more. Incidentally, this training approach is called backpropagation. Using backpropagation, we can determine how much any weight, at any layer, contributed to the final error. More specifically, we calculate the partial derivative of the cost function with respect to each weight, which means differentiating each layer's activation function (each layer might conceivably use a different function) and applying the chain rule from calculus.
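The steps above can be sketched in about thirty lines of NumPy. Treat this as a toy under stated assumptions, not a production recipe: the five "past sales" are made up, the network has a single hidden layer of 8 neurons using the logistic function, the cost function is mean squared error, and bias terms are left out for brevity. The names `forward`, `cost`, `W1`, and `W2` are my own.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up past sales: square footage, bedrooms, bathrooms
raw = np.array([[1000, 2, 1], [1500, 3, 2], [2000, 3, 2],
                [2500, 4, 3], [3000, 5, 3]], dtype=float)
X = raw / raw.max(axis=0)            # scale each input column to at most 1
# Made-up sale prices, in hundreds of thousands of dollars
y = np.array([[2.0], [3.0], [3.8], [5.0], [6.1]])

def logistic(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(predicted, actual):
    """Mean squared error: the larger the value, the further off we were."""
    return float(np.mean((predicted - actual) ** 2))

# Weights start as random numbers: 3 inputs -> 8 hidden neurons -> 1 price
W1 = rng.normal(0.0, 0.5, size=(3, 8))
W2 = rng.normal(0.0, 0.5, size=(8, 1))

def forward(X):
    """Feed inputs forward through the network; return hidden values and prices."""
    hidden = logistic(X @ W1)
    return hidden, hidden @ W2       # output layer: weighted sum = predicted price

learning_rate = 0.01
initial_cost = cost(forward(X)[1], y)

for step in range(5000):
    hidden, predicted = forward(X)
    # Backpropagation: apply the chain rule from the cost back to each weight
    d_predicted = 2.0 * (predicted - y) / len(X)
    d_W2 = hidden.T @ d_predicted
    d_hidden = d_predicted @ W2.T
    d_W1 = X.T @ (d_hidden * hidden * (1.0 - hidden))  # logistic derivative
    # Nudge every weight in the direction that reduces the cost
    W1 -= learning_rate * d_W1
    W2 -= learning_rate * d_W2

final_cost = cost(forward(X)[1], y)
print(initial_cost, final_cost)      # the cost should drop during training
```

Notice that nothing in this code mentions how bedrooms or bathrooms affect a price. Whatever the network "knows" after training lives entirely in the numbers stored in `W1` and `W2`.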

What Do We Audit?

After thinking about how neural networks are built, one might wonder, “how do you audit something like this?” After all, there’s nothing in the source code that tells you how the neural network makes any of its predictions. The only thing that really affects any given decision is the collection of weights that are set during training.

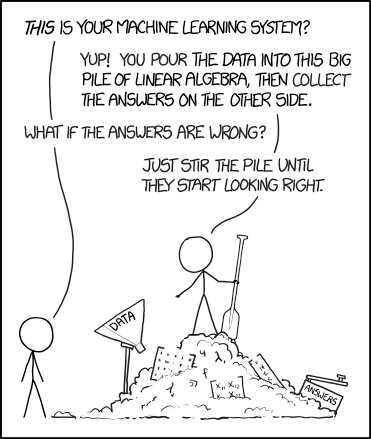

To a certain extent, our “machine learning algorithm” looks much like a little scientist furiously twisting dials on a huge machine. It reads input data from the left side, does something with it based on the dial settings, and spits out the results on the right side. But my favorite way to picture machine learning was created recently by Randall Munroe of xkcd fame ...

Credit: Randall Munroe, xkcd, "Machine Learning," Installment No. 1838

But can we really audit the algorithm by looking at the weights? Unfortunately, no, because the weights are not reproducible! Any time we create and train the neural network from scratch, the weights will end up with different values depending on such things as how they are (randomly) initialized and the order in which the training data is fed into the network.
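A small experiment illustrates the point. This sketch assumes NumPy, made-up sales data, and a toy network with one hidden layer. The `train` function and its `seed` parameter are my own invention. The seed controls both the random starting weights and the order in which training examples are presented.

```python
import numpy as np

# Made-up past sales: square footage, bedrooms, bathrooms
raw = np.array([[1000, 2, 1], [1500, 3, 2], [2000, 3, 2],
                [2500, 4, 3], [3000, 5, 3]], dtype=float)
X = raw / raw.max(axis=0)
y = np.array([[2.0], [3.0], [3.8], [5.0], [6.1]])  # hundreds of thousands of dollars

def logistic(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(seed, passes=2000, learning_rate=0.01):
    """Train the same architecture on the same data; only the seed differs."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 0.5, size=(3, 8))     # random starting weights
    W2 = rng.normal(0.0, 0.5, size=(8, 1))
    for _ in range(passes):
        for i in rng.permutation(len(X)):      # random training order
            x, target = X[i:i + 1], y[i:i + 1]
            hidden = logistic(x @ W1)
            error = 2.0 * (hidden @ W2 - target)
            d_W2 = hidden.T @ error
            d_W1 = x.T @ ((error @ W2.T) * hidden * (1.0 - hidden))
            W1 -= learning_rate * d_W1
            W2 -= learning_rate * d_W2
    return W1, W2

def predict(W1, W2, features):
    return logistic(features @ W1) @ W2

W1_a, W2_a = train(seed=1)
W1_b, W2_b = train(seed=2)
print(np.allclose(W1_a, W1_b))          # do the two runs share the same weights?
print(predict(W1_a, W2_a, X).ravel())   # predictions from run A
print(predict(W1_b, W2_b, X).ravel())   # predictions from run B
```

Two runs on identical data typically end up with different weight matrices, even when their predictions look alike. Reading the raw numbers tells an auditor very little. What the network does with them is what matters.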

To audit this machine learning algorithm, you would need the following:

- The network architecture (number of layers, number of neurons at each layer, the activation functions, and the cost function).

- The weights being used in the current network (which can be used to run simulations).

- Statistical analyses of the training data, looking for problems such as collection bias.
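As a rough illustration of "running simulations" with a set of weights, an auditor could load the weights into a copy of the architecture and probe it: hold every input fixed, change just one, and watch how the prediction moves. The weights below are made up and far smaller than any real network, and the tiny architecture (two hidden neurons with ReLU, no bias terms) is an assumption for the sake of the example.

```python
import numpy as np

# Hypothetical weights handed over for the audit
W1 = np.array([[0.5, 0.1],
               [0.2, 0.3],
               [0.1, 0.4]])      # 3 inputs -> 2 hidden neurons
W2 = np.array([[2.0],
               [1.0]])           # 2 hidden neurons -> 1 predicted price

def predict(features):
    """Run inputs through the audited network (ReLU hidden layer)."""
    hidden = np.maximum(0.0, features @ W1)
    return (hidden @ W2).item()

# Inputs: square footage (in thousands), bedrooms, bathrooms
baseline = predict(np.array([2.0, 3.0, 2.0]))
one_more_bedroom = predict(np.array([2.0, 4.0, 2.0]))

# How much does the prediction move when only the bedroom count changes?
print(baseline, one_more_bedroom, one_more_bedroom - baseline)
```

The same probing idea applies to higher-stakes models: change only one attribute of an otherwise identical input and check whether the output changes in a way that can be justified.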

Conclusion

Machine learning algorithms are not automatically true, objective, and scientific. They are opinions about the world embedded in code. They are subject to error based on biased data collection, poor implementation, faulty training, overfitting, and a host of other reasons. That's why any algorithms that make decisions impacting our lives must be transparent and open to third-party audits. The challenge for data scientists is to provide the tools and information required to perform audits and visualize their results.

This is huge and greatly needed. Humans are unpredictable, and an algorithm (no matter how complex) can never get the whole picture, as far as I'm aware. There is something about human interaction and reasoning that gives us something a machine cannot.

Call it intuition or whatever, but the connection that is made can show us whether someone is trying to trick us or is genuinely honest. A machine can be tricked or gamed. Then you have the side where someone could be falsely labeled by a machine when a human could have found they were telling the truth.

I work in a big tech company doing human work because the machine is not able to do everything perfectly and in many situations we, the humans, need to pick up the slack.

Great post, keep up the hard work!

<3 J. R.

Thanks for the kind comments, JR. I agree with your comment about human intuition, though at times I see something interesting in the neuroscience and bio-inspired ML literature. As we struggle to create better neural nets, we start learning more about human intuition. Sometimes the parallels are spooky.

But what machine intelligence today really lacks is cognition. None of the AI out there right now, even the state-of-the-art stuff, gives the machine an awareness of what it is, why it is making decisions, and what the consequences of those decisions might be. A self-driving car would drive you straight into a truck just as readily as it would stop at a red light, if its training never covered its current sensor inputs.

And yes indeed, machine learning algorithms can be tricked. There is a whole area of research devoted to this, especially in computer vision, coming up with ways to fool ML algorithms (e.g., adding "white noise" to an image that is imperceptible to humans, but causes bedlam for the algorithm).
