How Social Media's Predictive Algorithms Are Perpetuating Division Among The People

Is it useful that we need only type a few letters into a search bar before an algorithm tells us what we're looking for?

Is it helpful to have a piece of computer code decide what we are interested in, so that we needn't look beyond our Facebook home feeds to find news and information?

Many have argued for the advantages of such predictive algorithms. Convenience, ease of access and time saving are the phrases we typically hear on the lips of their supporters.

Whilst they may seem beneficial within the limited scope of these factors, we must also consider the negative implications that accompany those useful elements.

Tunnel Vision

Reality is a completely different experience for each and every one of us. Born into a home shared with over seven billion people, each of us defines our individuality by the way in which we perceive the world and everything in it. Uniqueness is certainly not a bad thing, but when that uniqueness comes at the cost of our understanding of our environment, we lose sight of the fact that it is being unique that makes us all the same.

We are united in our individuality. Our difference from one another is the only thing that each and every one of us on this Earth has in common. This understanding should really be the force that draws us towards one another, but unfortunately, far too many of us see our dissimilarities as a reason to push each other away.

Our understanding of the world is limited by the number of perspectives from which we can view it. To see the world through another's eyes is to discover a part of the world that was previously unknown to us, thus enriching our own perspective and strengthening the grasp we have on our surroundings.

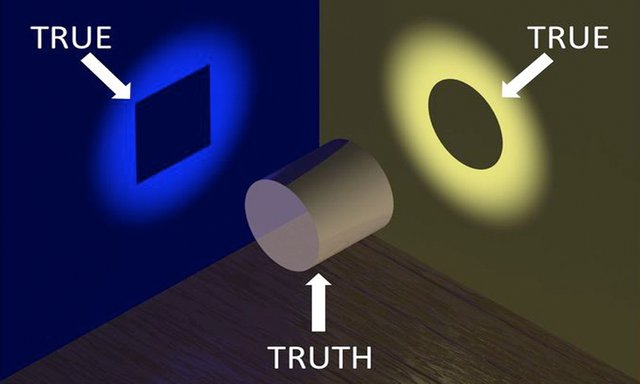

This image is often used to demonstrate how two people discussing any given topic can both be correct in their assertions. However, I have found that the truly profound lesson to be learned from this image is far too often overlooked.

The world is a very complex place, with far more than two truths. So consider, if you will, that there are a million different perspectives from which we could view this shape, each revealing a different result. Only by moving from one vantage point to another could we start to develop a better understanding of the true shape, in all its complexity.

In applying this philosophy to our understanding of the world, we realise that truly immersing ourselves in the perspective of another person is equivalent to viewing the depicted shape from another angle, improving our own knowledge and understanding of what's in front of us.

The Trap

Much like the unsuspecting mouse, lured into a trap by the seductive scent of freshly cut cheese, we unwittingly allow ourselves to be enticed into a prison of narrow-minded thinking. The source of our seduction is far more difficult to resist, however, for there is more than one piece of bait tempting us towards that cell.

There is the prospect of having to do less, which, amidst a life of slaving away to make ends meet, is a very appealing thing. Who wants to come home after a hard day at work and then put even more time and energy into sifting through the internet in search of something valuable to read or watch? No one. Not when we can simply put our feet up and click away at items on our Facebook home feed, or at the recommended and related videos on YouTube.

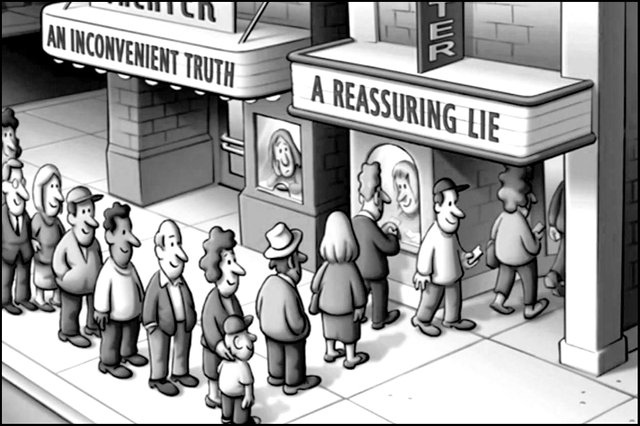

Reassurance is yet another temptation provided by such algorithms. No one takes pleasure in doubting themselves, as cognitive dissonance can be a very unsettling feeling. Why should we bother to invite criticism of our beliefs? It's much easier to have an endless string of suggested news and information solidify those beliefs for us.

Lastly, and perhaps the most tantalizing of the invitations offered by predictive algorithms, is the sweet allure of comfort. By allowing a social media platform to present us with only the types of information we agree with, we also, for the most part, remove ourselves from the possibility of interacting with those who hold differing opinions.

We can all find comfort in the avoidance of debate. It is hard work burning those extra calories in an effort to understand another's opposing point of view. But through our social circles, suggested videos and recommended news feeds, we encounter only those who were led there because they share a perspective similar to our own. This minimizes the need to engage in critical debate and to challenge our current paradigm.

Side-Effects

The price we pay for the comfort provided by predictive algorithms is often invisible to us until an unexpected moment. We believe everything is fine, until a single dose of reality shatters our narrow-minded perspective and leaves us with a crippling inability to make sense of the world we reside in.

Leading up to the 2016 US presidential election, there were millions of people, in the US and elsewhere, who could have told you without a doubt that Donald Trump was going to win. There were even thousands of videos and articles in circulation that predicted the same outcome, with evidence to support their claims.

However, the news of his victory shocked a great number of Hillary fans to their core, provoking them to tears and far worse. Many Hillary supporters even took to the streets in outrage, protesting a democratically elected president and, in effect, calling for a dictatorship instead.

Their actions were mostly the result of severe shock. It was impossible for them to comprehend how Trump could have won, or, more accurately, how Clinton could have lost. Social media algorithms had determined that they were Hillary Clinton supporters, and as such, their home feeds and suggested links, articles and videos had presented them only with positive information about her, and negative information about Trump.

They had fallen victim to the trap, believing that the information made available to them was everything they needed to know. Reading the comments on the pro-Clinton posts and videos they were directed towards, they saw an overwhelming amount of support for their candidate, which instilled in them the belief that they were part of the majority.

On the opposite side, those who had been branded as Trump supporters by social media's mathematical predictions were shown only the brighter side of Trump and the many crimes of Clinton. On the videos they watched and the posts they read, no Clinton supporters could be found. Many were surprised to find that Clinton had any supporters at all.

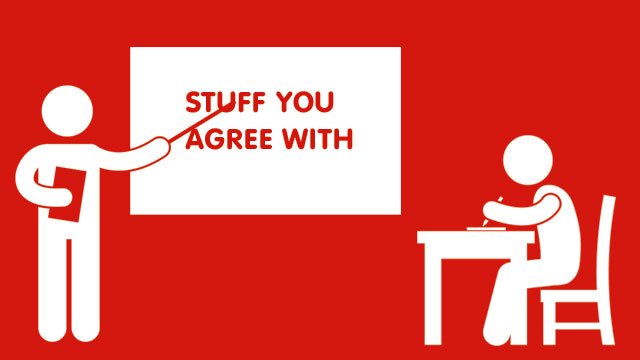

These algorithms, which determine our interests, have in effect become little more than self-perpetuating systems of confirmation bias. We are shown what we like and what we agree with. We are shown what our close friends like and agree with. We are shown other people who like and agree with those same things. Everything else doesn't exist, or at least doesn't matter. That is, of course, until the day we realise that it does matter, and we are not ready for it.
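To make that feedback loop concrete, here is a deliberately crude toy model. It is a hypothetical sketch, not how Facebook, YouTube or any real platform actually ranks content: the function `run_feed`, its `slots` and `explore_every` parameters, the genre names and the starting click counts are all invented for illustration. The feed shows whichever topics have the most clicks so far, the user clicks what they are shown, and so anything that starts even slightly behind never surfaces again.

```python
from collections import Counter


def run_feed(initial_clicks, rounds, slots=2, explore_every=None):
    """Hypothetical toy model of a self-reinforcing recommendation feed.

    Each round, the feed fills `slots` places with the topics that have the
    most clicks so far (ties broken alphabetically), and the user clicks every
    item shown. If `explore_every` is set, every that-many rounds the
    least-clicked topic is also shown, as a crude stand-in for deliberately
    seeking out something different.

    Returns (final click counts, set of topics that were ever shown).
    """
    clicks = Counter(initial_clicks)  # copy, so the caller's dict is untouched
    ever_shown = set()
    for step in range(1, rounds + 1):
        # Rank by click count (highest first), then by name for stable ties.
        ranked = sorted(clicks, key=lambda t: (-clicks[t], t))
        shown = ranked[:slots]
        if explore_every and step % explore_every == 0 and ranked[-1] not in shown:
            shown.append(ranked[-1])
        ever_shown.update(shown)
        for topic in shown:
            clicks[topic] += 1  # engagement feeds straight back into ranking
    return clicks, ever_shown


start = {"comedy": 3, "documentary": 2, "drama": 2, "horror": 1}
clicks, seen = run_feed(start, rounds=100)
print(sorted(seen))  # ['comedy', 'documentary']
```

Note that "drama" starts level with "documentary" and loses only on the tie-break, yet it is never shown in 100 rounds. Passing `explore_every=10` lets every genre surface at least occasionally, which is the whole point of deliberately stepping outside the feed.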

The Divide

Every day that we avoid conversation with those whose opinions conflict with our own, we place another proverbial block in the barricade to communication we are constructing. Before long, that wall is so high that it has become far too difficult for us to converse with anyone holding an opposing perspective without getting incredibly angry.

We are becoming too accustomed to surrounding ourselves with people who are like us. Consequently, our belief structures are losing their fluidity. Whereas once we would happily update our beliefs to something more accurate upon receiving new information, now prolonged detention within social bubbles has solidified those beliefs to the point where having them altered feels too unsettling.

We cling to our current paradigms so tightly that exposure to an unfamiliar conversation, one that could shake the foundations of our belief structure, provokes a discomfort that forces us to lash out in defence. This is why it has become so difficult for us to talk to those with conflicting viewpoints.

Herein lies the greatest problem we face. The difficulty we now have in communicating with one another is driving us deeper into the very social bubbles that made it difficult in the first place. If we do not commit to building bridges to one another and stop building barriers, then in the coming decades, as we create more labels to define ourselves by and our social circles grow smaller and smaller, we will wake up one day to find ourselves truly alone in a world of billions.

We must force ourselves to converse with others, especially those we disagree with. Whilst it can be unpleasant at first, with each debate we enter into with an open mind, our grip on our own version of reality loosens, and flexibility is restored to our belief system. I believe this is the healthiest state of mind one can possess. Only in this state can we begin to view the world through the eyes of others, and in turn be rewarded with a better understanding of it. Along this journey, we may also find that a wider perspective adds a colour and vibrancy to the world that was previously unseen to us.

One just has to watch how the Netflix algorithm works from a new account to see this in action. It seeds itself with a few questions (what genres do you like?), then makes suggestions. Once you follow one of those suggestions, it suggests things that are somewhat related (mostly by subject). Over time you end up in a very narrow band of content based on what you chose. The star rating system is based on your propensity to watch, though it gives the impression of being an external rating.

The only way to break out of this narrow band is to ask other people what they watch or hit the search button and find new stuff.

You're right. I had only thought to mention social media, but in truth it is just media in general. Great observation.

I've been thinking about the social effects of recommender systems for a while now, and I appreciate the way you've treated the subject. I hadn't thought much about this self-reinforcing problem, but it's definitely a concern.

Something that occurred to me sometime last year is the possibility that you could develop a recommender algorithm, get everybody using it, and then subtly, imperceptibly tweak the recommendations it makes in an effort to steer people to think the way you want them to. I'm sure it's possible, and I suppose it's probably what ad agencies are doing right now.

Yeah I believe things are slowly getting worse, and that something needs to be done before the damage is irreparable.

I worry that your suggestion may not be the best course of action, though. It's essentially a form of manipulation. I think we have had enough of deception, and we need to head towards an era of truth. If we are going to repair the lines of communication between our species, it must be done willingly.

I think the best we can hope for is to spread the message. But what we really need is a way to communicate that message so that people will take heed. I know that this article is not the conduit through which we will see change.

But if it can be the message that inspires someone else to send a message, which in turn inspires another to create the message that everyone will hear, then I can say that I did my part. If that makes sense.

Oh god no, I didn't mean it as a suggestion. I meant it as a warning!

Yeah, I have no idea what the solutions are. It's just so easy to isolate ourselves from opposing viewpoints these days! It's a very tricky set of problems, and I think your approach of spreading the news person to person is the only one that I can definitely believe in. Thanks!

My apologies. I must have misunderstood.

Yes, it's certainly the hard way. But perhaps the difficulty of regaining what we have lost is what, if we succeed, will remind us not to make the same mistakes again.

Were it easy, we would not appreciate it nearly as much.

I like your approach. I think Steemit would need as much understanding of the psychology of human behavior as it does algorithms.

Well, I know I don't want to see Steemit become the same type of confirmation bias machine. It's good to have communities where you can find others who share your interests. But I feel we need to be careful not to shut other communities out of our world completely.

This is a great post. I agree with you 100%. Steemit is very interesting because we are forced to look at stuff that doesn't fit in with our world view. I think that's why there's so much conflict. I would assert that only through clashes can we truly learn anything new about others and ourselves. But one has to achieve a certain level of maturity to recognize and admit when one is wrong. The ego, when allowed to roam unchallenged, is dangerous.

Yes. Very well said. I too consider the ego to be an obstacle to progress in a great number of ways, especially when it comes to communicating efficiently with others. I feel we could all benefit from a healthy dose of humility.

The next article of this type that I planned to do was actually one pertaining to the ego, so I find it interesting that you touched on it here.

Thanks a lot for the insightful comment.

Excellent post... Resteemed.

Thanks a lot. I just saw a notification for a post you tagged me in, but I'm dealing with some little devils right now, so I shall have to read it a bit later.

It was me going head-on with Bernie. I included your comment from @dantheman's post in my post.

Fantastic article!!

Thank you. I appreciate the appreciation. Lol.

Just to add: this phenomenon is called cyberbalkanization.

https://en.wikipedia.org/wiki/Splinternet

Thanks for sharing that. I had never heard the term before, but after reading up on it, I can say that it certainly applies here. It would appear that far more than just media sites are causing this division. The most important question now, I guess, is: is the division intentional?

Self-organization, limited time and limited cognition cause these bubbles. I wrote about this in one of my papers back in 2003 or 2004, I can't remember which. (It's been happening since the early internet bulletin boards.) Algorithms, if profit-driven, are just making it "worse". IMO, the world's too damn messy for sustained control. So it might be intentional, but most likely it wouldn't go "according to plan" for long.

Edit: the paper I wrote was more about Human Behaviours in Virtual Space (on the Internet). Big data and the like weren't big back then, but people were experimenting and communicating, which is why there are splinters. It's bound to happen (I can't imagine an internet without its little pockets of chatrooms, forums, groups, etc.).

Thinking about it now, my paper is probably already obsolete. Data sciences and blockchains are certainly changing up the scene.

Yeah. Facebook were really the pioneers of this sort of thing, and given all the psychological experiments they have conducted on their users since the company's inception, it's very difficult to believe that they are not aware of the division their algorithms are causing.

I'd have to say I'm leaning towards it being a deliberate wedge driven between the people. Understandable, too, because the day we move beyond our petty labelling of ourselves and others and truly begin to unite is the day we start to make changes in the world that the people currently running it do not want to see.

Digital ghettos, digital plantations, divergent reality tunnels, exclusionary belief systems: it's amazing that people living side by side, with roughly the same intelligence, can come to see the world in such incredibly different ways.

Thanks for the post.

Yes, I believe amazing is the correct word in this case.

Thanks for the comment.

Good job here; you make some good points. Perhaps we should do away with predictive algorithms and make people use their brains more often. You know what they say: use it or lose it.

My sentiment exactly.

Thanks for reading.

Interesting and very well written! And it is true that we need an era of truth, though I have no clue what that would lead to. Recently, I was reading about the Satya Yuga, or the "era of truth", as explained in Sanskrit Vedic texts, and found it to be pretty close to the way I see truth myself.

You may google Satya Yuga and wade through the results to get a better understanding.

But you Sir, have given me an idea for my next post!! :)

Is that supposedly followed by a golden age of higher human consciousness? I feel I may have come across this before.

Afaik, it is the golden age!

Ahh. Close then!

I do remember coming across this in my research some time ago. I found it interesting, because it certainly does feel as though we are experiencing something of a mass awakening. But I wonder if it is just my own reality tunnel, as described in my post, that has led me to believe in this mass awakening...

Oh, and I'm very glad to have inspired some creative thinking on your part. I consider this to be one of the primary motivations for writing, so it's good to hear that my work can inspire others to create some of their own.