Attention, all community admins and moderators.

Recently, one issue has caused serious complications, and I have seen a lot of discussion about it. Our detective team has already started working on it. I am talking about AI-generated content. Content generated by ChatGPT or other AI tools has caused great concern. This is undoubtedly bad for a blogging platform.

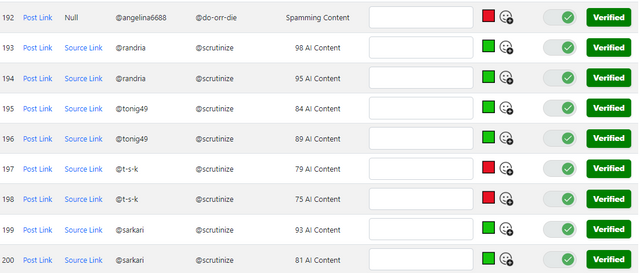

Many people may not even imagine how much AI-generated content is constantly being published here. We were shocked by how much there is. You can see it for yourself on our website: http://steemwatcher.com. Many reports are submitted there every day, and we search every day. When detectives submit reports on the portal, I have to verify them. I give a green signal when I conclude that the content is AI-generated. After receiving the green signal, the detective comments on the post.

It is a simple process, but AI-generated content makes me think hard before I reach a decision, because AI content detection tools are not always accurate. They make many errors.

A post came to my attention this morning claiming that these tools produce completely unfounded results. A post from @steemitblog is given as an example. Here is the link so you can have a look: https://steemit.com/hive-150122/@growwith. The user claims that these tools flag @steemitblog posts as AI-generated content. Yes, I would say he is right. And it is not only @steemitblog: if everyone checks their own content here, these tools will flag most of it as partly AI-generated. So are we all writing our content with AI tools?

Never. Keep in mind that these tools have not been around for very long, and they don't work perfectly. You can read the tools' own descriptions on this point; their developers have clarified it themselves. So a question arises: how do I make a decision when giving a signal?

When a detective submits a report, I check the content with multiple tools. Importantly, I only give a GREEN signal when the detector tools claim that 80% to 100% of the content is AI-generated. I don't give a green signal when I'm unsure. Even for content that has received a green signal, I will not claim my decision is 100% correct. I may be wrong, or the tools may show wrong information. I always use multiple tools to avoid wrong decisions, and I publish my conclusion only when all the tools give similar results. I use the following three websites to check:

1. https://gptzero.me/
2. https://www.zerogpt.com/
3. https://openai-openai-detector.hf.space/

When these tools report that content is AI-generated but the score is below 70 to 80 percent, it is not right to report it. I have found many examples of original content that these tools claim is 40% or 50% AI-generated.
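The decision rule described above (check with several detectors, give a green signal only when every tool reports roughly 80% or more, and only when the tools agree) can be sketched as a small function. This is a minimal sketch under stated assumptions: the scores are percentages copied by hand from each website, since no detector API is called here; the 80% threshold comes from this post, while `min_tools=2` (our reading of "multiple tools") and the `max_spread` tolerance of 10 points for "close conclusions" are hypothetical values of our own choosing.

```python
from typing import Mapping

# Threshold taken from this post: only 80%-100% AI readings qualify.
GREEN_THRESHOLD = 80.0
# Hypothetical tolerance for "close conclusions" between tools (assumption).
MAX_SPREAD = 10.0


def green_signal(scores: Mapping[str, float],
                 threshold: float = GREEN_THRESHOLD,
                 max_spread: float = MAX_SPREAD,
                 min_tools: int = 2) -> bool:
    """Return True only if enough tools were checked, every tool reports at
    least `threshold` percent AI content, and the tools roughly agree.

    `scores` maps a tool name (just a label) to the AI percentage it
    reported, entered by hand; no detector API is called here.
    """
    if len(scores) < min_tools:
        # A single tool is never enough to decide (assumed minimum of 2).
        return False
    values = list(scores.values())
    all_high = all(v >= threshold for v in values)
    tools_agree = max(values) - min(values) <= max_spread
    # When in doubt (low or conflicting readings), no green signal.
    return all_high and tools_agree


if __name__ == "__main__":
    report = {"gptzero.me": 92.0, "zerogpt.com": 88.5, "openai-detector": 95.0}
    print(green_signal(report))  # True

    borderline = {"gptzero.me": 45.0, "zerogpt.com": 50.0, "openai-detector": 40.0}
    print(green_signal(borderline))  # False
```

Requiring both a high score and agreement between tools reflects the post's caution: a single high reading, or readings that disagree widely, does not justify a green signal.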

With today's post, I simply want to clarify when we should call content "AI-generated". Sometimes a decision has to be made using both the results provided by the bot and our own judgment. It would be wrong to publish a conclusion based only on what the tool shows. The developers say the tools are being improved further, and I believe they will someday give us 100% accurate information. Until then, they will continue to draw criticism. Admins and moderators of every community must be careful about this. Someone should be found guilty after verification, not before.

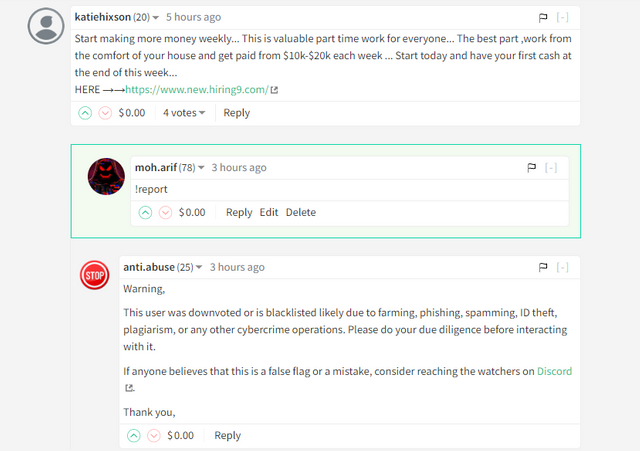

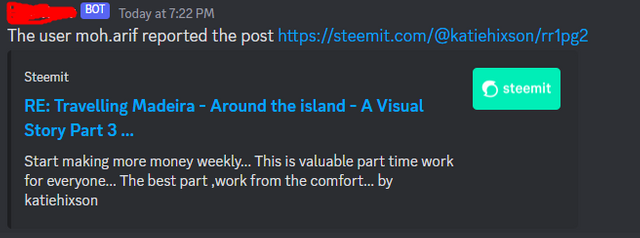

Now I will talk about a feature of Abuse Watcher. When you see any abusive activity, write the command !report under the content. The link to that content will immediately be sent to a private channel on our official Discord server, where we will review it and take action. I have noticed that many people are using the wrong command, writing variants such as #report or ! report. It should be written exactly as !report.
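To make the "exactly !report" rule concrete, here is a minimal sketch of how a bot might decide whether a comment contains a valid command. It is a hypothetical illustration, not Abuse Watcher's actual code: the function name `is_report_command`, and the choice to accept `!report` as a standalone, case-sensitive word anywhere in the comment, are our own assumptions.

```python
import re

# Hypothetical pattern: "!report" must appear as its own word, i.e. preceded
# by start-of-text or whitespace and followed by end-of-text or whitespace.
# This is an illustrative assumption, not Abuse Watcher's real parser.
REPORT_PATTERN = re.compile(r"(?<!\S)!report(?!\S)")


def is_report_command(comment: str) -> bool:
    """Return True if the comment contains the exact command !report."""
    return REPORT_PATTERN.search(comment) is not None


if __name__ == "__main__":
    samples = ["!report", "Plagiarised post !report", "#report", "! report", "!reports"]
    for text in samples:
        print(f"{text!r}: {is_report_command(text)}")
```

Under this sketch, the first two samples are accepted and the common mistakes (#report, ! report, !reports) are rejected, which is why writing the command exactly matters.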


Every community must be vigilant enough to stand up against abuse. I request every community admin and moderator to support Abuse Watcher. Only collective effort can eradicate abuse. Thanks, everyone.

Cc: @rme, @steemcurator01

[@scrutinize, @detectcrime, @shadow-the-witch, @fly-dragon, @detective-shibu]

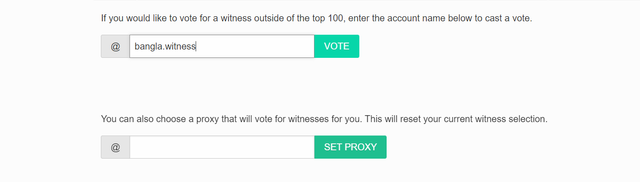

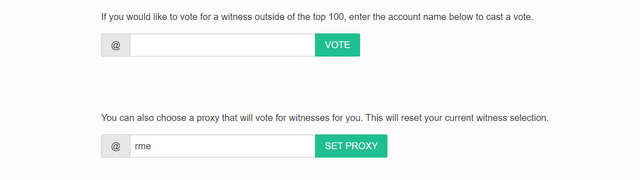

VOTE for @bangla.witness as a witness

OR

| 250 SP | 500 SP | 1000 SP | 2000 SP | 5000 SP |
|---|---|---|---|---|

Generally, we look for readings of 90%+ fake to be more certain.

Testing paragraphs individually also helps, usually excluding the beginning and end of a post, since people will often write those themselves to disguise the AI content.
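The paragraph-by-paragraph approach described in this comment (skip the opening and closing paragraphs, score the rest individually, and look for 90%+ readings) can be sketched as follows. This is a minimal sketch under assumptions: paragraphs are split on blank lines, and `score_fn` is a hypothetical stand-in for whichever detector you paste each paragraph into; no real detector API is called.

```python
import re
from typing import Callable, List, Tuple


def middle_paragraphs(post: str) -> List[str]:
    """Split a post on blank lines and drop the first and last paragraphs,
    which authors often write themselves to disguise AI text."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", post) if p.strip()]
    # With two or fewer paragraphs there is no "middle"; keep everything.
    return paragraphs[1:-1] if len(paragraphs) > 2 else paragraphs


def flag_paragraphs(post: str,
                    score_fn: Callable[[str], float],
                    threshold: float = 90.0) -> List[Tuple[int, float]]:
    """Score each middle paragraph with `score_fn` (a hypothetical detector
    returning an AI percentage) and return (index, score) pairs at or above
    `threshold`. Indices refer to the list of middle paragraphs."""
    flagged = []
    for i, para in enumerate(middle_paragraphs(post)):
        score = score_fn(para)
        if score >= threshold:
            flagged.append((i, score))
    return flagged


if __name__ == "__main__":
    post = "My own intro.\n\nParagraph A text.\n\nParagraph B text.\n\nThanks for reading!"
    # Dummy scores standing in for a real detector's readings.
    fake_scores = {"Paragraph A text.": 95.0, "Paragraph B text.": 30.0}
    print(flag_paragraphs(post, lambda p: fake_scores.get(p, 0.0)))  # [(0, 95.0)]
```

Scoring the middle paragraphs separately keeps a human-written intro or outro from diluting the overall percentage of an otherwise AI-generated post.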

I agree with you. We can recognize AI-generated content in many ways; there are many techniques for detecting it.

Some users change parts of their content to hide abuse, but it can be spotted through careful observation. Text written by a user reads one way; AI-generated content uses slightly different wording.

This is exactly what I told some of my team members: at times we don't even need a tool to detect AI content, because machine language will always differ from human language.

Rightly said.

In some cases it is easily identified.

Yeah, I thought I was the only one who had discovered it. I was telling a friend that users are using smarter means to deceive AI detectors and plagiarism detectors.

That's why I prefer to start my check from the middle of the post, on the most complex topic. That is where you easily catch them red-handed.

Others write their posts as one block without much spacing, which also reduces the effectiveness of the detectors. But as bro rex said, when multiple detectors are used, AI content is easily caught.

At times, AI content can also be detected by carefully reading and studying the post.

#crimefreesteemit

Thank you so much for the information. ❤

💝

CC: @steemitphcurator, @loloy2020, @juichi

Thank you very much for the great information. You are right that these tools are not 100% accurate. So we must combine the results from different tools, and if there is still more than an 80% chance that the content was created by AI, then we should report it.

Thanks again; we must be very careful in this regard.

Thank you for your feedback.