Where does big data come from?


The modern business market is data-driven. However technology evolves, data keeps an irreplaceable position: there is no big data without data, and a big data platform with no data behind it is an empty shell. Both a company's internal data and external data can serve as source data for a big data platform. The main data sources are databases, logs, front-end event tracking (tracking points embedded in pages and apps), and web crawlers.

1 Import from database

Before big data technology became popular, the relational database management system (RDBMS) was the main tool for data analysis and processing. Database technology has since become quite mature. When big data emerged, the industry asked whether database-style data processing could be applied to it, and SQL-on-big-data products such as Hive and Spark SQL were born.

Despite the emergence of big data products such as Hive, business data is still stored in an RDBMS in production. This is because products need to respond to user operations in real time and complete reads and writes within milliseconds, a workload that big data products are not designed for. At this point you may ask: how do we synchronize the business database to the big data platform? Generally, business data is extracted into the data warehouse through real-time or offline collection, and is then processed and analyzed. Common database import tools include Sqoop, DataX, and Canal.

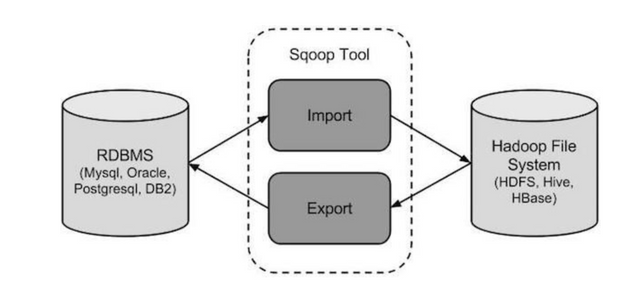

Sqoop is an Apache tool for transferring offline (batch) data between Hadoop and relational databases. It synchronizes data between relational databases (MySQL, PostgreSQL, etc.) and the HDFS, HBase, and Hive components of a Hadoop cluster, acting as a bridge between traditional relational databases and Hadoop. Like Sqoop, DataX also performs offline data transfer, and it supports data synchronization for Alibaba's family of database products.
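To make the idea of offline, batch-style import concrete, here is a minimal Python sketch. It is not how Sqoop or DataX are implemented; it only illustrates the general pattern of pulling rows newer than a saved watermark in fixed-size batches. The standard-library `sqlite3` module stands in for the business database, a plain list of CSV lines stands in for files on HDFS, and the names `extract_batch`, `sync_table`, and the `orders` table are hypothetical.

```python
import sqlite3


def extract_batch(conn, table, check_column, last_value, batch_size):
    """Fetch up to batch_size rows whose check_column exceeds last_value."""
    # Table and column names cannot be bound as SQL parameters, so this
    # sketch assumes they come from trusted configuration, not user input.
    query = (
        f"SELECT {check_column}, * FROM {table} "
        f"WHERE {check_column} > ? ORDER BY {check_column} LIMIT ?"
    )
    return conn.execute(query, (last_value, batch_size)).fetchall()


def sync_table(conn, table, check_column, last_value=0, batch_size=1000):
    """Export new rows batch by batch; return (csv_lines, new_watermark)."""
    exported = []
    while True:
        rows = extract_batch(conn, table, check_column, last_value, batch_size)
        if not rows:
            break
        for row in rows:
            # row[0] is the watermark column; row[1:] is the full table row.
            exported.append(",".join(str(value) for value in row[1:]))
        last_value = rows[-1][0]  # remember how far this run has read
    return exported, last_value


def demo():
    """Run two sync passes against an in-memory stand-in database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT)")
    conn.executemany(
        "INSERT INTO orders (id, item) VALUES (?, ?)",
        [(1, "book"), (2, "pen"), (3, "lamp")],
    )
    first, mark = sync_table(conn, "orders", "id", batch_size=2)
    # A later run only picks up rows added since the saved watermark.
    conn.execute("INSERT INTO orders (id, item) VALUES (4, 'desk')")
    second, new_mark = sync_table(conn, "orders", "id", mark, batch_size=2)
    conn.close()
    return first, mark, second, new_mark
```

Saving the watermark between runs is what lets each scheduled job transfer only new rows instead of re-reading the whole table.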

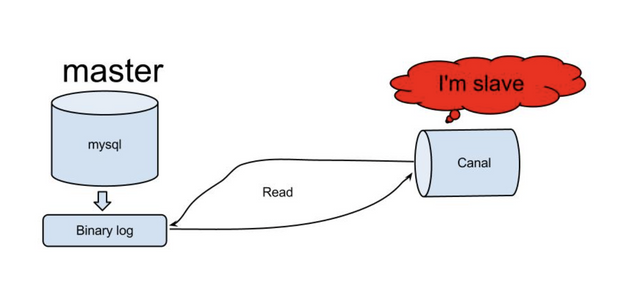

Canal reads MySQL's binary log (binlog) and transfers the recorded changes to the big data platform in real time, achieving real-time data ingestion.
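The log-based approach can be illustrated with a small Python sketch. This is not Canal's API or message format: the event dictionaries (`type`, `key`, `row`, `position`) and the functions `apply_change` and `replay` are hypothetical, chosen only to show how replaying an ordered stream of row changes keeps a downstream copy in step with the source table.

```python
def apply_change(table, event):
    """Apply one row-change event to an in-memory replica keyed by primary key."""
    kind, key = event["type"], event["key"]
    if kind in ("INSERT", "UPDATE"):
        table[key] = event["row"]
    elif kind == "DELETE":
        table.pop(key, None)
    else:
        raise ValueError(f"unknown event type: {kind}")


def replay(events, table=None, start_position=0):
    """Apply events newer than start_position; return (table, last_position)."""
    table = {} if table is None else table
    position = start_position
    for event in events:
        if event["position"] <= position:
            continue  # already applied in an earlier run, skip it
        apply_change(table, event)
        position = event["position"]
    return table, position


# Hypothetical change stream, in the order the source database committed it.
EVENTS = [
    {"position": 1, "type": "INSERT", "key": 1, "row": {"name": "alice"}},
    {"position": 2, "type": "INSERT", "key": 2, "row": {"name": "bob"}},
    {"position": 3, "type": "UPDATE", "key": 1, "row": {"name": "alicia"}},
    {"position": 4, "type": "DELETE", "key": 2, "row": None},
]
```

Tracking the last applied position means a consumer that restarts can resume from where it stopped rather than replaying the whole log.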

2 Log import

The log system records the state of a running system as text or log entries. This information can be understood as the traces that business or device behavior leaves in the virtual world. By analyzing logs, we can derive key business metrics and the operating status of devices.

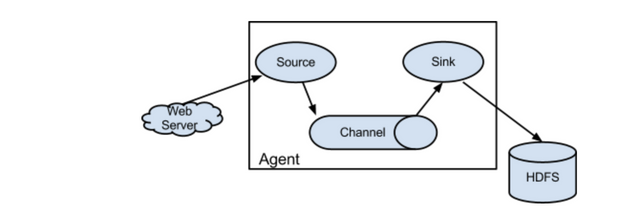

Apache Flume is a commonly used tool for big data log collection. As the figure shows, the core of Flume is the Agent, which is the smallest independently running unit. An Agent consists of three main components: Source, Channel, and Sink.

Source: Collects data, wraps it in an event, and sends it to the Channel. The data can come from enterprise servers, file systems, the cloud, data repositories, and so on.

Channel: Data is generally read (collected by the Source) faster than it is written (delivered by the Sink), so a buffer is needed to absorb the difference between the two speeds. In essence, the Channel works like a message queue: it stores the events sent by the Source and passes them on to the Sink in order.

Sink: Takes data from the Channel and sends it to big data storage such as HDFS, Hive, or HBase. The output of a Sink can also serve as the input of another Agent's Source, so Agents can be cascaded to build whatever processing topology the requirements call for.
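The Source, Channel, and Sink roles described above can be sketched in a few lines of Python. This is a toy model, not Flume code: a bounded `queue.Queue` plays the Channel, two threads play the Source and Sink, and a Python list stands in for the storage system. The names `source`, `sink`, and `run_agent` are hypothetical.

```python
import queue
import threading

_STOP = object()  # sentinel that marks the end of the stream


def source(lines, channel):
    """Wrap each raw line in an event and put it on the channel."""
    for line in lines:
        # put() blocks while the channel is full, so a fast source
        # is slowed down to the pace of the sink.
        channel.put({"headers": {}, "body": line})
    channel.put(_STOP)


def sink(channel, store):
    """Take events off the channel and append their bodies to the store."""
    while True:
        event = channel.get()
        if event is _STOP:
            break
        store.append(event["body"])


def run_agent(lines, capacity=2):
    """Run one Source -> Channel -> Sink pipeline and return what was stored."""
    channel = queue.Queue(maxsize=capacity)  # bounded buffer between the two
    store = []
    producer = threading.Thread(target=source, args=(lines, channel))
    consumer = threading.Thread(target=sink, args=(channel, store))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return store
```

Cascading two agents then amounts to feeding one agent's output into the next, for example `run_agent(run_agent(["a", "b", "c"]))`. The small `capacity` is deliberate: it shows the Channel decoupling the two sides while still bounding how much data is buffered.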