Programming Diary #34: Your post now has a permanent payout window

Background

Steemizen: The Trending page sucks and I want rewards after 7 days.

Developer: I'll make a front-end.

For almost as long as I've been here, people have complained about the same two things - the Trending page and rewards on a post drying up after 7 days.

I have spent a lot of time thinking about the first problem, but not so much about the second. People think the solution to the Trending page is simple and obvious: put an end to the paid voting bots. In reality, that's easier said than done. Instead, I have long thought that the long-term solution to the Trending page is a new generation of voting service that doesn't create dysfunctional incentives for authors and investors. I had lots of ideas about what that might look like, but none of them were clear enough to implement (with a zero-dollar budget) - until recently.

Three weeks ago, I had a manageable idea for a voting service that encourages legitimate content creation, and two weeks ago, I was motivated to get steem-python working on Windows. That led to last week, when I used steem-python to write a baby prototype of a voting service that could provide passive rewards to investors and spur legitimate creators. To be clear, at this point I wasn't really focusing on the second problem at all. Here's the roadmap, as I posted it last week:

Phase 1: Use AI and other automation to find interesting active content, post about it, and deliver beneficiary rewards to the authors (and continually refine it to drive towards finding the best content).

Phase 2: Use something like PPS to deliver (passive - no posting needed) beneficiary rewards to delegators who want to support this "attractive" content.

Phase 3: Discover exemplary content that has already paid out and deliver beneficiary rewards to those authors, too - indefinitely extending the payout lifetime of a Steem post.

So, providing a longer payout window was on my roadmap, but I thought it was months or years away.

Meanwhile, on the second problem, @etainclub recently launched eversteem for rewarding people's posts. In the almost nine years that I've been here, as far as I know, @etainclub, @knircky, and @holger80 are the only ones who have even tried to address the second problem.

The solutions from @knircky and @holger80 never got much traction (AFAIK). Since I can't read Korean, I don't know how eversteem is doing now, but I encourage people to try it out. IMO, this is solving one of Steem's fundamental challenges.

This week, however, I accidentally stumbled on an extension of the same concept that the others have already explored, so "months or years" in my roadmap quickly turned into "finished the proof of concept". The key difference here is that I'm not depending on simple comments to deliver the rewards. Instead, I'm carrying the beneficiaries in a post that has meaningful content.

It's been a couple of months since Programming Diary #33, so in today's post I'm skipping over a bunch of work on the SCA, and I'm going to focus just on the new stuff: Thoth.

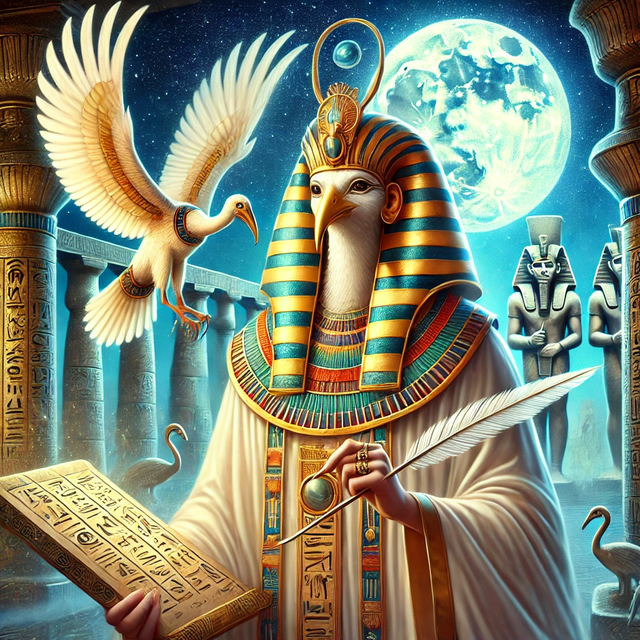

In Egyptian mythology, Thoth was the "god of the moon, wisdom, writing, hieroglyphs, science, magic, art, and judgment". Writing.... science... art... wisdom... judgment... and magic - What better mascot for a piece of code that's trying to promote legitimate content creators?

So, let's talk about my recent efforts with Thoth.

Recent Activity

The concept is this: First, create a piece of open source code that will use standard automation and AI tools to scan the blockchain for legitimate organic content and create a post to summarize it. Second, attach beneficiary rewards for the authors of the posts that get included. Third, invite "patrons" to support the service with delegations. Finally, add these patrons to the beneficiary rewards so that they can receive passive income from their SP investments.

The code will be open source so that multiple entrepreneurs can run it and they can compete on their ability to find high quality content. It's an automated version of a promoter or publicity agent.

Last weekend, I completed a very primitive implementation of the first step. To accomplish this, I'm using my own custom filtering to screen the candidate posts, followed by a free LLM from ArliAI for the AI processing. This week, I had a weird work schedule, which let me find time for some additional improvements to the pre-AI screening capabilities.

You can see in my config.ini the types of screening I'm planning to apply before engaging the AI:

### DO NOT COPY KEYS into config.template

###

[ARLIAI]

# You can get values from https://www.arliai.com/quick-start

ARLIAI_URL = https://api.arliai.com/v1/chat/completions

ARLIAI_KEY =

ARLIAI_MODEL = Mistral-Nemo-12B-Instruct-2407

[STEEM]

# Default to @social for testing

POSTING_ACCOUNT = social

# It is recommended to set these in your wallet, using "steempy"

POSTING_KEY =

STEEM_API =

####

#### From here down copy content from config.ini to config.template

####

[CONTENT]

EXCLUDE_TAGS = booming, nsfw, test

INCLUDE_TAGS =

MIN_FEED_REACH = 200

MIN_WORDS = 500

REGISTRY_ACCOUNT = penny4thoughts

### Implemented: REGISTRY_ACCOUNT (mutes only), MIN_WORDS

[AUTHOR]

LAST_BLURT_ACTIVITY_AGE = 60 ; required days since the account was active on Blurt

LAST_HIVE_ACTIVITY_AGE = 1461 ; required days since the account was active on Hive

MIN_ACCOUNT_AGE = 30

MIN_FOLLOWERS = 50

MIN_FOLLOWERS_PER_MONTH = 3

MIN_FOLLOWER_MEDIAN_REP = 0

MIN_REPUTATION = 55

### Implemented: MIN_REPUTATION, MIN_FOLLOWERS, MIN_FOLLOWERS_PER_MONTH

[BLOG]

CURATED_AUTHOR_WEIGHT = 500

NUMBER_OF_REVIEWED_POSTS = 5

# Default to @social for testing

POSTING_ACCOUNT = social

POSTING_ACCOUNT_WEIGHT = 500

### Implemented: CURATED_AUTHOR_WEIGHT, NUMBER_OF_REVIEWED_POSTS, POSTING_ACCOUNT, POSTING_ACCOUNT_WEIGHT

### [ POSTING_ACCOUNT_WEIGHT + ( NUMBER_OF_REVIEWED_POSTS * CURATED_AUTHOR_WEIGHT ) ] must be less than 10000 (i.e. 500 == 5%)

Each Thoth operator will be able to customize their own screening parameters.
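For operators who want to experiment with their own parameters, here is a minimal sketch of loading the shared sections with Python's standard `configparser` and checking the weight rule from the last comment in the file. It assumes a trimmed copy of the file inlined as a string; `split_tags`, `total_blog_weight`, and `weights_ok` are illustrative helper names, not part of the Thoth code. Note that the `;` comment on `LAST_BLURT_ACTIVITY_AGE` only parses cleanly if inline comments are enabled.

```python
import configparser

# A trimmed copy of the [CONTENT], [AUTHOR] and [BLOG] sections shown above.
# A real instance would call config.read("config.ini") instead.
SAMPLE_INI = """
[CONTENT]
EXCLUDE_TAGS = booming, nsfw, test
INCLUDE_TAGS =
MIN_WORDS = 500
REGISTRY_ACCOUNT = penny4thoughts

[AUTHOR]
LAST_BLURT_ACTIVITY_AGE = 60 ; required days since the account was active on Blurt
MIN_REPUTATION = 55

[BLOG]
CURATED_AUTHOR_WEIGHT = 500
NUMBER_OF_REVIEWED_POSTS = 5
POSTING_ACCOUNT = social
POSTING_ACCOUNT_WEIGHT = 500
"""

MAX_WEIGHT = 10000  # beneficiary weights are in basis points: 10000 == 100%


def load_config(text: str) -> configparser.ConfigParser:
    # inline_comment_prefixes makes "60 ; required days ..." parse as "60";
    # without it, the comment would become part of the value.
    config = configparser.ConfigParser(inline_comment_prefixes=(";",))
    config.read_string(text)
    return config


def split_tags(value: str) -> list:
    # "booming, nsfw, test" -> ["booming", "nsfw", "test"]; "" -> []
    return [tag.strip() for tag in value.split(",") if tag.strip()]


def total_blog_weight(config: configparser.ConfigParser) -> int:
    # POSTING_ACCOUNT_WEIGHT + (NUMBER_OF_REVIEWED_POSTS * CURATED_AUTHOR_WEIGHT)
    blog = config["BLOG"]
    return blog.getint("POSTING_ACCOUNT_WEIGHT") + (
        blog.getint("NUMBER_OF_REVIEWED_POSTS") * blog.getint("CURATED_AUTHOR_WEIGHT")
    )


def weights_ok(config: configparser.ConfigParser) -> bool:
    return total_blog_weight(config) < MAX_WEIGHT


config = load_config(SAMPLE_INI)
if not weights_ok(config):
    raise SystemExit(f"[BLOG] weights total {total_blog_weight(config)}; must be < {MAX_WEIGHT}")

print(split_tags(config["CONTENT"]["EXCLUDE_TAGS"]))      # ['booming', 'nsfw', 'test']
print(config["AUTHOR"].getint("LAST_BLURT_ACTIVITY_AGE"))  # 60
print(total_blog_weight(config))                          # 3000
```

With the default values, 500 + (5 * 500) = 3000 basis points (30%) go to beneficiaries, comfortably under the 10000 ceiling.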

Most of that should be self-explanatory, but the one thing that might not be clear is the setting for REGISTRY_ACCOUNT. That's an account that will maintain "mute" and "follow" lists that can be used by Thoth as blacklists and whitelists. For now, I'm using @penny4thoughts mute settings to provide a blacklist, but any Thoth operator could choose their own accounts. This avoids the need to use custom_json or static files in order to maintain the list. Eventually, I'll be adding a whitelisting capability, too.
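Here is a minimal sketch of how the registry lists could be applied once they have been fetched. The `Candidate` class and `apply_registry` function are hypothetical, the step that reads the mute and follow lists from the blockchain is deliberately left out, and the whitelist branch reflects the planned feature rather than current behavior.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass
class Candidate:
    author: str
    permlink: str


def apply_registry(
    candidates: Iterable[Candidate],
    muted: Set[str],
    followed: Optional[Set[str]] = None,
) -> List[Candidate]:
    """Filter candidate posts using the REGISTRY_ACCOUNT's lists.

    muted:    accounts the registry mutes (blacklist; always excluded).
    followed: accounts the registry follows. If supplied, it acts as a
              whitelist and only those authors are kept (hypothetical,
              since the whitelist feature isn't implemented yet).
    Fetching the two lists from the blockchain is not shown here.
    """
    kept = []
    for post in candidates:
        if post.author in muted:
            continue  # the blacklist always wins
        if followed is not None and post.author not in followed:
            continue  # whitelist mode: the author must be followed by the registry
        kept.append(post)
    return kept


posts = [Candidate("alice", "a-post"), Candidate("spammer", "b-post"), Candidate("bob", "c-post")]
print([p.author for p in apply_registry(posts, muted={"spammer"})])
# ['alice', 'bob']
print([p.author for p in apply_registry(posts, muted={"spammer"}, followed={"bob", "spammer"})])
# ['bob']
```

Checking the blacklist before the whitelist means a muted account stays excluded even if the registry also follows it.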

And here is the current iteration of the AI prompt that gets activated on posts that pass all the way through the screening:

Evaluate the following article based on quality, originality, and value to readers. Analyze:

- Writing quality (grammar, organization, readability)

- Originality (unique perspectives, not AI-generated or plagiarized)

- Engagement potential (interesting, informative, thought-provoking)

- Appropriate content (no gambling, prize contests, cryptocurrency, or prohibited topics)

If ANY of these conditions are met, respond ONLY with: "DO NOT CURATE."

- Content appears AI-generated or plagiarized

- Poor writing quality (confusing, repetitive, disorganized)

- Focuses on gambling, prize contests, giveaways, or any competitions involving rewards

- Contains cryptocurrency discussion, trading advice, or token promotion

- Lacks substance or original thinking

IMPORTANT: Sports competitions and athletic events are acceptable topics. Only reject posts about contests where participants can win prizes, money, or other rewards.

Otherwise, create a curation report with ONLY the following three sections and NOTHING else:

1. KEY TAKEAWAYS (3-5 bullet points summarizing the main insights using SEO-friendly keywords)

2. TARGET AUDIENCE (Who would find this content most valuable and why)

3. CONVERSATION STARTERS (3 thought-provoking questions that could spark discussion around this topic)

DO NOT add any additional comments, conclusions, or sections beyond these three. Your response must end after the third section.

Here is the article:
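Because the `ARLIAI_URL` in config.ini points at a `/v1/chat/completions` endpoint, a request could look something like the sketch below. It assumes the endpoint accepts an OpenAI-style request body with bearer-token authentication; `build_payload`, `ask_llm`, and `is_rejected` are hypothetical names, and the network call is defined but not exercised here. The rejection check is deliberately loose, since the model doesn't always reply with the exact phrase.

```python
import json
import urllib.request

# Values mirror the [ARLIAI] section of config.ini.
ARLIAI_URL = "https://api.arliai.com/v1/chat/completions"
ARLIAI_MODEL = "Mistral-Nemo-12B-Instruct-2407"
REJECT_MARKER = "DO NOT CURATE"


def build_payload(prompt: str, article: str, model: str = ARLIAI_MODEL) -> dict:
    # Assumption: an OpenAI-style chat-completions body. The article text is
    # appended after the prompt, which ends with "Here is the article:".
    return {
        "model": model,
        "messages": [{"role": "user", "content": f"{prompt}\n\n{article}"}],
    }


def ask_llm(payload: dict, api_key: str, url: str = ARLIAI_URL) -> str:
    # Network call (not run in the checks below); the response shape
    # choices[0].message.content is also an OpenAI-compatibility assumption.
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


def is_rejected(reply: str) -> bool:
    # Look for the marker anywhere in the reply, ignoring case, because the
    # model may add quotes, punctuation, or extra words around it.
    return REJECT_MARKER in reply.upper()
```

In this sketch, `ask_llm` would be called only for posts that survive the pre-AI screening, and a reply for which `is_rejected` returns `True` would simply be dropped.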

Only a few of the screening settings have been implemented so far, but more will follow. Also, it's an AI (and a basic one at that), so it hallucinates sometimes and doesn't always obey the prompt very well. C'est la vie. It's usually not too bad, though.
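As an illustration, the settings marked as implemented in config.ini (MIN_WORDS, MIN_REPUTATION, MIN_FOLLOWERS, MIN_FOLLOWERS_PER_MONTH) could be combined into a single pre-AI check like the one below. This is a sketch under stated assumptions: the reputation conversion uses the commonly cited condenser-style formula, the word count is naive, and "followers per month" is interpreted as followers divided by account age in 30-day months. `passes_screen` and its helpers are hypothetical names.

```python
import math
import re


def reputation_score(raw: int) -> float:
    """Map a raw on-chain reputation integer to the familiar score (new accounts = 25).

    Follows the widely used condenser-style formula; condenser also truncates
    the result to an integer for display, which is skipped here.
    """
    if raw == 0:
        return 25.0
    level = max(math.log10(abs(raw)) - 9, 0.0)
    if raw < 0:
        level = -level
    return level * 9 + 25


def word_count(body: str) -> int:
    # Naive: counts every whitespace-separated token, including markdown and URLs.
    return len(re.findall(r"\S+", body))


def passes_screen(body: str, raw_rep: int, followers: int, account_age_days: int,
                  min_words: int = 500, min_rep: float = 55,
                  min_followers: int = 50, min_followers_per_month: float = 3) -> bool:
    """Hypothetical pre-AI screen using the default values from config.ini."""
    if word_count(body) < min_words:
        return False
    if reputation_score(raw_rep) < min_rep:
        return False
    if followers < min_followers:
        return False
    # Assumption: followers divided by account age in 30-day months,
    # treating accounts younger than a month as one month old.
    months = max(account_age_days / 30, 1)
    return followers / months >= min_followers_per_month


article = "word " * 600
print(passes_screen(article, raw_rep=10**13, followers=120, account_age_days=365))  # True
print(passes_screen(article, raw_rep=10**13, followers=60, account_age_days=1095))  # False
print(passes_screen(article, raw_rep=10**12, followers=120, account_age_days=365))  # False
```

The second author fails on growth (60 followers over roughly 36.5 months is about 1.6 per month), and the third fails on reputation (a raw value of 10^12 maps to a score of 52).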

This week, however, I got frustrated with waiting for new posts to arrive on the blockchain, and it occurred to me that testing would be much faster if I looked at old posts, since I wouldn't need to wait 3 seconds between blocks. And so, just like that, the problem of delivering beneficiary rewards to authors of historical posts was handled.
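The arithmetic behind scanning history is simple enough to sketch. Assuming a steady 3-second block interval, a day is 86400 / 3 = 28800 blocks, so anything more than 7 * 28800 = 201600 blocks behind the head block comes from posts that have already paid out. The helper names below are hypothetical, and the resulting range would be handed to whatever block reader you use.

```python
BLOCK_INTERVAL_SECONDS = 3          # Steem targets one block every 3 seconds
SECONDS_PER_DAY = 24 * 60 * 60
PAYOUT_WINDOW_DAYS = 7
BLOCKS_PER_DAY = SECONDS_PER_DAY // BLOCK_INTERVAL_SECONDS  # 28800


def block_days_ago(head_block: int, days_ago: float) -> int:
    """Approximate block number `days_ago` days before head_block.

    Approximate because missed blocks make the real interval slightly longer.
    Assumes head_block is much larger than the look-back distance.
    """
    return head_block - int(days_ago * BLOCKS_PER_DAY)


def historical_range(head_block: int, days_ago: float, span_blocks: int) -> range:
    """Hypothetical helper: up to span_blocks blocks starting days_ago days back,
    clipped at the block roughly 7 days back so every post found there has
    already paid out. If days_ago is under 7, nothing qualifies and the
    range is empty.
    """
    newest_paid_out = block_days_ago(head_block, PAYOUT_WINDOW_DAYS)
    first = block_days_ago(head_block, days_ago)
    last = min(first + span_blocks - 1, newest_paid_out)
    return range(first, last + 1)


print(block_days_ago(100_000_000, 7))               # 99798400
print(len(historical_range(100_000_000, 30, 100)))  # 100
print(len(historical_range(100_000_000, 3, 100)))   # 0
```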

Test examples of the Thoth output can be seen here for active posts and here for historical posts. In both cases, beneficiary rewards from the posts are directed to the respective authors.

So, addressing the third goal was much easier than I expected, but it appears that the second goal (delivering beneficiary rewards to patrons/delegators) might be harder. From GitHub, I thought that the limit of 8 beneficiaries per post was imposed by condenser and that I could use up to 128 through Python. Unfortunately, Python also seems to be limited to 8 beneficiaries. This makes it difficult to fit both authors and delegators (patrons) into a single post. I'm not sure how I'm going to handle that yet.
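To make the slot budget concrete, here is a sketch of assembling a beneficiary list under the 8-entry limit. With NUMBER_OF_REVIEWED_POSTS = 5, authors take up to five slots, leaving at most three for patrons, assuming the posting account keeps its share as the post's author rather than occupying a slot. The helper names are hypothetical, and the sorted-by-account ordering and the 10000 basis-point ceiling reflect my understanding of how Steem validates beneficiaries in the `comment_options` operation, so treat them as assumptions to verify.

```python
from typing import Dict, Iterable, List

MAX_BENEFICIARIES = 8     # apparent per-post limit (condenser, and apparently steem-python too)
MAX_TOTAL_WEIGHT = 10000  # basis points: 10000 == 100% of the author reward


def build_beneficiaries(authors: Iterable[str], author_weight: int,
                        patrons: Iterable[str] = (), patron_weight: int = 0,
                        max_slots: int = MAX_BENEFICIARIES) -> List[Dict[str, object]]:
    """Hypothetical helper: merge authors and patrons into one beneficiary list."""
    weights: Dict[str, int] = {}
    # An account listed twice gets one slot with the combined weight,
    # since each account may appear only once.
    for account in authors:
        weights[account] = weights.get(account, 0) + author_weight
    for account in patrons:
        weights[account] = weights.get(account, 0) + patron_weight
    if len(weights) > max_slots:
        raise ValueError(f"{len(weights)} beneficiaries; limit is {max_slots}")
    total = sum(weights.values())
    if total > MAX_TOTAL_WEIGHT:
        raise ValueError(f"beneficiary weights total {total}; limit is {MAX_TOTAL_WEIGHT}")
    # Assumption: the chain rejects beneficiaries that are not sorted by account name.
    return [{"account": name, "weight": w} for name, w in sorted(weights.items())]


def free_patron_slots(num_authors: int, max_slots: int = MAX_BENEFICIARIES) -> int:
    return max(max_slots - num_authors, 0)


authors = ["carol", "alice", "bob", "alice", "dave"]  # five reviewed posts, one repeat author
print(build_beneficiaries(authors, author_weight=500))
print(free_patron_slots(5))  # 3
```

Merging repeat authors is one small way to free up slots; it does not solve the underlying limit.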

Next up

In the next two weeks, I expect to continue focusing on improving the pre-AI screening by implementing some of the other constraints that are specified in my config.ini file. I'll also be thinking about how to deal with the apparent limit on beneficiaries.

Longer term, I'll be setting it up to run on a dedicated account, adding voting, and encouraging others to make use of the tool, too. The goal here is to increase the attractiveness of the blockchain, not necessarily to generate rewards for myself. Each operator can choose their own screening criteria and use the LLM model of their own choosing, so having multiple copies running means that different posts will be highlighted and different styles of summary posts can be created.

Reflections

Why would an investor delegate to a service like this?

First, if Thoth is good at picking out content, the investors should get back rewards from other voters - not just from their own delegations. This means that a good Thoth instance could - conceivably - deliver higher ROI than the current generation of voting service.

Next, if Thoth content reduces the spam that currently dominates the Trending page, it might bring more content creators to the blockchain.

Third, if Thoth provides lifetime payouts to creators, it might help with user retention.

Finally, if Thoth brings more creators to the blockchain and helps to retain the creators that we have, then the investors' stake might gain in value instead of being constantly devalued the way it is in the current ecosystem.

But AI content is against the rules

This is a sticky wicket, and I'm definitely pushing boundaries with this concept. In my opinion, AI is here to stay and Steemizens should find ways to use it that will help the ecosystem. AI that masquerades as a human should definitely be discouraged, but I - personally - think that AI should be welcomed if it does useful stuff without pretending to be human.

I think that Thoth is doing useful stuff. Specifically, it offers potential improvement for two of Steem's fundamental pain points: (i) Posts don't accrue rewards after seven days; and (ii) The Trending page sucks.

Also, @justinsunsteemit has been exploring the use of AI with Steemit, although we don't know what he has in mind.

Further, we have some precedents with accounts like @trufflepig or @realrobinhood (among others).

I'll be looking for feedback on this point. I definitely don't want to spend a lot of time creating a service that's just gonna get downvoted into oblivion.

But some bad posts will get promoted

True. It's unavoidable that Thoth will accidentally pick out some low quality, AI-Generated, and even plagiarized posts. But when these are discovered, parameters will be adjusted and the problem will be minimized.

The important question is, "compared to what"? In aggregate, I might be wrong, but I think that the ecosystem will be better after Thoth than before it.

Conclusion

So, that's how I spent my coding time in the last few weeks. I got steem-python running on Windows and used it to create Thoth, an AI curation assistant who will make a run at improving two of Steem's fundamental problems - the low quality trending page and the short rewards lifetime.

Let me know what you think!

Thank you for your time and attention.

As a general rule, I up-vote comments that demonstrate "proof of reading".

Steve Palmer is an IT professional with three decades of professional experience in data communications and information systems. He holds a bachelor's degree in mathematics, a master's degree in computer science, and a master's degree in information systems and technology management. He has been awarded 3 US patents.

Pixabay license, source

Reminder

Visit the /promoted page and #burnsteem25 to support the inflation-fighters who are helping to enable decentralized regulation of Steem token supply growth.

This is a very good article, and you expressed some hopes that are important for the elevation of Steemit. I am not sure I understand all of it, but it's very early in the morning here.

I would like to join in on the development, and maybe I'll be able to do it later this year, once I get unlimited-bandwidth, high-speed Internet.

I too have been here nine years now and...

Thanks for the feedback, and your participation in development would definitely be welcome!

Thanks

I like it and there's plenty to think about in there.

As somebody who curates manually, and who also likes your idea, what would be the best way of supporting this? Presumably, in my case, I'm best off keeping my STEEM Power rather than delegating it to you? But without a decent SP base, can Thoth have a big enough impact?

Given that there are already people highlighting "active" content, should Thoth focus on content that isn't active and has already paid out? That would make it unique on Steemit and therefore more attractive. In my opinion, this would also help to focus people on leaving something worth leaving, rather than something that is irrelevant as soon as the "POST" button is pressed. It should also improve the quality of what Thoth highlights.

In my opinion, the posts selected don't highlight the best of Steem, past or present, so I think the selection algorithm will need some adjustment. It's a proof of concept, though, and in my opinion, it's looking good.

I'd also think about attention span. I'm not lazy but I'm "Time Poor". So it's too text heavy for my liking. I wonder if the "Target Audience" is necessary for each post? Perhaps a general theme for each grouping would suffice? Generally speaking, I'll read the post title and think "'Crafting the Murderers of Tomorrow, TODAY!'" - that sounds interesting (click bait at its finest - it wasn't) and "'How $PUSS Could Change Digital Identity In The Metaverse'" sounds like one of the Nigerian Crypto Experts that I caught plagiarising has moved on to AI content creation about subjects he knows little about.

In the case of the "Active" version, the presence of this single author would stop me from supporting the post - and therefore the other authors. (Although the ChatGPT post will get read once I finish replying to you.)

I also wonder if Thoth could self-learn by analysing the success of each post it produces? Not being biased towards particular authors (presumably highlighting an author would encourage them to vote) but based upon the content / tags that are used. It might learn that #gaming attracts more readers whereas #thediarygame doesn't - eventually leading to a weighted bias in content curated. You could even introduce some bias now based upon your own opinions of the /tags page.

Final thought - It's a shame that @thoth went to some pointless account 9 years ago.

As always, an interesting article from you :-)

In fact, in the little time I've been working on improving the trending algorithm, I've often thought that an AI could perhaps recognise very well whether a post is ‘trending’ or ‘hot’ based on certain parameters.

At the moment, I can only specify rigid parameters with certain weightings. How many replies were there, how many from different authors, how many votes, how many resteems, etc.?

But an AI could evaluate the actual post and include the text, as well as the conversation in the comments. In my opinion, that would achieve much better results.

Unfortunately, I don't have the time to look at your code at the moment. But if you could teach the AI to evaluate the posts and their comments in the sense mentioned, the upper limit of beneficiaries in Python would only be a minor problem.

I am curious!

Ugh... I started this from the perspective of handling new posts, so there would be no replies or resteems to worry about. I was happily ignoring those. But, you're right that this would be a good way to improve it for the historical posts. I'd probably need a better LLM model and a machine learning step in order to really do that well, but I'll start giving it some thought. I can probably make some progress by passing the replies through the free LLM. I am also starting to think that I might need to switch from a screening-only perspective to a screening + scoring approach for the pre-AI checks - as with the trending scores.

Thanks for the feedback!

I still think you're going to struggle with the ROI issue. If delegators need to share with authors of old posts, they're likely to get lower returns than the de facto 100% self-voting of the currently established bots. This is potentially even worse if some of the old creators aren't active on the platform anymore; I think delegators will find it hard to see the value proposition there. Maybe have the selection criteria focus on old posts from currently active creators, so there's a clearer link for how the incentives could work?

On Substack Notes, the platform's social-media layer, they recently implemented a change that adds a little "from the archives" tag when it surfaces older content in your timeline, and that changed my subjective reaction from something like "why is it showing me these years-old posts!?" to "that's cool, they're trying to get people to not treat the platform as being as ephemeral as other social media". So I think that supports the notion that showcasing older content can make sense, but figuring out how to do it smoothly can take some work. They've said that on Substack they're trying to optimize their algorithm for paid subscription signups. Maybe follower growth for Thoth-featured creators would be a useful metric for judging how well it's working.

I'm not sure there's enough organically-voted SP for that to work out.

Maybe. That's why I hedged my one statement about ROI with "conceivably". I guess there's no way to know without running the experiment. I like this idea:

I'll probably add that to my list of screening parameters. I was thinking about adding a check for number of active followers, too. Also, once the whitelist feature is added, a Thoth operator could limit it to just a specific set of trusted creators - which offers a more direct patronage style of relationship.

You're way ahead of me here. This is definitely a good idea. I hadn't even started thinking about measuring success.

Probably true, but hopefully not forever.

Get Your Steem Posts Featured!

Selected by SteemChat.org