Towards the biological and machine interface

Source

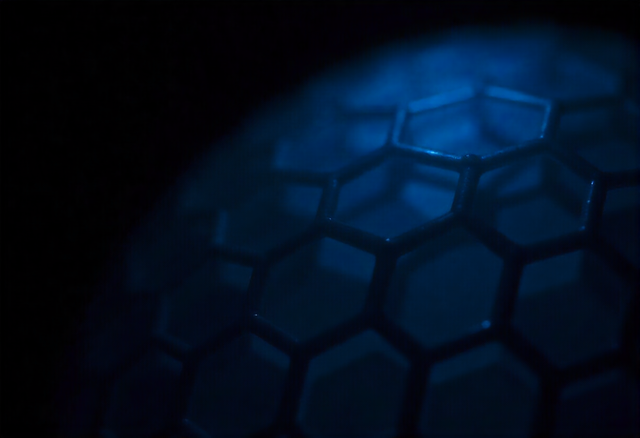

What will be the next frontier in robotics? One candidate is this robot dog, which is guided by a miniature human brain grown in a laboratory. Researchers in the United States demonstrated the idea by attaching brain organoids, tiny 3D models of the brain, to a graphene interface and using light to stimulate and read neural responses in real time. The result suggests that such robots can form a kind of symbiosis between organic matter and silicon.

The organoid tends to learn and reorganize its connections, a property known as neuroplasticity, which traditional chips do not exhibit naturally. This opens up possibilities for more responsive prosthetics, adaptive robotics, and even biological computing based on living tissue.